Management of Smart Centres’ Centrelink Telephone Services — Follow-up

The objective of the audit was to examine the extent to which the Department of Human Services (Human Services) has implemented the recommendations made by the Australian National Audit Office (ANAO) in Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink Telephone Services; as well as Human Services’ performance against call wait time and call blocking metrics.

Summary and recommendations

Background

1. The Department of Human Services (Human Services) delivers payments and associated services on behalf of partner agencies, and provides related advice to government on social welfare, health and child support delivery policy.1 Through its Social Security and Welfare Program, Human Services delivers payments and programs that support families, people with a disability, carers, older Australians, job seekers and students.2 In 2017–18, Human Services delivered $112.4 billion in social security payments to recipients.3

2. Human Services offers a variety of service delivery options to customers including face-to-face services for Centrelink and Medicare in service centres4, as well as telephony and digital services. In 2017–18, Human Services received approximately 17 million visits to service centres and handled approximately 52 million calls for Centrelink, Child Support and Medicare Program services.5 In 2017–18, there were also 49.1 million Centrelink transactions for digital and self-service.6

3. In 2017–18 the Average Speed of Answer7 for calls to Centrelink was 15 minutes and 58 seconds against a target of less than or equal to 16 minutes.8

Rationale for undertaking the audit

4. This audit is to follow up on recommendations made in Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink telephone services.9 The Joint Committee of Public Accounts and Audit (JCPAA) has shown interest in performance reporting relating to Centrelink telephony, specifically recommending more complete and publicly available data on the performance of these services. The Community Affairs Legislation Senate Committee also maintains ongoing interest in call wait times and performance reporting. There is regular media interest in the call wait times experienced by Centrelink customers.

Audit objective and criteria

5. The objective of the audit was to examine the extent to which Human Services has implemented the recommendations made by the Australian National Audit Office (ANAO) in Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink Telephone Services; as well as Human Services’ performance against call wait time and call blocking10 metrics.

6. To form a conclusion against the audit objective, the ANAO adopted the following high level audit criteria:

- Human Services has implemented a channel strategy that effectively supports the transition to digital service delivery and the management of call wait times.

- Human Services has implemented an effective quality framework to support the quality and accuracy of Centrelink telephone services.

- Human Services has implemented effective performance monitoring and reporting arrangements to provide customers with a clear understanding of expected service standards.

7. The audit did not include an examination of Smart Centres’ processing services, other than the processing that is done as part of the telephone service; or Smart Centres’ Medicare and Child Support telephone services.

8. The audit did not directly follow up on the implementation of the JCPAA recommendations. However, the audit considered the intent of the JCPAA recommendations and their relationship with the original ANAO recommendations.

Conclusion

9. As at November 2018, Human Services has fully implemented one and partially implemented two of the three recommendations made in Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink Telephone Services. Human Services’ performance against the Average Speed of Answer Key Performance Indicator (KPI) has remained largely stable since the previous audit.

10. In response to the recommendation in the previous Auditor-General report, Human Services has developed two channel strategies. The first was not effectively implemented. Human Services is currently developing mechanisms to support the implementation of the revised strategy. The transition to digital service delivery and the management of call wait times are supported by individual projects within the department.

11. Human Services has effectively applied the department’s Quality Framework to Centrelink Smart Centres’ telephony staff supporting the quality and accuracy of telephone services.

12. Human Services’ telephony program has appropriate data and largely effective internal performance reporting for management purposes. External reporting does not provide a clear understanding of the overall customer experience.

Supporting findings

Managing call wait times and supporting digital service delivery

13. In late 2016 Human Services developed the Channel Strategy 2016–19; however, the strategy was not used to guide decision-making activity across the various channels. A revised channel strategy was endorsed in June 2018 that more clearly articulates initiatives that will be completed and how these link to the strategy’s key objectives of reducing preventable work, increasing digital take up, and improving customer experience and staff engagement. Governance and reporting arrangements have not yet been fully implemented and it is too early to assess the effectiveness of the revised channel strategy.

14. Human Services does not have appropriate mechanisms in place to monitor and report on the effectiveness of its transition to digital services, with only one high-level performance measure in place. The measure, which is the percentage increase in the total number of interactions conducted via digital channels compared to the previous year, does not examine the effectiveness, intended outcomes or the impact on other channels of the shift to digital services across the department. Human Services has identified a need to improve indicators in this area and is working to address these limitations.

15. Human Services has developed a strategy to assess the benefits to its telephony services made under its Telephony Optimisation Programme. Although the strategy details the management, metrics, targets and reporting for each sub-project, the current benefits realisation approach does not clearly articulate how each individual sub-project contributes to the Programme’s overall objectives and key performance measures. This potentially hinders prioritisation of future telephony improvement activities. The Telephony Optimisation Programme remains underway and Human Services intends to assess its impact on the management of call wait times and increased call capacity at the end of 2018 and again in mid-2019.

16. Human Services has undertaken an evaluation of its pilot program to test whether use of an external call centre provider was feasible to increase call capacity. Additional resources have been allocated following the evaluation, which found the model was effective and comparable to the department’s telephony service delivery workforce. The direct impact this approach has had on the number of busy signals and call capacity is unclear due to a range of other factors influencing these outcomes, such as seasonal variations, other policy changes, and impacts from the Telephony Optimisation Programme projects.

Quality and accuracy of Centrelink telephone services

17. Quality assurance activities for Centrelink Smart Centres’ telephony services are undertaken in accordance with the Human Services Quality Framework. There are largely effective quality assurance mechanisms in place to support the consistency of service and information provided to customers, except that not all required evaluations are currently completed and calibration activities have not been applied consistently across all sites.

18. The Quality Call Framework and the Quality On Line processes apply to all staff who provide Centrelink telephone services regardless of staff classification, employment status or work type. Human Services is actively exploring options to further improve quality processes, such as a pilot currently underway to trial Remote Call Listening evaluations. Monitoring and analysis of quality assurance activities occurs regularly within Smart Centres and at the strategic level to inform continuous improvement activities.

Performance monitoring and reporting

19. Human Services collects appropriate performance data for internal operational management of its telephony services. Performance information is regularly reported to Smart Centre management and used to identify local performance trends, adjust resource allocation and consider staff development needs. Human Services’ Executive receive performance reporting to inform monitoring against call wait times and call blocking to support achievement of the external Average Speed of Answer Key Performance Indicator. Reporting to Executive does not provide full insight into the overall customer experience – such as the time spent waiting before customers abandon calls or the number of calls answered within specified timeframes. This information would support Human Services to continue improvements in the telephony channel and the transition to digital services.

20. Human Services’ external reporting of telephone service performance is not appropriate as it does not provide a clear understanding of the service a customer can expect. The Average Speed of Answer Key Performance Indicator does not consider the various possible outcomes of a call, such as abandoned calls. Human Services has undertaken several reviews of its performance metrics; however, it has not yet identified and finalised its preferred set of updated metrics. Therefore, it has only partially implemented recommendation three of Auditor-General Report No. 37 of 2014–15. No changes have yet been made to external performance information to provide a clearer understanding of the service experience a customer can expect.
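The limitation of a single average can be illustrated with a minimal Python sketch. The call records, the five-minute threshold and the choice to average over answered calls only are all hypothetical assumptions made for illustration; none of them is Human Services data or the department's published methodology.

```python
from statistics import mean

# HYPOTHETICAL call records: (seconds waited, outcome).
# Illustrative only; not Human Services data.
calls = [
    (60, "answered"),
    (120, "answered"),
    (300, "answered"),
    (1500, "answered"),
    (2400, "answered"),
    (900, "abandoned"),
    (1800, "abandoned"),
]

THRESHOLD_SECONDS = 5 * 60  # hypothetical "answered within" timeframe

answered = [wait for wait, outcome in calls if outcome == "answered"]
abandoned = [wait for wait, outcome in calls if outcome == "abandoned"]

# Assumption: the average is taken over answered calls only.
average_speed_of_answer = mean(answered)

# Measures of the kind the report says the average does not convey.
share_within_threshold = sum(w <= THRESHOLD_SECONDS for w in answered) / len(answered)
abandonment_rate = len(abandoned) / len(calls)
average_wait_before_abandon = mean(abandoned)

print(f"Average speed of answer: {average_speed_of_answer} s")
print(f"Answered within threshold: {share_within_threshold:.0%}")
print(f"Abandonment rate: {abandonment_rate:.0%}")
print(f"Average wait before abandoning: {average_wait_before_abandon} s")
```

In this made-up sample the average sits well above the wait that most answered callers experienced and says nothing about the callers who gave up, which is the kind of gap the finding describes.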

21. External reporting on the performance of Centrelink telephony services remains limited to annual reporting of the single Average Speed of Answer Key Performance Indicator within the department’s Annual Performance Statement.

Recommendations

Recommendation no. 1

Paragraph 2.14

The ANAO recommends that Human Services further develop implementation plans and monitoring and reporting arrangements to provide its executive with a holistic view of the effectiveness of the Channel Strategy to support the transition to digital service delivery and assist with the management of call wait times.

Department of Human Services response: Agreed

Recommendation no. 2

Paragraph 4.32

Human Services finalise its review of Key Performance Indicators and implement updated external performance metrics for the 2019–20 Portfolio Budget Statement.

Department of Human Services response: Agreed

Summary of entity response

22. The proposed audit report was provided to the Department of Human Services, which provided a summary response that is set out below. The full department response is reproduced at Appendix 1.

The Department of Human Services (the department) welcomes this report, and considers that implementation of its recommendations will enhance the delivery and management of telephony and digital services by reducing preventable work, increasing digital take-up and improving customer experience and staff engagement.

The department agrees with the Australian National Audit Office’s (ANAO’s) recommendations and has commenced the work necessary to implement them. The Department has implemented improvements to monitoring and reporting arrangements, which will provide the Department’s executive leadership team with enhanced visibility of progress in delivering its digital transformation strategy. In addition, the Department is currently reviewing performance measures relevant to telephony services, including consulting with relevant stakeholders. Any changes are expected to be in place to support the 2019–20 Portfolio Budget Statement.

Key messages from this audit for all Australian Government entities

Below is a summary of key messages, including instances of good practice, which have been identified in this audit that may be relevant for the operations of other Commonwealth entities.

Policy / program implementation

Performance and impact measurement

1. Background

Introduction

1.1 The Department of Human Services (Human Services) delivers payments and associated services on behalf of partner agencies, and provides related advice to government on social welfare, health and child support delivery policy.11 Through its Social Security and Welfare Program, Human Services provides social security assistance through various payments and programs to assist families, people with a disability, carers, older Australians, job seekers and students.12 In 2017–18, Human Services delivered $112.4 billion in social security payments to recipients.13

1.2 Human Services offers a variety of service delivery options to customers including face-to-face services for Centrelink and Medicare in service centres14, as well as telephony and digital services. In 2017–18, Human Services received around 17 million visits to service centres and handled around 52 million calls for Centrelink, Child Support and Medicare Program services.15 In 2017–18, there were also 49.1 million Centrelink transactions for digital and self-service.

1.3 Figure 1.1 shows the total number of calls received by Human Services under Program 1.1 Services to the Community – Social Security and Welfare since July 2014.

Figure 1.1: Total number of calls received by Human Services under Program 1.1 Services to the Community — Social Security and Welfare

Source: ANAO analysis of Human Services data.

1.4 In 2017–18, the Average Speed of Answer16 for calls to Centrelink was 15 minutes and 58 seconds against a target of less than or equal to 16 minutes.17 Table 1.1 shows that since Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink Telephone Services (the previous ANAO audit)18, the average speed of answer has remained largely stable.19

Table 1.1: Results against the Average Speed of Answer KPI target

| Financial Year | Result | Target |
|---|---|---|
| 2014–15 | 15 minutes and 40 seconds | ≤ 16 minutes |
| 2015–16 | 15 minutes and 9 seconds | ≤ 16 minutes |
| 2016–17 | 15 minutes and 44 seconds | ≤ 16 minutes |
| 2017–18 | 15 minutes and 58 seconds | ≤ 16 minutes |

Source: Human Services Annual Reports.
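As a rough illustration of how close each result in Table 1.1 sits to the target, the following Python sketch converts the published results to seconds and computes the headroom under the 16-minute target. The function and variable names are illustrative only and do not come from the report.

```python
# Table 1.1 results, transcribed as (minutes, seconds).
RESULTS = {
    "2014–15": (15, 40),
    "2015–16": (15, 9),
    "2016–17": (15, 44),
    "2017–18": (15, 58),
}
TARGET_SECONDS = 16 * 60  # KPI target: less than or equal to 16 minutes


def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes-and-seconds result to total seconds."""
    return minutes * 60 + seconds


def margin_to_target(minutes: int, seconds: int) -> int:
    """Seconds of headroom under the target (a negative value would be a miss)."""
    return TARGET_SECONDS - to_seconds(minutes, seconds)


for year, (m, s) in RESULTS.items():
    headroom = margin_to_target(m, s)
    status = "met" if headroom >= 0 else "not met"
    print(f"{year}: {m} min {s:02d} s, {headroom} s under target ({status})")
```

On these figures the target was met in every year, with the margin narrowing to two seconds in 2017–18.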

Previous scrutiny

1.5 The ANAO previously reviewed Centrelink telephone services in Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink Telephone Services, tabled in May 2015. The ANAO concluded that Human Services had established a soundly based quality assurance framework for Centrelink telephone services, however this should be extended to all relevant staff to improve the overall level of assurance. The ANAO further concluded that the Department’s target Key Performance Indicator (KPI) relating to Average Speed of Answer does not clearly indicate what service standard customers can expect due to the distribution of actual wait times around the ‘average’. The audit recommended that Human Services:

- establish a pathway and timetable for the implementation of a coordinated channel strategy to help deliver improved services across all customer channels and a more coordinated approach to the management of call wait times;

- apply the Quality Call Listening (QCL) framework to all staff answering telephone calls, and review the potential impact of gaps in the implementation of the QCL; and

- review Key Performance Indicators for the Centrelink telephony channel to clarify the service standards that customers can expect and better reflect the customer experience.

1.6 Human Services agreed to recommendations one and two and agreed with qualifications to recommendation three, noting that the department estimated that to reduce the KPI to an average speed of answer of 5 minutes, it would require an additional 1000 staff at a cost of over $100 million each and every year.20 Human Services did not address the review of the KPIs in its response to the recommendation.

1.7 Human Services provided updates to its Audit Committee against the implementation of the recommendations. Reporting against recommendations one and two was completed in 2016. The Channel Strategy received in principle endorsement from the Minister in September 2016 and the Quality Call Framework was launched in August 2016 and was progressively implemented. Reporting against recommendation three was completed in September 2018 advising that KPIs and customer satisfaction have been considered as part of the DHS Telephony Review.

1.8 The Joint Committee of Public Accounts and Audit (JCPAA) Report No. 452 Natural Disaster Recovery; Centrelink Telephone Services; and Safer Streets Program considered Auditor-General Report No. 37 of 2014–15 and made a further five recommendations relating to Smart Centres. These recommendations covered:

- increased training to undertake blended processing and telephony tasks in the Smart Centre context (supported by Human Services);

- the implementation of the Welfare Payment Infrastructure Transformation (WPIT)21 and its impact on performance measuring, management and telephony (supported with qualification);

- reviewing internal Key Performance Indicators (KPIs) (supported);

- public reporting on a broader range of KPIs (supported with qualification); and

- more frequent publishing of performance information for Centrelink’s telephone services (not supported).22

1.9 The full JCPAA recommendations can be found at Appendix 2.

Updates to telephone services since the previous audit

1.10 In 2016, Human Services reviewed the department’s telephony operations (DHS Telephony Review). The review identified that customers experience high call wait times; high rates of busy signals (due to call blocking parameters to manage high incoming call volumes); and high rates of calls transferred or left unresolved (25 per cent to 30 per cent). The review made seven recommendations aimed at simplifying telephony operations and improving performance measurement.

1.11 In early 2018, Human Services initiated the Telephony Optimisation Programme (TOP) to action the recommendations made in the review. The TOP consists of a portfolio of 16 projects operating across four tranches intended for full implementation by June 2020.23

1.12 In October 2017, Human Services engaged an external provider as part of a pilot program to provide an additional capacity of 250 staff for telephony services. The two-year contract is worth $53 million.

Rationale for undertaking the audit

1.13 This audit is to follow up on recommendations made in the Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink telephone services. The JCPAA has shown interest in performance reporting relating to Centrelink telephony, specifically recommending more complete and publicly available data on the performance of these services. The Community Affairs Legislation Senate Committee also maintains ongoing interest in call wait times and performance reporting. There is regular media interest in the call wait times experienced by Centrelink customers.

Audit approach

Audit objective, criteria and scope

1.14 The objective of the audit was to examine the extent to which Human Services has implemented the recommendations made by the ANAO in Auditor-General Report No. 37 of 2014–15 Management of Smart Centres’ Centrelink Telephone Services; as well as Human Services’ performance against call wait time and call blocking metrics.

1.15 To form a conclusion against the audit objective, the ANAO adopted the following high level audit criteria:

- Human Services has implemented a channel strategy that effectively supports the transition to digital service delivery and the management of call wait times.

- Human Services has implemented effective performance monitoring and reporting arrangements to provide customers with a clear understanding of expected service standards.

- Human Services has implemented an effective quality framework to support the quality and accuracy of Centrelink telephone services.

1.16 The audit did not include an examination of Smart Centres’ processing services, other than the processing that is done as part of the telephone service; or Smart Centres’ Medicare and Child Support telephone services.

1.17 The audit did not directly follow up on the implementation of the JCPAA recommendations. However, the audit considered the intent of the JCPAA recommendations and their relationship with the original ANAO recommendations.

Audit methodology

1.18 The major audit tasks included:

- analysis of Human Services’ performance data and performance reporting methodologies;

- an examination of the department’s files and documentation relating to the management of Smart Centres’ Centrelink telephony services;

- site visits to a number of Smart Centres to observe operations; and

- interviews with Human Services managers and staff involved in the management of Smart Centres and the development of the channel strategy, both in Canberra and across the national network.

1.19 The audit was conducted in accordance with ANAO auditing standards, at a cost to the ANAO of approximately $372,136. The team members for this audit were Jacqueline Hedditch, Tara Rutter, Barbara Das, Steven Favell, Emily Drown and David Brunoro.

2. Managing call wait times and supporting digital service delivery

Areas examined

This chapter examines the extent to which Human Services has implemented a channel strategy, in accordance with Recommendation One of Auditor-General Report No. 37 of 2014–15, and Human Services’ mechanisms to monitor the transition to digital service delivery and initiatives to increase call capacity.

Conclusion

In response to the recommendation in the previous Auditor-General report, Human Services has developed two channel strategies. The first was not effectively implemented. Human Services is currently developing mechanisms to support the implementation of the revised strategy. The transition to digital service delivery and the management of call wait times are supported by individual projects within the department.

Area for improvement

The ANAO has made one recommendation aimed at ensuring implementation plans and monitoring and reporting arrangements are developed and actively used to better assess the effectiveness of the channel strategy.

Has Human Services effectively implemented a coordinated Channel Strategy?

In late 2016 Human Services developed the Channel Strategy 2016–19; however, the strategy was not used to guide decision-making activity across the various channels. A revised channel strategy was endorsed in June 2018 that more clearly articulates initiatives that will be completed and how these link to the strategy’s key objectives of reducing preventable work, increasing digital take up, and improving customer experience and staff engagement. Governance and reporting arrangements have not yet been fully implemented and it is too early to assess the effectiveness of the revised channel strategy.

2.1 The previous Auditor-General report found that, at the time, Human Services did not have a detailed channel strategy. The ANAO recommended that, to help deliver improved services across all customer channels and a more coordinated approach to the management of call wait times, Human Services establish a pathway and timetable for the implementation of a coordinated channel strategy.24 Human Services agreed to the recommendation.

2.2 Human Services defines its four distinct customer channels for Centrelink services as:

- in-person — face-to-face communication with local staff;

- by telephone — speaking to staff at Smart Centres, not limited by geographical location;

- digital — accessing information or reminders or submitting claims, details or documents online, via mobile apps or by phone self-service technology; and

- in writing — letters and hard copies of claim documents and information.

Channel Strategy 2016–19

2.3 In late 2016 Human Services finalised the Channel Strategy 2016–19. The strategy was intended to provide a clear path forward to support the shift towards digital service delivery as the primary mode of interaction with customers. It was also intended to outline ‘how staff-assisted channels would support the shift to digital delivery’. The strategy included three broad strategic priorities for service improvements:

- ‘connected services — provide a consistent and integrated experience as customers move across touchpoints;

- efficient services — provide efficient and cost effective ways to deliver services; and

- personalised services — know, connect with and enable customers by providing a personalised experience based on their circumstances.’25

2.4 The Channel Strategy 2016–19 was a high-level document, which identified customer groups, existing channel use, current strengths and inhibitors for each of its channels.

2.5 Box 1 shows future directions outlined within the Channel Strategy 2016–19 for telephony and digital services.

| Box 1: Channel Strategy 2016–19 Human Services’ directions for digital and telephony services |

|

Digital services strategies:

Telephony services strategies:

Collectively, the telephony strategies were to lead to a gradual move towards outbound contact and reduce inbound telephony. |

Source: ANAO analysis of Human Services documentation.

2.6 Human Services did not develop a detailed implementation plan to support the Channel Strategy 2016–19 and the strategy was not used to guide decision-making activity across the various channels in a manner that supported a more coordinated approach to the management of call wait times. For example, a Channel Strategy Alignment Checklist was developed to support prioritisation of projects aligned to the channel strategy, however Human Services advised the ANAO that this checklist was not used.

2.7 The Channel Strategy 2016–19 required that progress reports against the strategy be provided quarterly to the department’s Customer Committee and annually to its Executive Committee.26 No dedicated progress reporting was undertaken against the Channel Strategy 2016–19, however Human Services did report against a number of individual projects and initiatives that supported the channel strategy objectives.27 Human Services advised the ANAO that overarching channel strategy reporting was not undertaken due to the October 2017 decision to revise the channel strategy.

Revised channel strategy (2018–onwards)

2.8 A revised Channel Strategy was endorsed by the Secretary in June 2018. Human Services advised the ANAO that the revised strategy was prompted by significant changes across the organisation and in other major programs such as the Welfare Payment Infrastructure Transformation (WPIT), which is now known as Delivery Modernisation.

2.9 The revised strategy is intended to provide a greater focus on outcomes and more clearly identify specific initiatives required to progress the strategy. Human Services’ Corporate Plan 2018–19[28] states that the Channel Strategy provides a roadmap for delivering more connected, efficient and personalised services through a multi-channel approach to service delivery.

2.10 The revised strategy is in two documents, the Channel Strategy Narrative and the Initiatives Roadmap.

- The Channel Strategy Narrative positions the strategy amongst Human Services’ other significant ongoing strategic developments29, identifies the main levers for improving service outcomes and presents the key objectives, which are:

  - reduce preventable work30 to optimise staff-assisted touchpoints;

  - increase digital uptake to support the department’s transformation agenda; and

  - improve customer experience and staff engagement.

- The Initiatives Roadmap identifies a large range of service improvement initiatives that support the achievement of each objective. The initiatives are categorised according to whether they contribute to developing capability, are new, or a refinement of existing service approaches.

2.11 The ANAO’s comparison of the Channel Strategy 2016–19 with the revised Channel Strategy found that the revised Channel Strategy documents more clearly articulate the initiatives that will be completed under the strategy, such as individual projects within the telephony channel, and so improve the integration of projects and initiatives.

2.12 In July 2018, Human Services informed the ANAO that it was developing entity level governance and reporting arrangements for its new Channel Strategy Narrative and Initiatives Roadmap. Between July 2018 and December 2018, monthly reporting was provided to the Service Delivery Operations Group Executive (SES Band 3). In December 2018, a decision was made to utilise existing governance arrangements to provide entity level oversight of the Channel Strategy. This will include reporting to the Enterprise Transformation Committee and monthly reporting to the Executive Committee.

2.13 Human Services advised the ANAO in February 2019 that it has commenced development of a benefit assessment and prioritisation approach for the revised Channel Strategy to determine which initiatives will proceed.

Recommendation no. 1

2.14 The ANAO recommends that Human Services further develop implementation plans and monitoring and reporting arrangements to provide its executive with a holistic view of the effectiveness of the Channel Strategy to support the transition to digital service delivery and assist with the management of call wait times.

Department of Human Services response: Agreed.

2.15 In addition to the governance and reporting arrangements that have been in place throughout the development and since the endorsement of the Channel Strategy, from January 2019, monthly reports on the Channel Strategy will be provided to the Executive Committee. The Executive Committee provides advice and assurance to the Secretary on major transformation activities to ensure coordinated delivery of the Department’s transformation ambition. Reporting to Executive Committee will include progress against implementation milestones for the key projects under the Channel Strategy programme. Oversight of progress against detailed implementation plans will occur at project level.

Does Human Services have appropriate mechanisms in place to monitor and report on the effectiveness of its transition to digital service delivery?

Human Services does not have appropriate mechanisms in place to monitor and report on the effectiveness of its transition to digital services, with only one high-level performance measure in place. The measure, which is the percentage increase in the total number of interactions conducted via digital channels compared to the previous year, does not examine the effectiveness, intended outcomes or the impact on other channels of the shift to digital services across the department. Human Services has identified a need to improve indicators in this area and is working to address these limitations.

2.16 In 2015–16, Human Services implemented the current Key Performance Indicator (KPI) to monitor its progress towards increasing Centrelink customer self-service through digital channels. The KPI tracks the number of Centrelink customer interactions completed in the year through digital tools31, with a target of increasing these by five per cent or more compared to the previous year.

2.17 Internally, the KPI is reported against quarterly to the Executive Committee. Externally, the KPI is reported against annually within the department’s Annual Performance Statements.

2.18 Table 2.1 outlines Human Services’ performance against its digital services KPI.

Table 2.1: Centrelink performance against the digital service KPI for the period 2015–16 to 2017–18

| | 2015–16 | 2016–17 | 2017–18 |
|---|---|---|---|
| Number of interactions completed via digital channels | 141.2 million | 148.6 million (a) | 149.8 million |
| Percentage increase from previous years | 21.3 | 5.3 | 6.6 |
| Target | ≥5% | ≥5% | ≥5% |
| Result | Met | Met | Met |

Note a: The number of interactions included in the calculation varies between financial years. This reflects changes in digital service offerings and in how the customer is required to interact with the platform, which result in a varied number of total interactions.

For calculating the 2017–18 result, the number of interactions in 2016–17 was revised to 140.5 million. The methodology for calculation of interactions remains the same.

Source: Human Services’ Annual Reports (2015–16 to 2017–18) and Human Services documentation.

2.19 As shown in Table 2.1, Human Services has met its target for the last three years. The number of interactions Human Services includes in the calculation for the achievement of digital service level standards is updated each year to reflect changes to the way that some information is presented within the digital channels.
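The year-on-year calculation behind Table 2.1 can be sketched as follows. This is a minimal illustration rather than Human Services' own method: the function name is hypothetical, and the inputs are the rounded figures published in the table and its note, so results may differ slightly from the reported percentages.

```python
def percentage_increase(previous: float, current: float) -> float:
    """Return the percentage change from previous to current."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) / previous * 100.0


# Figures in millions of interactions, taken from Table 2.1 and Note a.
reported_2016_17 = 148.6  # as first reported for 2016-17
revised_2016_17 = 140.5   # revised baseline used for the 2017-18 result
result_2017_18 = 149.8

TARGET = 5.0  # KPI target: an increase of five per cent or more

against_revised = percentage_increase(revised_2016_17, result_2017_18)
against_reported = percentage_increase(reported_2016_17, result_2017_18)

print(f"Against revised baseline: {against_revised:.1f}% "
      f"(target met: {against_revised >= TARGET})")
print(f"Against originally reported figure: {against_reported:.1f}% "
      f"(target met: {against_reported >= TARGET})")
```

With these inputs the revised baseline gives about 6.6 per cent, in line with the table, while the originally reported 2016–17 figure would give under one per cent. The choice of baseline therefore affects whether the target appears to be met.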

2.20 Human Services has identified limitations with its digital service KPI. In particular, it does not provide information about:

- customer behaviours in and across channels, such as:

  - quality of the digital experience (is it simple, intuitive, provides end-to-end service);

  - drop-out points in digital, including cause and effect; and

  - what prevented use of digital (what is getting in the way of digital adoption);

- quality of and return on investment from new or improved digital services and products; and

- staff attitudes to digital services including capability, confidence and advocacy.

2.21 Accordingly, increased take-up under this performance measure does not necessarily represent a reduced need for staff resources, improved efficiency or an improved customer experience and therefore does not provide insights into the impact of the digital transition target on call wait times.

2.22 The annual variation in the calculation for the achievement of digital service level standards and the above limitations are not clearly articulated in the Annual Performance Statements.

2.23 In July 2018, the department’s Enterprise Transformation Committee noted that work was being undertaken to address these limitations by developing and implementing new measures of the suitability and effectiveness of digital service delivery and investigating options for a new digital business model. Human Services expects to commence implementation of a new suite of digital measures from July 2019.

Does Human Services have an evaluation strategy in place to assess the extent to which outcomes have been achieved through the changes to its telephony services?

Human Services has developed a strategy to assess the benefits of the changes to its telephony services made under its Telephony Optimisation Programme. Although the strategy details the management, metrics, targets and reporting for each sub-project, the current benefits realisation approach does not clearly articulate how each individual sub-project contributes to the Programme’s overall objectives and key performance measures. This potentially hinders prioritisation of future telephony improvement activities. The Telephony Optimisation Programme remains underway and Human Services intends to assess its impact on the management of call wait times and increased call capacity at the end of 2018 and again in mid-2019.

Human Services has undertaken an evaluation of its pilot program to test whether use of an external call centre provider was feasible to increase call capacity. Additional resources have been allocated following the evaluation, which found the model was effective and comparable to the department’s telephony service delivery workforce. The direct impact this approach has had on the number of busy signals and call capacity is unclear due to a range of other factors influencing these outcomes, such as seasonal variations, other policy changes, and impacts from the Telephony Optimisation Programme projects.

2.24 While the Channel Strategy sets out the desire to shift to digital as the primary channel for Centrelink customers, telephony remains an important and heavily utilised channel option. Human Services continues to invest in improvements to the telephony channel and increase resourcing. As such, it is important that Human Services evaluate key initiatives to understand their effectiveness as well as their impact on, and relationship to, the transition to digital services.

Telephony Optimisation Programme

2.25 In 2017, Human Services reviewed the department’s telephony operations (the Telephony Review). The review identified a number of telephony issues experienced by customers including: high call wait times; high rates of busy signals (due to call blocking parameters to manage high incoming call volumes)32; and high rates of calls transferred or left unresolved (25 per cent to 30 per cent). The review made the following recommendations aimed at simplifying telephony operations and improving performance measurement:

- dramatically simplify the operation;

- plan and manage to true workloads;

- establish the right objectives and metrics;

- build an aligned and engaged organisation;

- strengthen the provision, support and use of enabling technologies;

- fundamentally improve how new work is incorporated; and

- effectively communicate performance.

2.26 In early 2018, Human Services initiated the Telephony Optimisation Programme (TOP) to action the review recommendations.33 The TOP consists of a portfolio of 16 projects34 operating across four tranches intended for full implementation by June 2020.35 As at January 2019, the majority of initiatives for the TOP were in progress. (See Appendix 4 for further detail.)

Benefits realisation

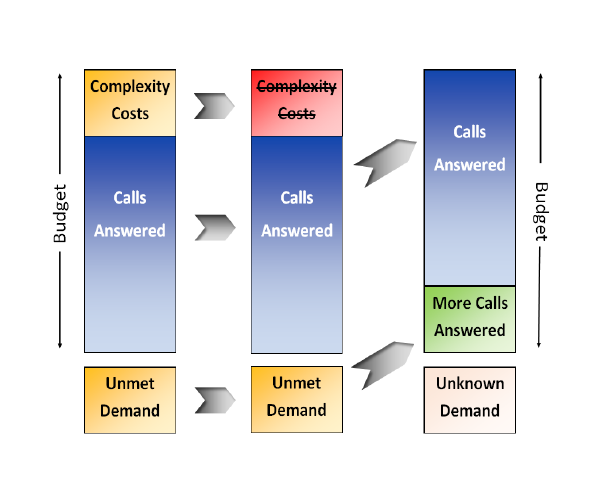

2.27 The TOP is intended to deliver increased call capacity, achieved via a number of interconnected project deliverables. As shown in Figure 2.1, the Programme Management Plan indicates that the TOP “will result in the reduction of complexity overheads36 inherent to the telephony management arrangements, facilitating the receipt of more calls.”

Figure 2.1: Telephony Optimisation Programme — intended outcome

Source: Human Services’ documentation.

2.28 To assess the TOP, Human Services has developed a Benefits Realisation approach, which includes a Benefits Realisation Plan and associated Benefit Profiles. Seven expected benefits have been identified, which align to and replicate the wording of the recommendations from the Telephony Review (outlined in paragraph 2.25). Each benefit profile details management, metrics, targets and reporting activities. The TOP plan outlines the criteria that Human Services will use to measure the overall performance of the programme. The five criteria and their associated key performance measures are:

- improved public narrative — the number of times and how often Human Services telephony performance is negatively reported in the media.

- Programme and Project staff satisfaction — staff indicate an average of three or higher satisfaction ratings against a range of survey questions.

- reporting and monitoring — the Programme meets the reporting and monitoring timeframes in 90 per cent of instances.

- deliverables align with Project requirements — Project deliverables are assessed as meeting Project requirements at each end of tranche review.

- Human Services’ call capacity — Human Services’ call capacity has increased and is trending upwards.

2.29 With the exception of improved public narrative and call capacity, the criteria outlined in the program plan are primarily focussed on reporting against milestone achievement.

2.30 The Benefit Profiles consider individual projects rather than the overall Telephony Optimisation Programme. The Benefit Profiles for each project do not clearly articulate the expected flow-on impacts towards increasing Human Services’ call capacity. For example, one benefit measure is the total percentage increase in the number of customers enrolling for a voiceprint, with a target of 10 per cent in the first year and incremental increases of 5 per cent each year for four years. However, this benefit profile does not consider what effect an increase in voiceprint enrolment may have on the broader telephony operations, or the resulting impact on call capacity.37

2.31 Human Services advised the ANAO that, when the benefit measures are considered in the context of overall telephony performance reporting, it considers it will have sufficient information available to assess the impact of the TOP on call capacity and the staff capacity freed up for reinvestment in improved service levels.

2.32 According to the TOP Plan, Human Services intends to measure the increase in call capacity at the end of Tranche 2 (31 December 2018) and Tranche 3 (30 June 2019). The results for Tranche 2 will be included in the Programme Benefits Realisation Report for Quarter 2 2018–19 which Human Services expects to finalise in late February 2019.

Increased resourcing for telephony

2.33 In response to Recommendation Three of the previous Auditor-General report38, Human Services noted that the department estimated that to reduce the KPI to an average speed of answer of 5 minutes, it would require an additional 1000 staff at a cost of over $100 million each and every year.

2.34 Human Services advised the ANAO that while this response was accurate at the time of the previous audit, it has since shifted to a resourcing model that allows for the allocation of work to staff from both telephony and processing queues. At times, staff may be directed towards processing claims, which reduces the number of calls that can be received. As such, Human Services advised that the level of staff allocated to telephony fluctuates and so there is not a direct relationship between staff numbers and the expected impact on the average speed of answer.

2.35 As at December 2018, there were about 6900 Human Services staff with skill tags relevant for work in Smart Centres. This includes ongoing, non-ongoing and Irregular and Intermittent Employees. These staff may undertake telephony activities, processing activities or a mix of both, depending on their skill tag.

2.36 In October 2017, Human Services engaged an external provider (Serco) as part of a pilot program to provide an additional capacity of 250 staff for telephony services. The purpose of the pilot program was to test a different service delivery approach and increase overall call centre capacity. The two-year contract is worth $53 million. Operations at the Melbourne-based Serco site commenced with Serco staff taking generalist calls.39 In mid-2018, Serco increased to 400 equivalent staff and workers began taking calls for job seeker payments and services.

2.37 Human Services engaged an external provider to undertake an evaluation of the outsourcing arrangements. This evaluation was done in two phases aimed at considering:

- whether the outsourcing arrangement was feasible, particularly as it required external personnel to access Human Services systems (December 2017); and

- how well the arrangement was working (January 2018).

2.38 Part one of the evaluation found that Human Services could work with a commercial supplier to increase overall call centre capacity. Part two found that the outsourced delivery model is effective and comparable to the department’s telephony service delivery workforce.

2.39 In March 2018, Human Services provided the outcomes of this evaluation to the Government seeking approval for implementing expanded outsourcing arrangements. This approach involved expanding operations with Serco as well as engaging additional suppliers of telephony services. Subsequently, in April 2018, the Minister for Human Services announced a further 1000 call centre staff would be employed from an outsourced provider. In May 2018, the Government allocated $50 million to Human Services to further improve service delivery and target call wait times. These funds were not tied to outsourcing arrangements.

2.40 In August 2018, the Government announced a further 1500 call centre staff would be employed through outsourcing arrangements to answer Centrelink telephone calls. The total staff employed under outsourcing arrangements will be 2750, with the final outsourcing arrangements expected to be operational by the end of April 2019.

Impact of increased resourcing on calls answered and call blocking

2.41 There are a number of factors, such as seasonal factors and policy announcements, which influence the overall volume of calls and therefore the incidence of call blocking. These factors also include the range of initiatives implemented under the TOP.

2.42 In August 2018, the Minister for Human Services stated that since their introduction in October 2017 Serco staff had ‘already answered more than two million calls and helped reduce busy signals on Centrelink phone lines by almost 20 per cent’.40

2.43 The ANAO analysed the number of calls that entered the telephone network41 and the incidence of call blocking for the last two financial years. Figure 2.2 shows this analysis.

Figure 2.2: Number of calls that enter the network (successful); busy signals per month and percentage of busy signals as a proportion of all successful calls

Source: ANAO analysis of Human Services’ data.

2.44 Figure 2.2 shows that the number of busy signals fluctuates throughout the year and has continued to fluctuate since the introduction of the outsourcing arrangements in October 2017.

3. Quality and accuracy of Centrelink telephone services

Areas examined

This chapter examines the extent to which Human Services has implemented an effective quality framework to support the quality and accuracy of Centrelink telephone services, in accordance with Recommendation Two of Auditor-General Report No. 37 of 2014–15.

Conclusion

Human Services has effectively applied the department’s Quality Framework to Centrelink Smart Centres’ telephony staff to support the quality and accuracy of telephone services.

Areas for improvement

The ANAO suggests that Human Services progress work to implement a risk-based approach to determining the number of quality call evaluations required per Service Officer, under the Quality Call Framework, to direct resources at Service Officers at a higher risk of making errors.

The ANAO also suggests that Human Services implement calibration and Aim for Accuracy exercises more consistently across all sites and programmes.

Are quality assurance activities undertaken in accordance with the department’s quality framework?

Quality assurance activities for Centrelink Smart Centres’ telephony services are undertaken in accordance with the Human Services Quality Framework.

3.1 Human Services implemented its Quality Framework (the Framework) in September 2013. Entity-wide application of the Framework was examined in greater detail in Auditor-General Report No. 10 of 2018–19 Design and Implementation of the Quality Framework.42 That audit concluded that while the Framework strengthened quality arrangements in service delivery operations (such as the Smart Centres), where it has been comprehensively implemented, there had been lower levels of implementation elsewhere in the department.

3.2 The Framework defines quality as ‘ensuring Government outcomes are achieved as intended and that we are meeting our published service commitments to customers’. The aim of the Framework is to mandate a consistent and integrated approach to delivering quality services.

3.3 Quality Strategy Action Plans are used to identify the tasks and initiatives required to implement the framework elements. The Smart Centres Division Quality Strategy Action Plan (the Plan) provides a structure for each Smart Centre region to consistently monitor and improve quality and continuous improvement activities. The Plan sets out four quality standards, as seen in Box 2, to guide how Smart Centre staff will provide effective and high quality services. A set of performance indicators has been developed to determine how each standard will be achieved.

Box 2: Smart Centres quality standards

- Access: Our customers receive a consistent and timely service.

- Accuracy: Customer payments and records accurately reflect customers’ known circumstances.

- Customer solution: Each staff member acts to meet the customer’s presenting needs, including their need to manage self-service.

- Policy intent: Our staff understand and can explain how the relevant policy is intended to operate and will escalate challenging cases.

Source: Human Services documentation.

3.4 The Framework sets out the expectations for high quality service delivery across the department and includes six quality elements: Accountability; Quality Processes; Issues Management; Capability; Culture; and Reporting.

3.5 To achieve the requirements of the Plan and ensure quality assurance mechanisms align to the elements of the Framework, the department revised and implemented the following quality initiatives within Smart Centres:

- redesigned the Quality Call Listening (QCL) program, which was in place during the previous audit, to reflect the introduction of blended work.43

- refined the Quality On Line (QOL) process, which is a decision monitoring and checking system that uses a sampling algorithm to select processing activities for checking.

3.6 The ANAO examined the Quality Call Framework and Quality On Line processes and supporting documentation for alignment and consistency with the six elements of the department’s Quality Framework. The ANAO’s analysis found that quality assurance activities undertaken within Smart Centres are consistent with the six quality elements and undertaken in accordance with the Framework.

Are effective quality assurance mechanisms applied to ensure the consistency of service and information provided to customers?

There are largely effective quality assurance mechanisms in place to support the consistency of service and information provided to customers, except that not all required evaluations are currently completed and calibration activities have not been applied consistently across all sites.

The Quality Call Framework and the Quality On Line processes apply to all staff who provide Centrelink telephone services regardless of staff classification, employment status or work type.

Human Services is actively exploring options to further improve quality processes, such as a pilot currently underway to trial Remote Call Listening evaluations.

Quality Call Framework

3.7 The ANAO’s previous audit found inconsistencies in the application of the (then) Quality Call Listening Framework (QCL). Specifically, it was found that the QCL was not applied to Irregular and Intermittent Employees44; the required number of call evaluations per Service Officer was low; and participation in the calibration exercise45 by quality evaluators was also low.46 The ANAO previously recommended that the department should review these potential gaps in the implementation of the Quality Call Listening Framework and apply the Quality Call Listening Framework to all staff answering telephone calls.

3.8 In 2017 Human Services implemented the Quality Call Framework (QCF) to replace the previous Quality Call Listening Framework. The QCF applies to a number of business groups within the department including Health and Aged Care; Integrity and Information; and Smart Centres within Service Delivery Operations. Within Centrelink Smart Centres, the QCF applies to all telephony staff, including Irregular and Intermittent Employees and those Service Officers who engage in outbound calls who were previously excluded from the Quality Call Listening framework.

3.9 The QCF is used to measure the quality of the interaction between departmental staff and customers. The QCF has two main measures:

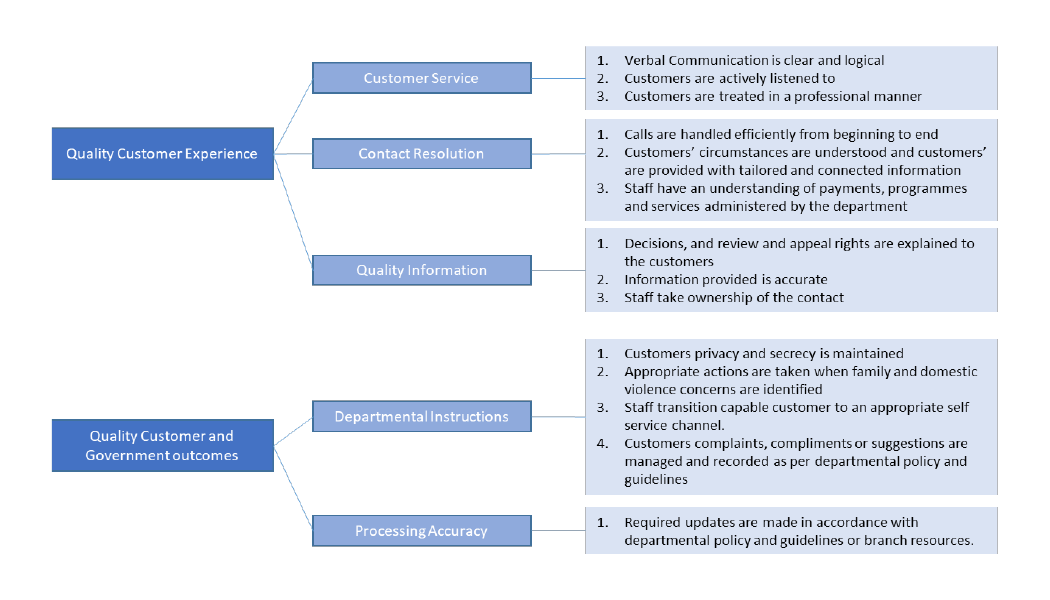

- ‘Quality Customer Experience’ which measures staff interaction with customers; and

- ‘Quality Customer and Government Outcomes’ which measures adherence to procedure and processes.

3.10 The two measures are further broken down into five standards, and each standard has a series of elements against which calls are assessed to define aspects of a quality call (see Figure 3.1).

Figure 3.1: Quality call standards

Source: ANAO analysis of Human Services documentation.

3.11 Human Services has developed a departmental Quality Call evaluation form that is intended to be used consistently by all Quality Checkers. Call quality is assessed as either ‘met’ or ‘not met’ against the above five standards. Failure to meet any one of the five standards results in the call being evaluated as ‘not met’. There is no scoring guide and checkers use their individual judgement to assess calls. This has led to some inconsistencies in the interpretation and use of the standards to assess calls for quality across different Smart Centres.
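The all-or-nothing rule described above can be illustrated with a short sketch. The standard names below are placeholders (the five standards themselves appear in Figure 3.1), and the function is hypothetical rather than part of any Human Services system.

```python
from typing import Mapping

# Placeholder labels only; the actual five standards are shown in Figure 3.1.
STANDARDS = ("standard_1", "standard_2", "standard_3", "standard_4", "standard_5")


def evaluate_call(assessments: Mapping[str, bool]) -> str:
    """Return 'met' only if every one of the five standards was met."""
    missing = [s for s in STANDARDS if s not in assessments]
    if missing:
        raise ValueError(f"missing assessments for: {missing}")
    return "met" if all(assessments[s] for s in STANDARDS) else "not met"


all_met = {s: True for s in STANDARDS}
one_failed = {**all_met, "standard_3": False}

print(evaluate_call(all_met))     # met
print(evaluate_call(one_failed))  # not met
```

Because a single failed standard fails the whole call, differences in how individual checkers judge any one standard flow directly through to the overall result.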

Application of the Quality Call Framework

3.12 Service Officers employed on an ongoing basis are required to have nine calls evaluated per quarter. The Operational Blueprint states that the number of calls evaluated can be varied with approval from the National Manager to no fewer than three calls per quarter, per Service Officer. The sample size for Irregular and Intermittent Employees (IIEs) is reduced to five calls per quarter. In October 2018, changes were made to policy to allow National Managers greater autonomy in applying variations to the number of required evaluations per Service Officer.

3.13 At a national level, six types of variations were approved for Smart Centres in Quarter 4 2017–18 and Quarter 1 2018–19. Variations include reductions for IIEs and for partial unavailability due to leave or scheduling to other tasks, and increases to support learning and development. Despite variations being approved at a national level, there is no visibility of approved variations across Smart Centre sites. As such, both Human Services and the ANAO have identified an ongoing risk relating to adherence to variations: either too few or too many evaluations may be completed for a Smart Centres Service Officer. Smart Centres recently introduced a variation workbook, which is provided to managers at an operational level to give greater and more timely access to centralised variations data.

3.14 Table 3.1 shows that across the three quarters from October 2017 to June 2018 less than 100 per cent of required evaluations were completed. Team leaders interviewed advised the ANAO that they did not always manage to complete call evaluations due to competing priorities.

Table 3.1: Number of Quality Call Framework evaluations completed for Smart Centres

| Quarter | Required evaluations | Completed evaluations | Percentage of required evaluations completed |
|---|---|---|---|
| Quarter 2 2017/18 | 37,137 | 34,945 | 94.1 |
| Quarter 3 2017/18 | 36,946 | 36,442 | 98.6 |
| Quarter 4 2017/18 | 40,475 | 38,480 | 95.1 |

Source: Human Services internal document.
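The percentages in Table 3.1 follow directly from the required and completed counts. The sketch below reproduces them and also derives the number of outstanding evaluations per quarter; the function and variable names are illustrative only.

```python
# Required and completed evaluations per quarter, from Table 3.1.
evaluations = {
    "Quarter 2 2017/18": (37_137, 34_945),
    "Quarter 3 2017/18": (36_946, 36_442),
    "Quarter 4 2017/18": (40_475, 38_480),
}


def completion_rate(required: int, completed: int) -> float:
    """Percentage of required evaluations that were completed."""
    if required <= 0:
        raise ValueError("required must be positive")
    return completed / required * 100.0


rates = {q: completion_rate(req, done) for q, (req, done) in evaluations.items()}
shortfalls = {q: req - done for q, (req, done) in evaluations.items()}

for quarter in evaluations:
    print(f"{quarter}: {rates[quarter]:.1f}% completed, "
          f"{shortfalls[quarter]:,} outstanding")
```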

3.15 Quality Checkers assess calls through one of two methods:

- side-by-side listening (calls are assessed in real time through use of dual head sets); or

- via call recording.

3.16 Call recording was introduced at the end of 2017 and has been implemented at all sites where the required telecommunications infrastructure is available. Call recording allows Quality Checkers to listen to calls at a later time and to pause, replay and flag sections of the call. Human Services is currently piloting a Remote Call Listening approach to evaluations. Under this pilot, calls are evaluated by a Quality Checker in a different geographical location and with no direct relationship to the Service Officer. Outcomes from phase one of the Remote Call Listening pilot suggest that using call recording improves Quality Checkers’ ability to apply the policy, practices and procedures. During audit fieldwork, team leaders indicated that Remote Call Listening leads to the identification of more errors and greater consistency in the assessment of staff performance. Smart Centres’ Performance Reports support this feedback, indicating that recorded calls identify more errors than side-by-side listening.

Calibration process

3.17 The QCF includes a calibration process to ensure quality call evaluations are conducted consistently across Quality Checkers. During the calibration exercise, all accredited Quality Checkers listen to the same imitation call and assess it against the QCF criteria. The calibration exercise results show the level of variation in the application of quality call standards and are intended to support business teams in facilitating continuous improvement activities that provide more consistent outcomes. Each business team is required to undertake a calibration exercise every six months, with each Quality Checker required to participate in at least one calibration exercise annually. Human Services has not fully implemented calibration processes in the Smart Centres Division in line with these requirements: only one Divisional calibration activity, with a participation rate of 38 per cent, has been conducted since the QCF was implemented in 2017. Some branches within Smart Centres Division conducted additional analysis of results from this calibration activity.

Quality On Line

3.18 Quality On Line (QOL) is the decision monitoring and checking system applied to transactions completed within Centrelink Service Delivery. For telephony, QOL will assess processed work activities that affect customer payments for accuracy before they are completed. This ensures that customers receive the correct payments. Unlike the QCF there is no set number of checks as the number of telephony transactions subject to QOL will vary depending on the proficiency of staff as defined under the QOL sampling policy (see Table 3.2 below).

3.19 QOL Checkers47 review a sample of processing activities completed by Service Officers, which may be as a result of a call, to identify potential errors and return errors with feedback to the Service Officer for correction. Errors identified through QOL are defined as either critical or non-critical errors.48 Critical errors are ‘returned’ to the Service Officer for correction. Non-critical errors are ‘released’ with feedback to the Service Officer. Non-critical errors may require further work or improvements for next time; however, they do not impact on delivery of the correct outcome for the customer.

Application of QOL checking

3.20 Human Services applies a risk-based approach to QOL checking. As shown in Table 3.2, a Service Officer’s proficiency level is set based on their experience in processing the type of work as well as their correctness rate. There are three proficiency levels for staff: learner, intermediate and proficient.

Table 3.2: Quality On Line proficiency levels and sample rates for Service Officers

| Proficiency level | Description | Sample rate of activities (%) | Minimal correctness rate (%) |
|---|---|---|---|
| Learner | New staff or existing staff who have moved to processing work in a new benefit, system, or have little experience in the work type | 100 | <85 |
| Intermediate | Staff who have achieved a correctness rate of 85%–94.9% in the relevant system(s) and benefit(s) | 25 | 85–94.9 |
| Proficient | Staff who have achieved a 95% correctness rate in the relevant system(s) and benefit(s) | 2 | 95 |

Source: Human Services’ National Quality On Line Standards.
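The relationship between proficiency level and sample rate in Table 3.2 can be sketched as a simple lookup. This is a simplification under stated assumptions: the actual QOL sampling algorithm is not described in this report, the function names are hypothetical, rates between 94.9 and 95 per cent are treated as intermediate, and the figure of 200 activities is invented purely for illustration.

```python
def proficiency_level(correctness_rate: float, new_to_work_type: bool = False) -> str:
    """Classify a Service Officer using the thresholds in Table 3.2."""
    if new_to_work_type or correctness_rate < 85.0:
        return "learner"
    if correctness_rate < 95.0:
        return "intermediate"
    return "proficient"


# Percentage of activities sampled for checking at each level (Table 3.2).
SAMPLE_RATE = {"learner": 100, "intermediate": 25, "proficient": 2}


def expected_checks(activities: int, level: str) -> float:
    """Expected number of activities selected for checking."""
    return activities * SAMPLE_RATE[level] / 100


# Hypothetical officers, each processing 200 activities.
for rate, is_new in [(80.0, False), (90.0, False), (97.0, False), (97.0, True)]:
    level = proficiency_level(rate, is_new)
    print(f"{rate}% (new: {is_new}) -> {level}, "
          f"about {expected_checks(200, level):.0f} checks")
```

The steep drop from 100 per cent to 2 per cent sampling explains why the share of activities checked varies so widely between employment types with different levels of experience.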

3.21 A number of automated QOL reports are available. Results can be selected at an officer level or for a Smart Centre or region. Each Service Officer has access to view their own work and run reports for themselves. National QOL reports are generated each month and provide a high-level report of results for all QOL assessments completed. Results from these reports are also included in the Smart Centres Performance Reports, which include a range of performance and quality results over a settlement period.49

3.22 Table 3.3 outlines the QOL results for Smart Centres over 2016–17 and 2017–18.

Table 3.3: Smart Centres’ Centrelink Quality On Line results

| Year | Employment type | Number of activities processed | Activities QOL checked | Percentage of activities telephony QOL checked (%) | QOL correctness results (%) |
|---|---|---|---|---|---|
| 2016–17 | Irregular and Intermittent Employee | 1,680,949 | 491,603 | 29 | 94 |
| | Non-ongoing | 283,496 | 153,347 | 54 | 90 |
| | Ongoing | 7,312,071 | 735,673 | 10 | 95 |
| 2017–18 | Contractor | 13,712 | 13,421 | 98 | 92 |
| | Irregular and Intermittent Employee | 1,158,356 | 282,459 | 24 | 95 |
| | Non-ongoing | 151,114 | 109,999 | 73 | 94 |
| | Ongoing | 7,190,261 | 883,042 | 12 | 96 |

Source: ANAO analysis of Human Services’ data.

3.23 Human Services’ target for QOL is 95 per cent. For 2016–17 the target was not met for Irregular and Intermittent Employees or non-ongoing staff. Results improved in 2017–18, with only non-ongoing employees (94 per cent) and contractors (92 per cent) falling short of the target.
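As a cross-check, the comparison against the 95 per cent target can be reproduced from the Table 3.3 figures. The data structure and names below are illustrative assumptions; only the numbers come from the table.

```python
QOL_TARGET = 95  # per cent, as stated in paragraph 3.23

# (year, employment type): (activities processed, activities checked, correctness %)
results = {
    ("2016-17", "Irregular and Intermittent Employee"): (1_680_949, 491_603, 94),
    ("2016-17", "Non-ongoing"): (283_496, 153_347, 90),
    ("2016-17", "Ongoing"): (7_312_071, 735_673, 95),
    ("2017-18", "Contractor"): (13_712, 13_421, 92),
    ("2017-18", "Irregular and Intermittent Employee"): (1_158_356, 282_459, 95),
    ("2017-18", "Non-ongoing"): (151_114, 109_999, 94),
    ("2017-18", "Ongoing"): (7_190_261, 883_042, 96),
}

# Share of processed activities that were QOL checked, rounded to whole per cent.
checked_pct = {
    key: round(checked / processed * 100)
    for key, (processed, checked, _) in results.items()
}

# Groups whose correctness result fell below the target.
below_target = [
    key for key, (_, _, correctness) in results.items() if correctness < QOL_TARGET
]

for year, employment_type in below_target:
    print(f"{year}: {employment_type} below target")
```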

Aim for Accuracy

3.24 Human Services undertakes processes to ensure the integrity and reliability of quality checking activities completed through QOL. Since the beginning of 2017, Human Services has been deploying the Aim for Accuracy tool across programs (including Age Pension and Family Tax Benefit); the tool will replace the previous ‘Check the Checker’ process that was in place at the time of the previous audit. As Aim for Accuracy is still to be implemented across all programs, there is limited reporting currently available. Initial reporting of Aim for Accuracy within the Family Tax Benefit payment indicates that 26 per cent of activities were incorrectly returned or passed but not returned during initial quality checking processes, demonstrating that the process is identifying continuous improvement opportunities as intended. Given the high rate of non-compliant results for this activity, there is scope for more targeted recommendations and more consistent deployment of the Aim for Accuracy process across all programmes.

3.25 Human Services’ initial recommendations arising from the first Aim for Accuracy results include increasing awareness of the errors identified by QOL checkers and ensuring activities are fully checked and feedback is complete. Recommendations are addressed at the operational level and have not been escalated to the strategic level.

Transition to Quality Management Application

3.26 Human Services advised the ANAO that the department is currently replacing the Quality On Line system for Centrelink processed activities. As programmes transfer to the new Process Direct system50, quality checking will occur using a new software tool called the Quality Management Application (QMA).51

3.27 Using the QMA system, errors are not returned to the accountable Service Officer for correction. Rather, errors are corrected by Quality Management Officers (QMO) during the checking process. However, feedback is provided to the Service Officer following the identification of an error. As errors are corrected by QMOs at the time of identification, a proposed benefit of the QMA system is that it will reduce customer wait times for claims to be processed. As Service Officers are neither required nor given the opportunity to correct their previous mistakes, there is a potential risk that they may continue to make similar errors. The ANAO considers it is important that Human Services continue to ensure Service Officers receive appropriate feedback and training, informed by QMA results, to minimise repeated errors and support continuous improvement.

Are the results of quality assurance activities monitored and analysed to inform continuous improvement?

Monitoring and analysis of quality assurance activities occurs regularly within Smart Centres and at the strategic level to inform continuous improvement activities.

Service Officer coaching and improvement

3.28 Quality results are used at an individual Service Officer level to support performance and quality improvements. Coaching and development opportunities vary between staff who are ongoing, non-ongoing and Irregular and Intermittent Employees. Ongoing and non-ongoing staff are provided with ten hours of learning and development time per settlement period; however, there is no formal coaching scheduled for contractors or Irregular and Intermittent Employees. Individual Smart Centres make provisions to support Irregular and Intermittent Employees with coaching as required, but this varies between sites and sometimes between teams within the same site. Interviews with staff and site leadership indicated a broad range of capabilities across the team leader cohort in terms of coaching and development of staff. This variation presents a potential risk that the coaching and development requirements of Irregular and Intermittent Employees are not adequately addressed.

3.29 In late 2017, as part of the Telephony Optimisation Programme, Human Services undertook the Queue Rationalisation Project.52 One goal of the Queue Rationalisation Project was to reduce the number of main business lines and increase end-to-end servicing for customers. This resulted in changes to the required capabilities and expectations of all Service Officers, including Irregular and Intermittent Employees, answering telephone calls. Where previously Service Officers were only trained for particular types of queries relating to their business line, they are now expected to make all attempts to fully resolve a customer’s query. While Human Services advised that Irregular and Intermittent Employees primarily work on less complex payment lines, they are still required to meet these increased expectations, although without formal coaching they may have fewer opportunities to consolidate skills.

3.30 Human Services has not established a performance target for quality call evaluations across all groups of employees. In February 2018, Human Services implemented a 95 per cent target for all quality activities for Irregular and Intermittent Employees. As at November 2018, this target has not yet been implemented for all employee types. The absence of a consistent target may have implications for identifying and delivering staff training, as it relies on the discretion of individual Smart Centres or team leaders in determining when a Service Officer requires additional support or training.

Strategic monitoring

3.31 Human Services has several groups in place to provide strategic monitoring of quality issues. At a departmental level, a Quality Council was implemented in March 2016. The Terms of Reference for the Quality Council state that its broad role is to ensure engagement in quality activity across products and services at operational levels. The Quality Council has the relevant authority to direct programs of work to address issues affecting quality and to provide information and advice to other branches in the department on the management of matters regarding quality as required. The Quality Council consists of 15 National Managers from across the department and meets every two months.53

3.32 In 2017, Human Services introduced a Productivity and Quality (P&Q) Manager role into each Smart Centre to support the implementation of a consistent approach to managing performance. P&Q Managers meet monthly to discuss performance, productivity and quality in the context of development and continuous improvement opportunities within the Smart Centres network. P&Q Managers also meet regularly with the leadership teams within Smart Centres.

3.33 Quarterly QCF reporting identifies the top five most common errors for Smart Centres. Figure 3.2 shows the most common errors identified through call evaluations since the implementation of the QCF in the first quarter of 2017–18. The five most common element error categories are Digital Streaming; Call Management; Complete Solution; Privacy and Secrecy; and Information Accuracy.54

Figure 3.2: Top five element errors by count for the period July 2017 to July 2018

Source: ANAO developed from Human Services’ data (Quarterly Framework Report Dashboard).

3.34 The ANAO’s review of Quality Council minutes identified that the top element errors are considered by the Quality Council. Consistent with the role and authority set out in the Quality Council Terms of Reference, action items are identified and assigned to responsible officers for resolution. Some items have been resolved while others remain in progress.

4. Performance monitoring and reporting arrangements

Areas examined

This chapter examines the extent to which Human Services has reviewed and implemented effective performance monitoring and reporting arrangements to provide customers with a clear understanding of expected service standards, in accordance with Recommendation Three of Auditor-General Report No. 37 of 2014–15.

Conclusion

Human Services’ telephony program has appropriate data and largely effective internal performance reporting for management purposes. External reporting does not provide a clear understanding of the overall customer experience.

Area for improvement

The ANAO has made one recommendation aimed at Human Services finalising its review of Key Performance Indicators and implementing updated external performance metrics.

Does Human Services collect appropriate telephone services performance data for internal management?

Human Services collects appropriate performance data for internal operational management of its telephony services. Performance information is regularly reported to Smart Centre management and used to identify local performance trends, adjust resource allocation and consider staff development needs. Human Services’ Executive receives performance reporting to inform monitoring against call wait times and call blocking to support achievement of the external Average Speed of Answer Key Performance Indicator. Reporting to the Executive does not provide full insight into the overall customer experience, such as the time spent waiting before customers abandon calls or the number of calls answered within specified timeframes. This information would support Human Services to continue improvements in the telephony channel and the transition to digital services.

4.1 Human Services uses a range of performance reports at the national and local Smart Centre level to help manage workloads and drive individual service officer performance.55 These reports are used regularly and form a significant component of operational management.

4.2 The Channel Operations Facility56 maintains a real time view of a number of performance metrics across the department to monitor the impact of business changes and service interruptions in all channels (telephony, digital services and face-to-face) and to all Human Services programs. The Channel Operations Facility provides real time management of Smart Centres including workforce and demand management and analysis with the aim of ensuring telephony and processing services meet agreed targets. For example, in August 2018 the Channel Operations Facility redirected Service Officers from processing to inbound telephony to support high call demand resulting from a drought relief funding announcement.

4.3 Individual Smart Centre leadership teams use performance reports to improve site and individual performance. Some Smart Centres also develop their own tailored performance reports to assist in these activities.

4.4 As a result of the recent introduction of call recording, Human Services is now able to examine call types and demand drivers, such as the call demand generated by customers with queries about letters they have received.

Understanding the customer experience

4.5 Human Services’ telephony service provider57 collects data to inform performance monitoring. The Workload and Performance Information Branch within Human Services is responsible for extracting, collating, cleansing, analysing, calculating and reporting on performance information including the Average Speed of Answer KPI. There are a number of limitations relating to the data that is received by Human Services and the way that this data is reported on.

4.6 Human Services does not receive or store individual call level data for analysis and reporting. Human Services receives the majority of its data aggregated in 15-minute blocks. Human Services advised the ANAO that it receives data in an aggregated format for two main reasons. Firstly, aggregating key data tables in 15-minute blocks is consistent with and supports workload management and scheduling. Secondly, the amount of data Human Services is able to receive from the telephony service provider is limited due to Human Services’ data storage capacity. Human Services has advised the ANAO that it is considering options to expand its data storage capacity and allow for receipt of more detailed information, such as moving to a ‘data lake environment’58; however, this work has not yet been formalised.

4.7 Although Human Services does not receive or store individual call data, it does have access to other aggregated information that is used for performance and other ad hoc analysis to understand some aspects of customer services. Human Services also has access to disaggregated data that could be used to further understand and report on the customer experience; however, this data is not used in regular reporting. This disaggregated data includes time spent waiting before a customer abandons a call, and the proportion of all wait time that relates to abandoned calls. It also includes the number of calls answered within specified timeframes59 (for example, the number of calls answered within one to five minutes), which provides a greater level of detail than the average speed of answer.

4.8 Utilising this data would enable Human Services to better understand a wider range of customer experience metrics such as the wait time experienced by customers prior to abandoning a call60 and aggregated service level standards such as the number of calls answered within specified timeframes. Regularly analysing and reporting against a wider range of customer experience metrics may enable Human Services to better target resourcing; further tailor IVR messaging to customers; and provide a clearer linkage back to initiatives under the channel strategy to support the shift to digital delivery as the primary mode of interaction and reduce overall demand on telephony systems.
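As an illustration only, the two additional measures discussed in paragraphs 4.7 and 4.8 (the proportion of wait time that relates to abandoned calls, and the share of calls answered within specified timeframes) could be derived along the following lines. This is a minimal sketch and not Human Services’ reporting method: the function names, the timeframe bands and all figures are hypothetical and are not drawn from Human Services’ data.

```python
from typing import List, Optional, Tuple


def abandoned_wait_share(answered_wait_seconds: float,
                         abandoned_wait_seconds: float) -> Optional[float]:
    """Proportion of all wait time that relates to abandoned calls.

    Returns None when no wait time was recorded at all.
    """
    total = answered_wait_seconds + abandoned_wait_seconds
    if total == 0:
        return None
    return abandoned_wait_seconds / total


def cumulative_answered_share(
        bands: List[Tuple[int, int]]) -> List[Tuple[int, float]]:
    """Cumulative share of answered calls by wait-time band.

    `bands` holds (upper bound in minutes, calls answered in that band),
    sorted by ascending upper bound. The bands are hypothetical.
    """
    total_calls = sum(count for _, count in bands)
    if total_calls == 0:
        return []
    shares = []
    running = 0
    for upper_minutes, count in bands:
        running += count
        shares.append((upper_minutes, running / total_calls))
    return shares


# Hypothetical figures for a single reporting period.
example_share = abandoned_wait_share(answered_wait_seconds=1400,
                                     abandoned_wait_seconds=600)
example_bands = cumulative_answered_share([(5, 20), (15, 30), (30, 50)])
```

Measures of this kind describe the spread of customer outcomes, which a single average cannot show.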

4.9 As detailed further at paragraph 4.30, Human Services is implementing a new internal reporting mechanism in the form of a Balanced Scorecard which includes existing and new metrics.

Has Human Services developed appropriate external performance indicators to measure telephone services performance and which reflect customer experience?

Human Services’ external reporting of telephone service performance is not appropriate as it does not provide a clear understanding of the service a customer can expect. The Average Speed of Answer Key Performance Indicator does not consider the various possible outcomes of a call, such as abandoned calls.

Human Services has undertaken several reviews of its performance metrics; however, it has not yet identified and finalised its preferred set of metrics. Therefore, it has only partially implemented Recommendation Three of Auditor-General Report No. 37 of 2014–15. No changes have yet been made to external performance information to provide a clearer understanding of the service experience a customer can expect.

4.10 Human Services included nine KPIs within its 2018–19 Corporate Plan related to the Social Security and Welfare Program, delivered under Human Services’ Centrelink master program.61 Of the nine KPIs, one — the Average Speed of Answer — directly relates to Centrelink telephone services. The Customer Satisfaction KPI provides an overall view of customer satisfaction across all social security and welfare services provided by Human Services, of which telephone services is one component.

4.11 Human Services defines the Average Speed of Answer as the average length of time a customer waits to have a call answered through the department’s telephony services. This is calculated by dividing the total time customers waited for their call to be answered by the total number of calls answered by Service Officers. Human Services has used an Average Speed of Answer of less than or equal to 16 minutes as its target for external reporting purposes for telephone services since 2012–13.62
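The calculation described in paragraph 4.11 can be illustrated with a short sketch. It assumes data arriving in aggregated blocks (such as the 15-minute blocks noted in paragraph 4.6), each carrying a count of answered calls and the total time those callers waited. The `Block` structure, its field names and the sample figures are hypothetical; only the formula (total wait time divided by total calls answered) and the 16-minute target come from this report.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

TARGET_SECONDS = 16 * 60  # external target: less than or equal to 16 minutes


@dataclass
class Block:
    """One aggregated reporting block (hypothetical structure)."""
    answered_calls: int        # calls answered by Service Officers
    answered_wait_seconds: int # total time those callers waited


def average_speed_of_answer(blocks: Iterable[Block]) -> Optional[float]:
    """Total wait time for answered calls divided by calls answered.

    Totals are summed across blocks before dividing, so busy blocks
    carry more weight than quiet ones. Returns None if no calls
    were answered.
    """
    total_wait = 0
    total_answered = 0
    for block in blocks:
        total_wait += block.answered_wait_seconds
        total_answered += block.answered_calls
    if total_answered == 0:
        return None
    return total_wait / total_answered


# Hypothetical figures: two blocks with different call volumes.
sample = [Block(answered_calls=10, answered_wait_seconds=9000),
          Block(answered_calls=30, answered_wait_seconds=29400)]
asa_seconds = average_speed_of_answer(sample)  # 38400 / 40 = 960 seconds
meets_target = asa_seconds is not None and asa_seconds <= TARGET_SECONDS
```

Because only answered calls appear in both the numerator and the denominator, time spent waiting by customers who abandon a call does not affect the result.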