Indigenous Advancement Strategy

The audit objective was to assess whether the Department of the Prime Minister and Cabinet has effectively established and implemented the Indigenous Advancement Strategy to achieve the outcomes desired by government.

Please note: Aboriginal and Torres Strait Islander people should be aware that this website may contain images of deceased people.

Summary and recommendations

Background

1. In September 2013, responsibility for the majority of Indigenous-specific policy and programs, as well as some mainstream programs that predominantly service Indigenous Australians, was transferred into the Department of the Prime Minister and Cabinet (the department). These substantial changes saw 27 programs consisting of 150 administered items, activities and sub-activities from eight separate entities moved to the department.

2. In May 2014, the Indigenous Advancement Strategy (the Strategy) was announced by the Australian Government as a significant reform in the administration and delivery of services and programs for Indigenous Australians. Under the Strategy, the items, activities and sub-activities inherited by the department were consolidated into five broad programs under a single outcome. The Australian Government initially committed $4.8 billion to the Strategy over four years from 2014–15.1 The 2014–15 Budget reported that the Australian Government would save $534.4 million over five years by rationalising Indigenous programs, grants and activities.

3. In the first year of the Strategy, from July 2014 to June 2015, the department focused on transitioning over 3000 funding agreements, consolidating legacy financial systems, administering a grant funding round and establishing a regional network.

Audit objective and criteria

4. The objective of the audit was to assess whether the department had effectively established and implemented the Indigenous Advancement Strategy to achieve the outcomes desired by Government.

5. To form a conclusion against the audit objective, the Australian National Audit Office (ANAO) adopted the following high-level audit criteria:

- the department has designed the Strategy to improve results for Indigenous Australians in the Australian Government’s identified priority areas;

- the department’s implementation of the Strategy supports a flexible program approach focused on prioritising the needs of Indigenous communities;

- the department’s administration of grants supports the selection of the best projects to achieve the outcomes desired by the Australian Government, complies with the Commonwealth Grants Rules and Guidelines, and reduces red tape for providers;

- the department has designed and applied the Strengthening Organisational Governance policy to ensure funded providers have high standards of corporate governance; and

- the department has established a performance framework that supports ongoing assessment of program performance and progress towards outcomes.

6. The audit examined the department’s activities relating to the Strategy’s establishment, implementation, grants administration and performance measurement leading up to the Strategy’s announcement in 2014 and its initial implementation until the audit commenced in March 2016.

Conclusion

7. While the Department of the Prime Minister and Cabinet’s design work was focused on achieving the Indigenous Advancement Strategy’s policy objectives, the department did not effectively implement the Strategy.

8. The Australian Government’s identified priority areas are reflected in the Strategy’s program structure, which is designed to be broad and flexible. The department considered the potential risks and benefits associated with the reforms. Planning and design for the Strategy was conducted in a seven-week timeframe, which limited the department’s ability to fully implement key processes and frameworks, such as consultation, risk management and advice to Ministers, as intended.

9. The implementation of the Strategy occurred in a short timeframe and this affected the department’s ability to establish transitional arrangements and structures that focused on prioritising the needs of Indigenous communities.

10. The department’s grants administration processes fell short of the standard required to effectively manage a billion dollars of Commonwealth resources. The basis by which projects were recommended to the Minister was not clear and, as a result, limited assurance is available that the projects funded support the department’s desired outcomes. Further, the department did not:

- assess applications in a manner that was consistent with the guidelines and the department’s public statements;

- meet some of its obligations under the Commonwealth Grants Rules and Guidelines;

- keep records of key decisions; or

- establish performance targets for all funded projects.

11. The performance framework and measures established for the Strategy do not provide sufficient information to make assessments about program performance and progress towards achievement of the program outcomes. The monitoring systems inhibit the department’s ability to effectively verify, analyse or report on program performance. The department has commenced some evaluations of individual projects delivered under the Strategy but has not planned its evaluation approach after 2016–17.

Supporting findings

Establishment

12. The five programs under the Strategy reflect the Government’s five priority investment areas and were designed to be broad and flexible by reducing the number of programs and activities. Clearer links could be established between funded activities and the program outcomes.

13. The department developed a consultation strategy but did not fully implement the approach outlined in the strategy.

14. The department considered the impacts of the Strengthening Organisational Governance policy upon organisations, including whether it was discriminatory to Indigenous organisations.

15. The department identified, recorded and assessed 11 risks to the implementation of the Strategy. The department implemented or partially implemented 27 of 30 risk treatments. Risk management for the Strategy focused on short-term risks associated with implementing the grant funding round.

16. The department provided advice to the Prime Minister and Minister on aspects of planning and design during the establishment of the Strategy. The department did not meet its commitments with respect to providing advice on all the elements identified as necessary for the implementation of the Strategy. The department also did not advise the Minister of the risks associated with establishing the Strategy within a short timeframe.

Implementation

17. The department identified but did not meet key implementation stages and timeframes.

18. The department established and implemented transitional arrangements at the same time as administering the application process for the grant funding round. During this time, the department sent service providers general information about the new arrangements under the Strategy; this information was not targeted at the project or activity level. To support continuity of investment, the department extended some contracts with funding reduced by five per cent or more. Following the grant funding round, additional service gaps were identified and action was taken to fill them.

19. The regional network did not have sufficient time to develop regional investment strategies and implement a partnership model, which were expected to support the grant funding round.

Grants Administration

20. The department developed Indigenous Advancement Strategy Guidelines and an Indigenous Advancement Strategy Application Kit prior to the opening of the grant funding round. The guidelines and the grant application process could have been improved by identifying the amount of funding available under the grant funding round and providing further detail on which activities would be funded under each of the five programs. Approximately half of the applicants did not meet the application documentation requirements and there may be benefit in the department testing its application process with potential applicants in future rounds.

21. The department provided adequate guidance to staff undertaking the assessment process. The department developed mandatory training for staff, but cannot provide assurance that staff attended the training.

22. The department’s assessment process was inconsistent with the guidelines and internal guidance. Applications were assessed against the selection criteria, but the Grant Selection Committee did not receive individual scores against the criteria or prioritise applications on this basis.

23. Internal quality controls and review mechanisms did not ensure that applications were assessed consistently.

24. The department did not maintain sufficient records throughout the assessment and decision-making process. In particular, the basis for the committee’s recommendations is not documented and so it is not possible to determine how the committee arrived at its funding recommendations. The department did not record compliance with probity requirements. Further, the department did not maintain adequate records of Ministerial approval of grant funding.

25. The list of recommended projects provided to the Minister did not provide sufficient information to comply with the mandatory requirements of the Commonwealth Grants Rules and Guidelines, or support informed decision-making, and contained administrative errors.

26. The department’s initial advice to applicants about funding outcomes did not adequately advise applicants of the projects and amount of funding approved.

27. Negotiations with grant recipients were largely verbal and conducted in a short timeframe, with some agreements executed after the project start date. In most instances the department negotiated agreements that were consistent with the Minister’s approval. There is limited assurance that negotiations were fair and transparent.

28. The department has established arrangements to monitor the performance of service providers, but has not specified targets for all providers and arrangements are not always risk-based.

Performance Measurement

29. The department developed a performance framework and refined this in line with the requirements of the enhanced performance framework under the Public Governance, Performance and Accountability Act 2013. The current framework does not provide sufficient information about the extent to which program objectives and outcomes are being achieved.

30. The performance indicators against which funding recipients report cannot be easily linked to the achievement of results and intended outcomes across the Strategy.

31. The department developed a system by which entities could provide performance reporting information electronically. However, the processes to aggregate and use this information are not sufficiently developed to allow the department to report progress against outcomes at a program level, benchmark similarly funded projects, or undertake other analysis of program results.

32. The department drafted an evaluation strategy in 2014, which was not formalised. In May 2016 the Minister agreed to an evaluation approach and a budget to conduct evaluations of Strategy projects in 2016–17.

Recommendations

Recommendation No. 1

Paragraph 3.29

The Department of the Prime Minister and Cabinet ensure that administrative arrangements for the Indigenous Advancement Strategy provide for the regional network to work in partnership with Indigenous communities and deliver local solutions.

Department of the Prime Minister and Cabinet’s response: Agreed.

Recommendation No. 2

Paragraph 4.28

For future Indigenous Advancement Strategy funding rounds, the Department of the Prime Minister and Cabinet:

- publishes adequate documentation that clearly outlines the assessment process and the department’s priorities and decision-making criteria; and

- consistently implements the process.

Department of the Prime Minister and Cabinet’s response: Agreed.

Recommendation No. 3

Paragraph 4.49

The Department of the Prime Minister and Cabinet, in providing sound advice to the Minister for Indigenous Affairs about the Indigenous Advancement Strategy:

- clearly and accurately outlines the basis for funding recommendations; and

- documents the outcomes of the decision-making process.

Department of the Prime Minister and Cabinet’s response: Agreed.

Recommendation No. 4

Paragraph 5.21

The Department of the Prime Minister and Cabinet identify the outcomes and results to be achieved through the Indigenous Advancement Strategy and analyse performance information to measure progress against these outcomes.

Department of the Prime Minister and Cabinet’s response: Agreed.

Summary of entity response

The department welcomes the audit of the processes involved in the establishment of the Indigenous Advancement Strategy, as a major reform in the administration and delivery of services and programs to Indigenous Australians in response to widespread criticism over many years.

The department acknowledges the findings of the report but notes that the implementation occurred in a very challenging timeframe and was accompanied by a reasonably well-managed transition from the old to the new arrangements without serious interruption to service delivery. Having moved through the transition period, with greater experience among applicants and stakeholders, the Strategy is moving into a more mature phase of implementation that draws on lessons learnt.

The ANAO report highlights broad stakeholder support for features of the Indigenous Advancement Strategy, including the benefits of having a more consolidated program structure, greater flexibility through which organisations can receive funding, reduced red tape for service providers and developing on-the-ground responses to issues.

The department accepts all four recommendations, with action already taken or underway that implements improvements consistent with the recommendations. This includes revisions to the Indigenous Advancement Strategy Guidelines; a strengthened role for regional network staff in supporting organisations to develop funding proposals; improvements in application assessment processes, briefing processes and record keeping; and strengthening of performance monitoring and evaluation approaches.

1. Background

The Indigenous Advancement Strategy and Indigenous affairs reform

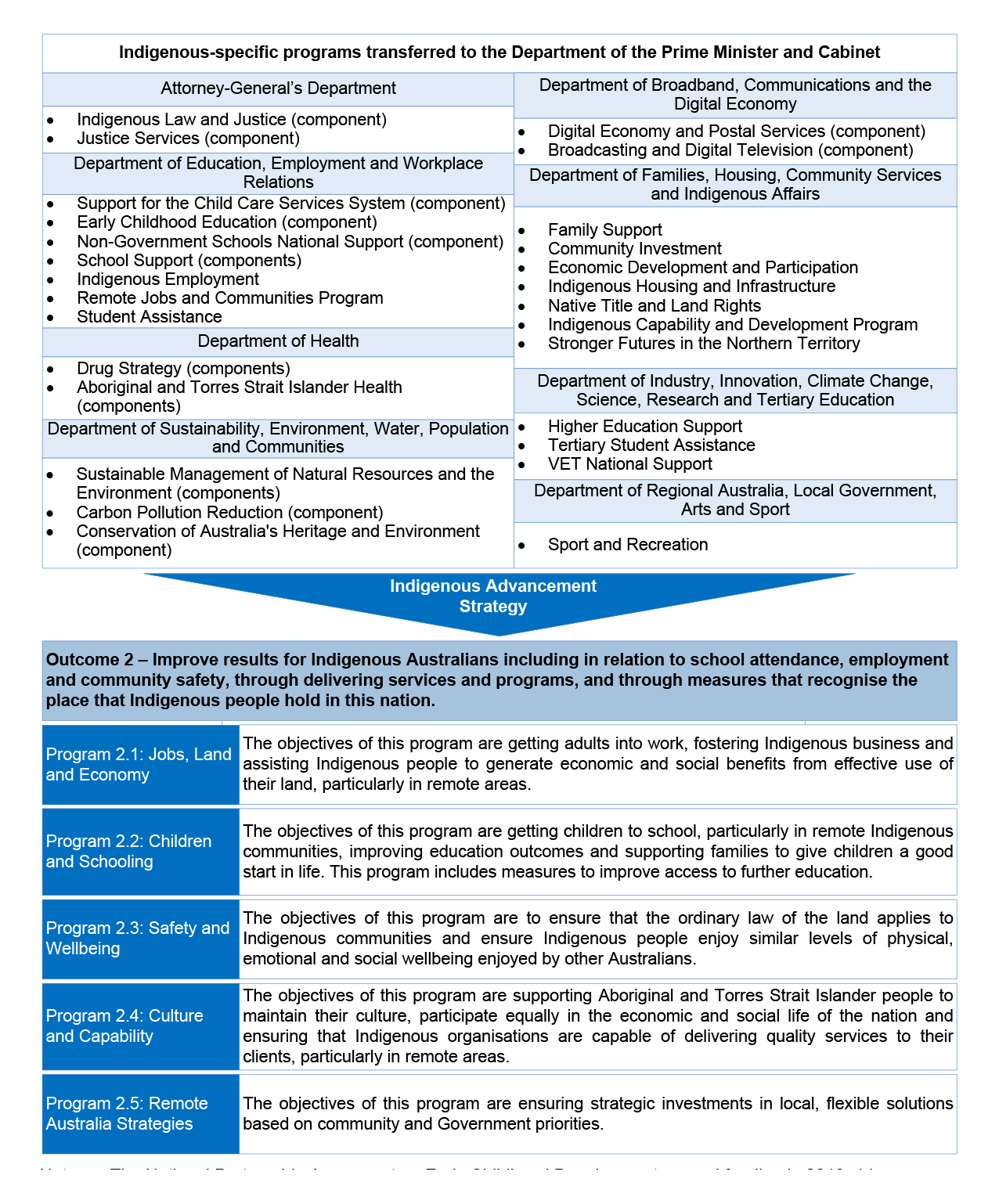

1.1 In September 2013, responsibility for the majority of Indigenous-specific policy and programs, as well as some mainstream programs that predominantly service Indigenous Australians, was transferred into the Department of the Prime Minister and Cabinet (the department). These substantial changes saw 27 programs consisting of 150 administered items, activities and sub-activities from eight separate entities moved to the department.

1.2 In May 2014, the Indigenous Advancement Strategy (the Strategy) was announced by the Australian Government as a significant reform in the administration and delivery of services and programs for Indigenous Australians. Under the Strategy, the items, activities and sub-activities inherited by the department were consolidated into five broad programs under a single outcome. The Australian Government initially committed $4.8 billion to the Strategy over four years from 2014–15.2 The 2014–15 Budget reported that the Australian Government would save $534.4 million over five years by rationalising Indigenous programs, grants and activities.

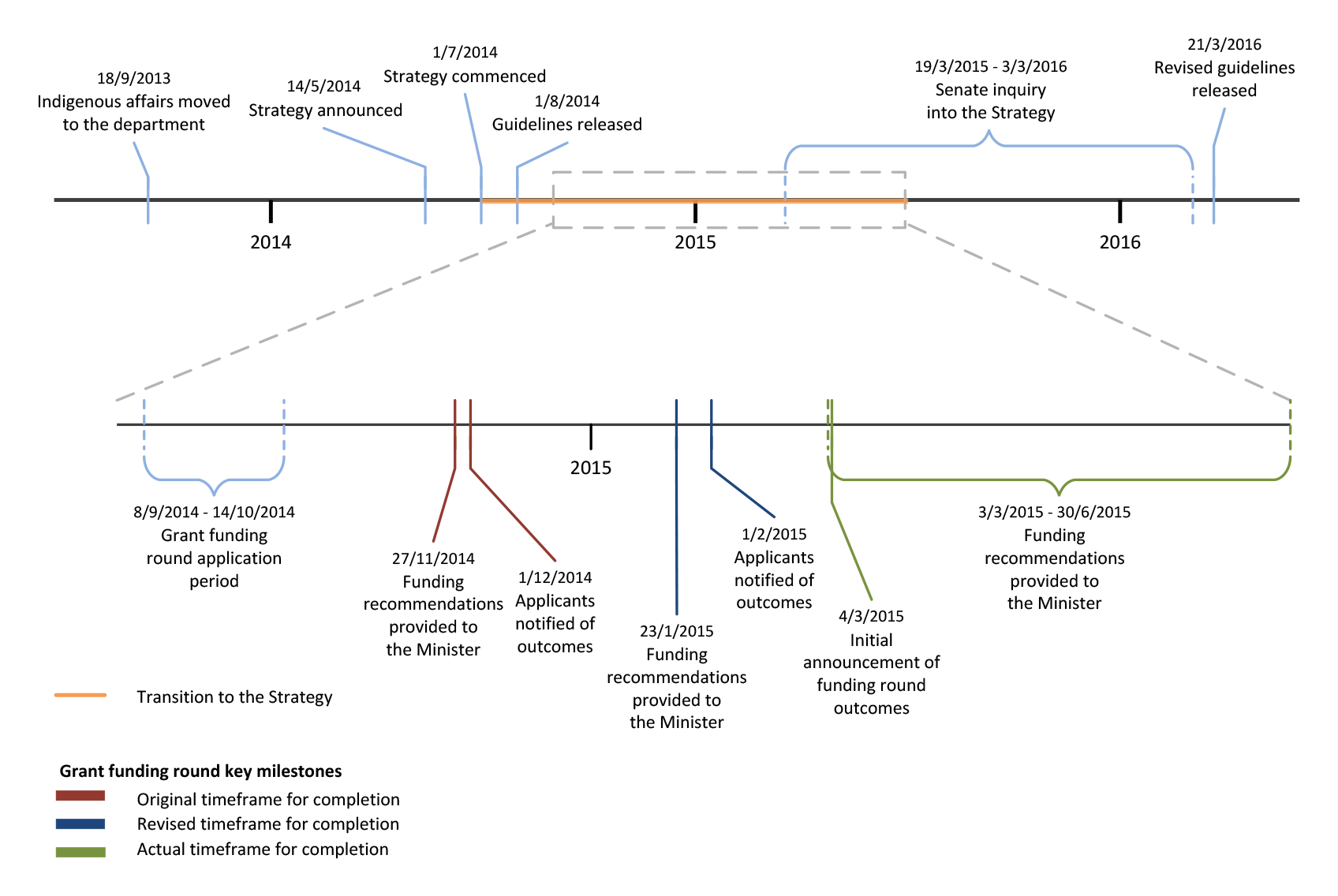

1.3 In the first year of the Strategy, from July 2014 to June 2015, the department focused on transitioning over 3000 funding agreements, consolidating legacy financial systems, administering a grant funding round and establishing a regional network. The timeline for the establishment and implementation of the Strategy is set out in Figure 1.1. Figure 1.2 sets out the legacy programs inherited by the department and the new program structure.

Program objectives and outcomes

1.4 The Strategy’s outcome, as set out in Figure 1.2, is to improve results for Indigenous Australians, with a particular focus on the Government’s priorities of ensuring children go to school, adults work, Indigenous business is fostered, the ordinary rule of law is observed in Indigenous communities as in other Australian communities, and Indigenous culture is supported. In addition to these policy outcomes, the Strategy is intended to:

- reduce program duplication and fragmentation, reduce delivery costs and more clearly link activity to outcomes;

- ensure communities have the key role in designing and delivering local solutions to local problems; and

- offer a simplified approach to funding that reduces the red tape burden on communities and providers.

Figure 1.1: Timeline of key milestones and events in establishing and implementing the Strategy

Source: ANAO analysis.

Figure 1.2: Transition of legacy programs to new program structure under the Strategy

Note: The National Partnership Agreement on Early Childhood Development ceased funding in 2013–14.

Source: ANAO analysis; Department of the Prime Minister and Cabinet, 2016–17 Portfolio Budget Statements, DPM&C, Canberra, 2016.

Grant funding through the Strategy

1.5 Under the Indigenous Advancement Strategy Guidelines 2014, organisations could receive funding through five methods: open competitive grant rounds; targeted or restricted grant rounds; direct grant allocation; demand-driven processes; and ad hoc grants. The department announced the first open competitive grant funding round in September 2014. Approximately $2.3 billion of funding was available, of which $1.65 billion was set aside for the grant funding round, with a further $515 million to support processes such as demand-driven applications and other emerging priorities across 2014–18.

1.6 In the grant funding round, the department received applications from 2345 organisations, which resulted in 996 organisations receiving approximately $1 billion to deliver 1350 projects across Australia.
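As a rough illustration of the scale implied by the figures in paragraph 1.6, the following sketch derives the share of applicant organisations that were funded and the average funding per project and per funded organisation. The function and variable names are illustrative only, and the $1 billion total is the report's approximate figure, so the results are indicative rather than audited values.

```python
# Figures as reported in paragraph 1.6; the funding total is approximate.
APPLICANT_ORGANISATIONS = 2345
FUNDED_ORGANISATIONS = 996
FUNDED_PROJECTS = 1350
APPROX_TOTAL_FUNDING = 1_000_000_000  # "approximately $1 billion"


def grant_round_ratios(applicants, funded, projects, total_funding):
    """Return simple indicative ratios for a grant funding round."""
    return {
        "share_of_applicants_funded": funded / applicants,
        "projects_per_funded_organisation": projects / funded,
        "average_funding_per_project": total_funding / projects,
        "average_funding_per_organisation": total_funding / funded,
    }


ratios = grant_round_ratios(
    APPLICANT_ORGANISATIONS,
    FUNDED_ORGANISATIONS,
    FUNDED_PROJECTS,
    APPROX_TOTAL_FUNDING,
)

for name, value in ratios.items():
    print(f"{name}: {value:,.3f}")
```

On these inputs, roughly 42 per cent of applicant organisations received funding, at an average of about $740 000 per project. Because the total is rounded, the averages should be read as orders of magnitude only.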

1.7 The department released new guidelines in March 2016, which consolidated the funding mechanisms into three options. The department can:

- invite applications through an open grant process or targeted grant process where it has identified a need to address specific outcomes on a national, regional or local basis;

- approach an organisation where an unmet need is identified; or

- respond to community-led proposals for support related to an emerging community need or opportunity.

1.8 Funding under the Strategy is administered through a single funding agreement between an organisation and the department, irrespective of the number of projects funded. The intended focus of project funding is clear and measurable results, with payments linked to the achievement of results and outcomes.

1.9 Under the Strategy’s Strengthening Organisational Governance policy, organisations receiving $500 000 or more (GST exclusive) in any one financial year are required to be incorporated under Commonwealth legislation, and remain so while receiving funding through the Strategy. Indigenous organisations are required to be incorporated under the Corporations (Aboriginal and Torres Strait Islander) Act 2006, and non-Indigenous organisations under the Corporations Act 2001.3

Audit approach

1.10 The objective of the audit was to assess whether the department had effectively established and implemented the Indigenous Advancement Strategy to achieve the outcomes desired by Government.

1.11 To form a conclusion against the audit objective, the Australian National Audit Office (ANAO) adopted the following high-level audit criteria:

- the department has designed the Strategy to improve results for Indigenous Australians in the Australian Government’s identified priority areas;

- the department’s implementation of the Strategy supports a flexible program approach focused on prioritising the needs of Indigenous communities;

- the department’s administration of grants supports the selection of the best projects to achieve the outcomes desired by the Australian Government, complies with the Commonwealth Grants Rules and Guidelines, and reduces red tape for providers;

- the department has designed and applied the Strengthening Organisational Governance policy to ensure funded providers have high standards of corporate governance; and

- the department has established a performance framework that supports ongoing assessment of program performance and progress towards outcomes.

1.12 The audit examined the department’s activities relating to the Strategy’s establishment, implementation, grants administration and performance measurement leading up to the Strategy’s announcement in 2014 and its initial implementation until the audit commenced in March 2016. The audit methodology included examining and analysing: the department’s Strategy documentation; correspondence and advice to the responsible Minister; systems and documentation relating to performance and evaluation; departmental data relating to funding and organisations receiving funding; and funding assessments. The audit methodology also involved interviewing departmental staff, applicants from the grant funding round, and relevant peak bodies; and reviewing communication received through the citizens’ input facility.4

1.13 The audit was conducted in accordance with the ANAO’s Auditing Standards at a cost to the ANAO of approximately $908 000.

1.14 Since the commencement of the audit the department has implemented new grant guidelines, re-introduced an Assessment Management Office, implemented a risk management framework, initiated improvements to reporting and held consultation sessions with stakeholders. The department also informed the ANAO that it was in the process of establishing processes to drive risk and compliance management at the local level, developing place-based investment strategies and progressing a strategy to assess the effectiveness of the Strengthening Organisational Governance policy. These initiatives did not fall within the scope of this audit because they were implemented subsequent to the establishment and initial implementation of the Strategy and will be the subject of a future audit by the ANAO.

1.15 The department has acknowledged shortcomings in its record-keeping and management in relation to grants administration under the Strategy. The ANAO found that documentation relating to the planning and initial implementation of the Strategy was not held on a records management system and that the department did not have effective version controls. On this basis, the ANAO does not have assurance that the department has produced complete records of the design and implementation of the Strategy.

2. Establishment

Areas examined

This chapter examines whether the Department of the Prime Minister and Cabinet has designed the Indigenous Advancement Strategy to improve results for Indigenous Australians in the Australian Government’s identified priority areas.

Conclusion

The Australian Government’s identified priority areas are reflected in the Strategy’s program structure and the department considered the potential risks and benefits associated with the reforms. Planning and design for the Strategy was conducted in a seven-week timeframe, which limited the department’s ability to fully implement key processes and frameworks, such as consultation, risk management and advice to Ministers, as intended.

Were outcomes identified and did program activities align with desired outcomes?

The five programs under the Strategy reflect the Government’s five priority investment areas and were designed to be broad and flexible by reducing the number of programs and activities. Clearer links could be established between funded activities and the program outcomes.

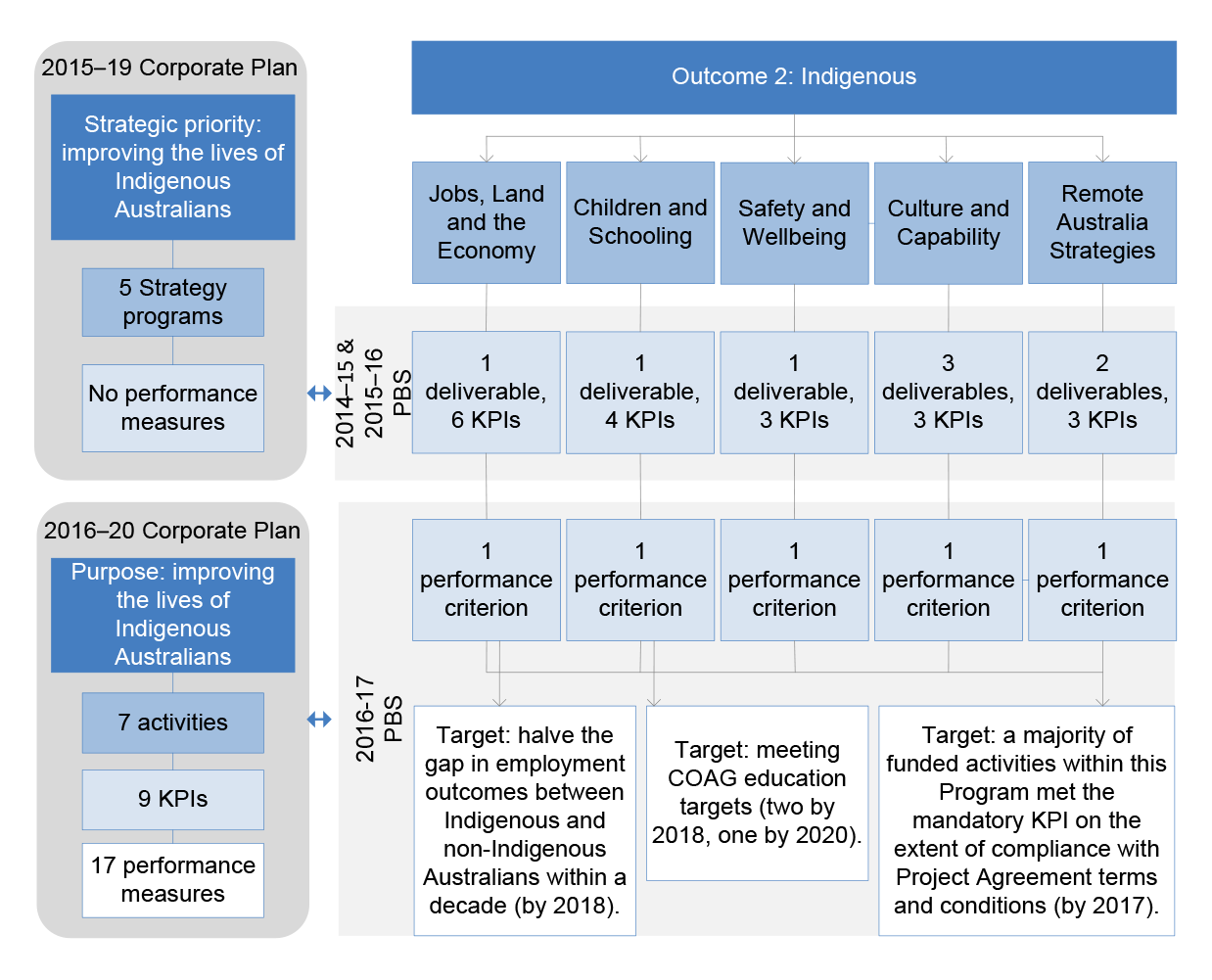

2.1 The Indigenous Advancement Strategy’s (the Strategy) outcome is to ‘improve results for Indigenous Australians including in relation to school attendance, employment and community safety, through delivering services and programs, and through measures that recognise the place that Indigenous people hold in this nation’ and is set out in outcome two of the department’s Portfolio Budget Statements (see Figure 1.2).5 The Australian Government’s five areas for priority investment in Indigenous affairs, set out in paragraph 1.4, broadly align with the five programs under the Strategy.

2.2 The Department of the Prime Minister and Cabinet (the department) advised the ANAO that the program objectives were intended to be broad and flexible. The department also advised that to facilitate flexible and innovative approaches to investment activity it took a limited approach to identifying program activities under the five broad programs. The department provided examples of the types of high-level activities that would be funded under each program in the Indigenous Advancement Strategy Guidelines 2014 (the guidelines). In March 2016 the department revised the guidelines providing additional examples of the types of high-level activities that will be funded under each program.6

2.3 The department had not classified the projects funded under each program into common groups or activities, presenting challenges in linking program activities and the program outcomes. This is discussed further in Chapter 5 in relation to the department’s ability to collect and report performance information at the activity level. In January 2017, the department advised that it had completed a project to group and classify activities under the Strategy.

Were the risks and benefits of delivering the Strategy through a new program structure considered?

The department considered the risks and benefits of delivering the Strategy through a consolidated program structure. To manage the risks identified, the department implemented program guidelines and in 2016 approved an evaluation approach.

2.4 To support the development of a new program structure, the department undertook a review of Indigenous-specific programs and activities transferred during Machinery of Government changes in September 2013. As a part of this review the department outlined which administered items, activities and sub-activities it had inherited through the legacy programs and analysed the potential risks and benefits of moving to a more consolidated program structure.

2.5 The potential benefits identified by the department included: reduced program fragmentation and duplication; simplified program structure and therefore funding arrangements; and clearer linking of activities and investment to outcomes. Feedback provided to the Senate Finance and Public Administration References Committee in June 2015 and to the ANAO indicated broad support for features of the Strategy.7 These features included the benefits of having a more consolidated program structure, greater flexibility through which organisations could receive funding, reducing red tape for service providers and developing on-the-ground responses to issues.

2.6 The key risks of consolidating the program structure identified by the department in its review of Indigenous-specific programs included: continued duplication due to the introduction of large flexible funding streams; reduced flexibility in funding due to existing commitments under previous funding arrangements; inability to link activities to outcomes due to the limited existing evaluation of programs and activities; and the risk that consolidating programs would be viewed by Indigenous Australians as an attempt to reduce support. To manage these risks the department recommended that it establish robust guidelines, foster a strong culture of evaluation and manage the expectations of Indigenous communities, organisations and the service delivery sector.

2.7 The department published the program guidelines in August 2014. The evaluation and performance improvement strategy was drafted in 2014 but was not finalised, and evaluation activities have been limited. In May 2016 an evaluation approach and budget were approved by the Minister for Indigenous Affairs (the Minister) (see Chapter 5).

Were stakeholders consulted as intended?

The department developed a consultation strategy but did not fully implement the approach outlined in the strategy.

2.8 The department identified that broad consultation with individuals, governments, service providers, communities and Indigenous leaders was important to successfully deliver the Strategy’s reforms and the Government’s desired new engagement with Indigenous Australians. To provide direction and guidance in informing and consulting with key stakeholders during the implementation of the Strategy, the department developed a communication and stakeholder engagement strategy. This approach outlined the purpose of initial consultation—to provide information, seek feedback on concerns and opportunities, and seek views on how the new arrangements could best be implemented. Figure 2.1 sets out the key stakeholder groups the department intended to engage with and the purpose for engaging with each group.

Figure 2.1: Purpose of communication and engagement with key stakeholders

Note: The figure refers to three phases of engagement and communication during the implementation of the Strategy—phase one covers June/July 2014; phase two covers July/August 2014; and phase three covers September 2014 onwards.

Source: Department of the Prime Minister and Cabinet.

2.9 The department provided information to the Indigenous Advisory Council (the council) on the Strategy’s new reforms, and sought feedback from the council on concerns and opportunities.8 The department advised the ANAO that it met with Indigenous leaders and national peak bodies outside of the council but did not keep records of the meetings. As such, it is not clear who the department met with, what feedback the department received and how this was considered in developing the Strategy.

2.10 The department reported that it also held approximately 80 information sessions during August and September 2014 with key regional stakeholders, including potential applicants.9 The information sessions focused on providing information on the policy intent and key principles of the Strategy, and the grant funding round. The information sessions did not cover some of the main purposes for consultation set out in Figure 2.1, including how the department should action the Strategy at the local level through community consultation, co-designing regional strategies and actively partnering with government and other service providers to address needs in priority programs and locations.10 Of the 82 public submissions received from stakeholders during the audit, 27 per cent indicated that there had been a lack of consultation between the department and Indigenous communities. Of the 114 applicants interviewed, 12 per cent said that they had been consulted prior to the commencement of the Strategy and 69 per cent said that they had not.11
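To give a sense of the numbers behind the percentages in paragraph 2.10, the sketch below converts each reported percentage into an approximate count. It assumes the percentages were rounded to whole numbers, so the underlying counts may differ by one or so. The helper name `approximate_count` is illustrative and not part of the audit methodology.

```python
def approximate_count(total, percent):
    """Convert a reported whole-number percentage into an approximate count."""
    return round(total * percent / 100)


# Totals and percentages as reported in paragraph 2.10.
PUBLIC_SUBMISSIONS = 82
INTERVIEWED_APPLICANTS = 114

lack_of_consultation = approximate_count(PUBLIC_SUBMISSIONS, 27)
consulted = approximate_count(INTERVIEWED_APPLICANTS, 12)
not_consulted = approximate_count(INTERVIEWED_APPLICANTS, 69)

# Share of interviewed applicants not covered by the two reported answers.
other_share_percent = 100 - 12 - 69

print(lack_of_consultation, consulted, not_consulted, other_share_percent)
```

On these inputs, about 22 submissions raised a lack of consultation, about 14 interviewed applicants said they had been consulted and about 79 said they had not. The remaining 19 per cent of interviewed applicants is not broken down in the paragraph itself.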

2.11 During the Senate Finance and Public Administration References Committee inquiry into the Commonwealth Indigenous Advancement Strategy tendering processes, the department stated that it did not have a consistent engagement plan and mechanism for engaging more broadly with service providers and the community.12 Further, the department acknowledged that more engagement should have been undertaken in the early stages of designing and delivering the Strategy.13

Was the impact on organisations considered in designing the Strengthening Organisational Governance policy?

The department considered the impacts of the Strengthening Organisational Governance policy upon organisations, including whether it was discriminatory to Indigenous organisations and the financial and administrative implications.

2.12 The purpose of the Strengthening Organisational Governance policy (the policy) is to ensure that funded organisations have high standards of governance and accountability that facilitate high quality service delivery for Indigenous Australians. In March 2014, the department sought approval from the Minister for the policy, noting that Indigenous organisations could perceive the policy as discriminatory and may resist the requirement to incorporate under the Corporations (Aboriginal and Torres Strait Islander) Act 2006 (the CATSI Act) due to the lack of choice and perceived additional regulatory requirements, reporting and scrutiny being applied. The department also noted that if given the choice of incorporating under the Corporations Act 2001 (the Corporations Act) Indigenous organisations would not be able to access the support services available to meet the specific needs of Indigenous people under the CATSI Act or benefit from the level of monitoring and scrutiny available through the Office of the Registrar of Indigenous Corporations. The department’s advice to the Minister also indicated that the policy would probably not contravene the Racial Discrimination Act 1975.

2.13 The department estimated that the financial impact on organisations required to transfer their incorporation could be between $10 000 and $20 000 for small organisations, and in the order of $100 000 for larger organisations with more complex arrangements.

2.14 Indigenous organisations already incorporated under the Corporations Act were expected to either transfer incorporation to the CATSI Act or apply for an exemption from the policy if able to demonstrate they were well-governed and high-performing. In May 2015 the department recommended to the Minister that the policy be amended to allow Indigenous organisations already incorporated under the Corporations Act to retain this incorporation without applying for an exemption. The department advised the Minister that to force Indigenous organisations already incorporated under the Corporations Act to transfer their incorporation ‘is onerous and could be perceived as heavy-handed’ as ‘both the Corporations Act and CATSI Act require similar governance standards’. The Minister agreed to the department’s recommendation.

2.15 The department has not finalised a formal strategy to assess the effectiveness of the policy and whether it is having a positive impact upon governance within applicable organisations.14 The department advised the ANAO in July 2016 that the regulatory requirements of Commonwealth incorporation legislation are expected to inherently strengthen organisational governance and that, as most organisations had transferred incorporation within the past six months, it is too early to identify broad improvements in governance.

Were risks identified and processes put in place for ongoing risk management?

The department identified, recorded and assessed 11 risks to the implementation of the Strategy. The department implemented or partially implemented 27 of 30 risk treatments. Of the risk treatments implemented or partially implemented, three had been fully implemented within the timeframes specified by the department. Risk management for the Strategy focused on short-term risks associated with implementing the grant funding round.

2.16 The department initially assessed the overall implementation risk for the Strategy as medium due to the complexity of the legacy arrangements transitioned to the department, short timeframes and the number of stakeholders impacted. After consulting with the Department of Finance, the department increased the implementation risk level to high for similar reasons, such as the significant structural changes and tight implementation timeframes involved with the reforms proposed under the Strategy.15

2.17 The department identified, recorded and assessed 11 risks to the implementation of the Strategy and identified 30 risk treatments. Of the 30 risk treatments, the ANAO identified that 17 have been implemented, 10 have been partially implemented and three have not been implemented. Of the 17 treatments implemented, three had been implemented by the date specified, nine had not been implemented by the date specified, four had been partially implemented by the date specified and one did not specify a date.
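The tallies in paragraph 2.17 can be cross-checked with a few lines of arithmetic. The following is a minimal sketch only: the counts are transcribed from the paragraph above, and the variable names are illustrative rather than terms used by the department or the ANAO.

```python
# Counts transcribed from paragraph 2.17; all names here are illustrative.
treatments_by_status = {
    "implemented": 17,
    "partially implemented": 10,
    "not implemented": 3,
}

# Timing breakdown reported for the 17 implemented treatments.
implemented_by_timing = {
    "implemented by the date specified": 3,
    "not implemented by the date specified": 9,
    "partially implemented by the date specified": 4,
    "no date specified": 1,
}

total_treatments = sum(treatments_by_status.values())
implemented_or_partial = (
    treatments_by_status["implemented"]
    + treatments_by_status["partially implemented"]
)
timing_total = sum(implemented_by_timing.values())

print(f"Total risk treatments: {total_treatments}")
print(f"Implemented or partially implemented: {implemented_or_partial}")
print(f"Implemented treatments covered by the timing breakdown: {timing_total}")
```

The three totals should match the figures of 30, 27 and 17 quoted in the text.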

2.18 In assessing implementation risks, the department identified the key sources of risk as: complexities associated with the reform; the number of stakeholders involved; short timeframes; pace and scale of the changes; and inadequate resourcing. The risks identified included: inadequate consultation with external stakeholders; organisations not engaged in a timely fashion; program objectives not achieved; and agreed implementation timeframes not met. The department developed a number of strategies to treat the risks. These included: an implementation plan; high-level program objectives and key performance indicators; governance arrangements; communication and stakeholder engagement strategy; and engagement with peak bodies and the council. The department developed high-level program objectives and key performance indicators and governance arrangements by the dates it had specified in its risk assessment but did not develop or implement the rest of the strategies outlined above until later in the implementation of the Strategy.

2.19 The department reported risks on a monthly basis to the Indigenous Affairs Reform Implementation Project Board.16 The reports focused on short-term delivery risks associated with the timeframes, resources and systems required for the grant funding round. The department did not focus on strategic risks identified in the risk assessment and risk register.

2.20 An Implementation Readiness Assessment17 in December 2014 found that the department did not have a mature approach to risk management during the establishment of the Strategy, with no risk management framework implemented and risk registers not regularly updated. By the second Implementation Readiness Assessment in November 2015, the department had developed a risk management framework and was making progress in updating risk registers covering strategic, program and provider risk. In May 2016, the department developed a strategic risk assessment for the Strategy.

Was timely and appropriate advice provided to the responsible Minister to establish the Strategy?

The department provided advice to the Prime Minister and Minister on aspects of planning and design during the establishment of the Strategy. The department did not meet its commitments with respect to providing advice on all the elements identified as necessary for the implementation of the Strategy. The department also did not advise the Minister of the risks associated with establishing the Strategy within a short timeframe.

2.21 The department provided advice to the Prime Minister and Minister during the seven weeks between the Strategy’s approval by Government and the Strategy’s implementation in July 2014, including about:

- Indigenous affairs funding received by non-Indigenous/non-government organisations;

- the future framework for Indigenous affairs delivery;

- budget savings and reinvestment in Indigenous affairs, the department’s review of Indigenous-specific programs and the proposed submission to Government;

- background papers on proposed reforms to Indigenous affairs;

- proposed 2014–15 transition strategy for the implementation of the Strategy;

- draft items to be included in the Financial Management and Accountability Regulations 1997 for the five new programs under the Strategy; and

- an update on the implementation of the Strategy.

2.22 In seeking Government approval to establish and implement the Strategy, the department undertook to provide a range of key documents to the Minister by 30 June 2014. These documents addressed key elements of planning and designing the Strategy and included an implementation plan, budget investment approach, program administration arrangements (including program outcomes and key indicators, program guidelines, funding agreements and risk management), an evaluation and performance improvement strategy and a community engagement approach. These were not provided to the Minister by 30 June 2014.

2.23 In July 2014 the department provided advice to the Minister on the program guidelines. These guidelines included information on program outcomes and key indicators and the initial approach the department would take to funding agreements. In June 2015 the department provided advice on a proposed consultation and engagement approach focused on reviewing the guidelines. In March 2016 the department also briefed the Minister on an evaluation approach.

2.24 In September 2014 the department provided advice to the Minister on the proposed approach to the grant funding round. In its advice to the Minister the department noted that the tight timeframes and expected volume of applications would place significant pressure on current resourcing but that the department would investigate ways to ensure that the round was finalised successfully. Prior to implementation of the funding round, the department did not provide any further advice to the Minister that agreed implementation timeframes would not be met and the implications of this on implementation (see Chapter 3, timeframes for implementation).

3. Implementation

Areas examined

This chapter examines whether the Department of the Prime Minister and Cabinet’s implementation of the Indigenous Advancement Strategy supports a flexible program approach focused on prioritising the needs of Indigenous communities.

Conclusion

The implementation of the Strategy occurred in a short timeframe and this affected the department’s ability to establish transitional arrangements and structures that focused on prioritising the needs of Indigenous communities.

Area for improvement

The ANAO made one recommendation aimed at providing the regional network with support to work in partnership with Indigenous communities and deliver local solutions.

Did the department establish internal oversight arrangements for implementation?

The department established an Indigenous Affairs Reform Implementation Project Board to provide oversight and make decisions about the implementation of the Strategy and guide reforms to Indigenous affairs.

3.1 In May 2014 the Department of the Prime Minister and Cabinet (the department) established the Indigenous Affairs Reform Implementation Project Board (the implementation project board) as the decision-making body to oversee the Australian Government’s Indigenous affairs reform agenda. The implementation project board met eight times between May 2014 and October 2014. The membership of the implementation project board was made up of the Indigenous Affairs Group executive.

3.2 In line with its terms of reference, the implementation project board initially discussed overarching strategic issues such as the whole-of-government program framework, evaluation and performance improvement strategy, and communication and engagement strategy. From July 2014, the meetings became focused on the grant funding round and related issues requiring immediate attention.

3.3 In June 2015, the implementation project board was revised as the Program Management Board as part of a new governance structure for the Indigenous Affairs Group of the department. Similar to the implementation project board, the Program Management Board’s membership included the Indigenous Affairs Group executive, with the addition of the regional network director and an Indigenous engagement special advisor. The responsibilities of the Program Management Board are similar to those of the implementation project board and include making decisions and providing advice to program owners18 and the department’s executive on the strategic directions and implementation of Indigenous-specific programs, in particular the Indigenous Advancement Strategy (the Strategy).

Were key stages and timeframes for implementation identified and met?

The department identified but did not meet key implementation stages and timeframes. The main timing delays occurred during the early implementation planning and at the assessment stage of the grants funding round.

3.4 The Strategy was a significant reform that the department committed to delivering within a short timeframe. In March 2014 the department developed an implementation strategy that included key deliverables and timeframes (see Table 3.1).

Table 3.1: Key deliverables for implementation of the Strategy

| Deliverable | Timeframe for completion | Date achieved |
|---|---|---|
| Implementation plan | June 2014 | Draft developed but not approved (a) |
| Budget investment approach | June 2014 | Draft developed but not approved (b) |
| Program guidelines | June 2014 | August 2014 |
| Risk management plan | June 2014 | November 2015 |
| Program outcomes and performance indicators | June 2014 | May 2014 |
| Evaluation and performance improvement strategy | June 2014 | Draft developed but not approved (c) |
| Community engagement strategy | June 2014 | July 2014 |
| Departmental capability development plan | June 2014 | Not developed |

Note a: The Secretary considered the draft implementation plan in July 2014.

Note b: The budget investment approach was initially presented to the implementation project board in June 2014.

Note c: The department developed a draft evaluation and performance improvement strategy which was considered by the Indigenous Affairs Reform Implementation Project Board in July 2014. While some evaluation and information analysis activity occurred, the plan was not formally agreed to, endorsed or funded. In May 2016, the Minister for Indigenous Affairs approved funding for evaluation activities.

Source: ANAO analysis.

3.5 The department identified pressures on implementation timeframes in early June 2014. This was partly related to the overall tight timeframes for delivering critical elements of the Strategy, and the lack of appropriately skilled resources for the implementation period. At this time, key tasks had not been completed or were unlikely to be completed within the desired timeframes. For example, the department had not determined the final budget amounts that were available for the 2014–15 and 2015–16 financial years due to the multiple financial systems containing information making it difficult to develop a comprehensive financial position. This affected the department’s ability to build a funding profile for the Strategy and determine priority activities. By August 2014, a number of deliverables had been completed or commenced, but the department was tracking behind schedule in relation to developing a policy for regional strategies, funding allocation options, risk assessment guidelines, the evaluation and performance improvement strategy and a procurement strategy for the funding round.

3.6 The Minister for Indigenous Affairs (the Minister) agreed to timeframes for the grant funding round in September 2014. The department finalised its approach to the assessment process in October 2014, after the grant funding round had been advertised but prior to closing the round to applicants. The department did not meet the initial assessment timeframes because of issues including the volume of project applications received and the lack of a grants management system to support the assessment process (discussed further in Chapter 4). In November 2014, the Minister agreed to extend the timeframes for the assessment process. The department did not meet the revised timeframes. The original and revised timeframes for the grant funding round are outlined in Table 3.2. The department assessed 2472 applications19 between October 2014 and March 2015 at a cost of $2.2 million.20

Table 3.2: Original and revised timeframes for grant funding round

| Action | Timeframe for completion | Date achieved |
|---|---|---|
| Original | | |
| Tender closes | 17 October 2014 | 17 October 2014 |
| Application assessment complete | 2 November 2014 | 3 March 2015 |
| Application assessment summary complete | 10 November 2014 | 3 March 2015 |
| Initial advice provided to the Minister | 27 November 2014 | See below |
| Final advice provided to the Minister | 28 November 2014 | See below |
| Applicants notified of outcomes | 1 December 2014 | See below |
| Revised | | |
| Initial advice provided to the Minister | 24 December 2014 | 1 March 2015 |
| Final advice provided to the Minister | 23 January 2015 | March–June 2015 |
| Applicants notified of outcomes | February 2015 | From March 2015 |

Source: ANAO analysis.
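The size of the delays in Table 3.2 can be illustrated by computing the number of days between each planned and achieved date. The sketch below is illustrative only: it covers only the rows for which the table gives exact dates on both sides, and the names `milestones` and `delay_in_days` are hypothetical, not drawn from the department's systems.

```python
from datetime import date

# Rows from Table 3.2 that give an exact planned and achieved date.
# Rows reported as month ranges or "See below" are deliberately left out.
milestones = [
    ("Tender closes", date(2014, 10, 17), date(2014, 10, 17)),
    ("Application assessment complete", date(2014, 11, 2), date(2015, 3, 3)),
    ("Application assessment summary complete", date(2014, 11, 10), date(2015, 3, 3)),
    ("Initial advice provided to the Minister (revised)", date(2014, 12, 24), date(2015, 3, 1)),
]


def delay_in_days(planned: date, achieved: date) -> int:
    """Return the number of calendar days between the planned and achieved dates."""
    return (achieved - planned).days


delays = {name: delay_in_days(planned, achieved) for name, planned, achieved in milestones}

for name, days in delays.items():
    print(f"{name}: {days} days after the planned date")
```

Calendar days are used for simplicity; a working-day count would give smaller figures.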

3.7 The department has acknowledged that its planning and timeframes were optimistic and not achievable, and recognises the importance of realistic program planning and implementation timeframes that are commensurate with the scale and complexity of the intended activity.

Were transitional arrangements established that provided certainty for providers, and supported continuity of investment and employment?

From July 2014 to July 2015, the department established and implemented transitional arrangements at the same time as administering the application process for the grant funding round. During this time, the department sent service providers general information about the new arrangements under the Strategy, but this information was not targeted at the project or activity level. To support continuity of investment, the department extended some contracts with funding reduced by five per cent or more. Following the grant funding round, additional service gaps were identified and action was taken to fill them.

3.8 The Strategy was implemented from 1 July 2014, with a transition period of 12 months to allow continuity of frontline services and time for communities and service providers to adjust to the new arrangements. The purpose of the transition arrangements was to ensure certainty for providers, continuity of investment and continuity of employment, while consultation was undertaken and longer term investment strategies across the five programs were developed. During the transition period, the department also implemented the application and assessment process for the grant funding round.

3.9 In May 2014, the department wrote to existing service providers to advise that existing programs and contracts were transitioning to new arrangements under the Strategy. This correspondence provided general information about the budget, the new Strategy programs and the regional network, and noted that contracts would be honoured, with some extensions offered for either six or 12 months. In September 2014, the department again wrote to service providers advising about the upcoming 2014 grant funding round, and held information sessions for stakeholders. The correspondence was not targeted at the project or activity level and did not state under which of the five programs an existing project or activity would fit.21 Nor did it clearly state that existing service providers would need to reapply for funding for existing activities, instead noting that additional funding cannot duplicate existing services. The department provided the ANAO with a sample of emails sent to existing providers prior to the opening of the funding round to advise under which Strategy program their existing activity fit and that service providers were encouraged to consider whether the proposed activity aligned with other programs.

3.10 Of the 114 applicants interviewed by the ANAO, 16 indicated that the process was confusing, 20 indicated that there needed to be more clarity regarding the types of organisations and activities being funded and 18 indicated that the department needed to more effectively engage and communicate changes to organisations. Nineteen submissions to the ANAO also indicated that organisations found it difficult to fit projects within the structure and that it was unclear what activities were being funded. This is consistent with feedback provided to the department. In November 2014, the department identified that over 100 existing service providers with contracts expiring in December 2014 had not applied for funding and that, as a result, there was a risk of service gaps.

3.11 In June 2014, the department had 2961 contracts that required transitioning to the new arrangements under the Strategy. The contract transition arrangements established by the department for June 2014 to December 2014 included:

- honouring existing contracts;

- extending some contracts that were ending on 30 June 2014 for six or 12 months; and

- continuing other activities, such as Indigenous wage and cadet subsidies or other tailored employment grants, under legacy guidelines until the new guidelines were released, and limiting these activities to contracts of one year where possible.

3.12 The department estimated that the transition strategy would commit approximately $347.8 million of the $467.4 million funding available for 2014–15.

3.13 In order to make additional funding available for new activities, the Minister agreed to the department offering contract extensions with reduced funding of five per cent or more. The department advised the ANAO that the value of contract extensions was $130 million.

3.14 In November 2014, when timeframes for the grant funding round process were extended, the department estimated that over 1200 funding applicants were not contracted beyond December 2014. The Minister agreed to the department extending the assessment process to avoid service delivery gaps. Six month extensions were offered to providers with contracts expiring in December 2014, and 12 month extensions (to December 2015) were offered for some school-based activities. In both May and December 2014, the department advised existing service providers that contracts would be extended within a month of the contract expiring.

3.15 Feedback reported to the department indicated that delays in announcements affected existing services because of uncertainty about which activities would be continued, and some organisations reported losing staff due to the delays in finalising funding.22

Service delivery gaps following the grant funding round

3.16 The department identified that gaps in service delivery would emerge from July 2015 after it made the initial announcement of grant funding round recipients in March 2015. The department advised the Minister that the service delivery gaps had emerged because:

- providers had not applied for funding;

- providers received a large reduction in funding on the department’s recommendation and the subsequent level of approved funding would result in reduced levels of service;

- providers were not initially recommended for funding by the department in the assessment process;

- the department’s recommended funding reductions would result in reductions in employment;

- services were not originally assessed as high need by the department; and

- the department made administrative errors.

3.17 The department identified these gaps in March, May, June and November 2015, with all but one identified before the end of June 2015.23 As a result, the department re-briefed the Minister, entered into new contracts and adjusted funding amounts in existing contracts. The gap-filling exercise was completed in November 2015 and the total value of changes made to the original recommendations was an increase of $240 million.

Did the regional network support communities to have a key role in designing and delivering local solutions through a partnership approach?

The regional network did not have sufficient time to develop regional investment strategies and implement a partnership model, which were expected to support the grant funding round.

3.18 The Australian Government’s intent under the Strategy was for Indigenous communities to ‘have the key role in designing and delivering local solutions to local problems’.24 The primary mechanism by which the department engages with Indigenous communities is through its regional network of offices and staff distributed around Australia.

3.19 The regional network was designed to support working in partnership with Indigenous communities to tailor action and long-term strategies to achieve outcomes and clear accountability for driving Australian Government priorities on the ground. The regional network is made up of 37 regional offices, across 12 defined regions. The regions reflect similarities in culture, language, mobility and economy, rather than state and territory boundaries. The Indigenous Advancement Strategy Guidelines 2014 (the guidelines) state that the regional network would be established in July 2014, with a 12–18 month transition period. The department advised that the regional network was established in March 2015.

3.20 A key feature of the Strategy’s investment approach was to support regionally focused solutions through the development of regional strategies. The department envisaged that regional strategies would: map the region’s profile against priority indicators (for example, school attendance); identify the key policy and geographic areas that would have the greatest impact on improving outcomes; and propose strategies to improve outcomes and the measures by which these strategies would be assessed. Regional strategies were also intended to reflect community-identified priorities and inform government investment decisions following consultation with Indigenous communities.

3.21 Regional strategies were not developed to support the 2014–15 grants funding round due to time constraints. Instead, the department created simplified regional profiles to inform investment. Each regional profile contained demographic data and statistics about the disadvantage of the region’s Indigenous populations and information relevant to each of the five Strategy programs. There is limited evidence that regional profiles were considered in the grant assessment process. As at August 2016, two years after the commencement of the regional network, the department has drafted but not finalised regional strategies.

3.22 In July 2014, the department planned to develop a Network Performance and Accountability Strategy, setting out the key performance indicators and reporting requirements for regional network staff. The department did not develop the proposed strategy. To monitor the performance of the regional network, the department required regional network staff to report against regional strategies and provide regular reports to the Minister.

3.23 The department advised that it has provided the Minister with dashboard reports since August 2015. The reports include a short local issues section that, until January 2016, focused on the Community Development Program and Remote School Attendance Strategy. The dashboard reports provide a reflection of activity within the regions, but do not meaningfully report on the performance of the regional network.

Community involvement in designing and delivering local solutions

3.24 The department established a series of criteria for assessing grants. One specifically considered community consultation and participation, and another related to an applicant’s understanding of need in a community.

3.25 To date, the main funding opportunity under the Strategy has been through the grant funding round. In considering funding applications received in the grant funding round, the department:

- assessed applications against the five published selection criteria and then a moderated score was presented to the Grant Selection Committee25 (the committee) as a single score. The committee did not receive scores against individual selection criteria;

- allocated each application a need score based on assessment of the regional need for the project which was presented to the Grant Selection Committee. The need score did not always record a supporting rationale that explained the basis of the score or how it directly related to need;

- assessed applications at a national level against the merits of all other applications;

- did not contact applicant referees to ascertain the extent of their support for projects in their community; and

- did not contact applicants or engage with communities to discuss the impact of partial funding on the original projects.

3.26 The department intended that the regional network would partner with Indigenous communities to design and deliver local solutions to local problems. The regional network contributed local knowledge to the funding round through the determination of a need score and participation on the committee.26 The regional network was also responsible for negotiating funding agreements once the Minister had approved funding. However, the extent to which the regional network could adopt a partnership model during the administration of the grant funding round was limited because of the short timeframes involved in the application, assessment and negotiation process. The department advised the ANAO that, given the time constraints, a partnership approach was unrealistic during the grant funding round.

3.27 Outside of the funding round, communities could also apply for funding under the demand-driven process.27 While demand-driven applications were considered at a regional network level, the department’s administrative arrangements state that program owners are responsible for making funding recommendations within a program and the Minister is the ultimate decision-maker. To support a local solution through the demand-driven process, the department’s process required that the proposal was submitted through the regional network to the program area in national office for consideration. The demand-driven process was not supported by a consistent internal process, grants investment strategy, clear budget or guidance on what could be funded. The regional network reported issues with the demand-driven process. The issues included a lack of clear articulation of program intent and transparency around the available funding, as well as program area specific considerations. Further, decisions about demand-driven project applications were often time-consuming.

3.28 Stakeholder feedback provided to the ANAO indicated that community involvement in the Indigenous Advancement Strategy was limited.28 Thirteen submissions expressed a concern that local solutions were not supported, 22 indicated that there was a lack of consultation with Indigenous communities and nine stated that there was still a top-down approach to service delivery. When the ANAO asked applicants what changes they would like to see, the two most common responses were greater partnership and collaboration with Indigenous communities to design solutions (40 applicants) and a bottom up approach to service delivery (31 applicants).

Recommendation No.1

3.29 The Department of the Prime Minister and Cabinet ensure that administrative arrangements for the Indigenous Advancement Strategy provide for the regional network to work in partnership with Indigenous communities and deliver local solutions.

Entity response: Agreed.

3.30 This recommendation is consistent with steps already taken and ongoing work to ensure administrative arrangements for the Indigenous Advancement Strategy optimise opportunities for the department’s regional network to work in partnership with Indigenous communities and to deliver local solutions.

3.31 Regional network staff had an integral role in delivering information on the roll out of the Indigenous Advancement Strategy and the grant funding round. Approximately 80 information sessions were held in August and September 2014 with key regional stakeholders including potential applicants. These sessions by necessity focused on explaining the key elements of the Strategy and the grant funding round given the implementation timeframes. With a maturing of the Indigenous Advancement Strategy this role is being strengthened and extended.

3.32 Regional network staff also played a key part in assessing grant round applications and applying local knowledge to the assessment process. Regional Assessment Teams were established in network offices to consider all applications which had projects proposed for delivery within their region. These teams drew on the known current service footprint and considered local issues relevant to each application.

3.33 In March 2016 the department issued revised Indigenous Advancement Strategy Guidelines. These provide a stronger role for regional network staff in supporting organisations to develop proposals, particularly through the new community-led grants process. The revised Guidelines also include strengthened assessment criteria that relate to Indigenous community support for proposals.

3.34 The regional network has an extensive on-the-ground presence through 37 offices in capital cities, regional and remote locations, supplemented by a direct presence in approximately 75 communities. As such, it is integral to the effective implementation of the Indigenous Advancement Strategy. The network places senior staff close to communities and has specialist officers who lead direct engagement with communities: Government Engagement Coordinators and Indigenous Engagement Officers (IEOs). IEOs live in their community, speak the Indigenous language(s) used by the local community and use their knowledge of the community and language to help government understand local issues and to ensure community feedback is heard. The regional network supports active engagement with communities and stakeholders to explain government policies, identify and implement tailored local solutions to improve outcomes for Indigenous Australians and monitor funded projects and results achieved.

4. Grants Administration

Areas examined

This chapter examines whether the Department of the Prime Minister and Cabinet’s administration of grants supported the selection of the best projects to achieve the outcomes desired by the Australian Government, complied with the Commonwealth Grants Rules and Guidelines and reduced red tape for providers.

Conclusion

The department’s grants administration processes fell short of the standard required to effectively manage a billion dollars of Commonwealth resources. The basis by which projects were recommended to the Minister was not clear and, as a result, limited assurance is available that the projects funded support the department’s desired outcomes. Further, the department did not:

- assess applications in a manner that was consistent with the guidelines and the department’s public statements;

- meet some of its obligations under the Commonwealth Grants Rules and Guidelines;

- keep records of key decisions; or

- establish performance targets for all funded projects.

Areas for improvement

The ANAO has made two recommendations aimed at ensuring consistency between the department’s internal assessment process and the advice provided to applicants and improving the quality of the advice provided to the Minister for Indigenous Affairs.

The ANAO suggests that the Department of the Prime Minister and Cabinet explore options for implementing an effective grant management system that: facilitates effective and efficient management of the Indigenous Advancement Strategy; streamlines the process by which applicants engage with the department; is consistent with the grant assessment and performance monitoring processes implemented by the department; and supports decentralised approaches and greater regional network engagement.

Did the published guidelines and application process support applicants to apply for funding?

The department developed Indigenous Advancement Strategy Guidelines and an Indigenous Advancement Strategy Application Kit prior to the opening of the grant funding round. The guidelines and the grant application process could have been improved by identifying the amount of funding available under the grant funding round and providing further detail on which activities would be funded under each of the five programs. Approximately half of the applicants did not meet the application documentation requirements and there may be benefit in the department testing its application process with potential applicants in future rounds.

The guidelines

4.1 The Indigenous Advancement Strategy Guidelines 2014 (the guidelines) and the Indigenous Advancement Strategy Application Kit (the application kit) were the key references for applicants applying for funding under the Indigenous Advancement Strategy (the Strategy).29 The guidelines described the five general selection criteria of the Strategy, which addressed the extent to which applicants understood the needs of the target group or area, would achieve outcomes and value for money, could show experience or capacity in both program delivery and achieving outcomes, and fostered Indigenous participation.

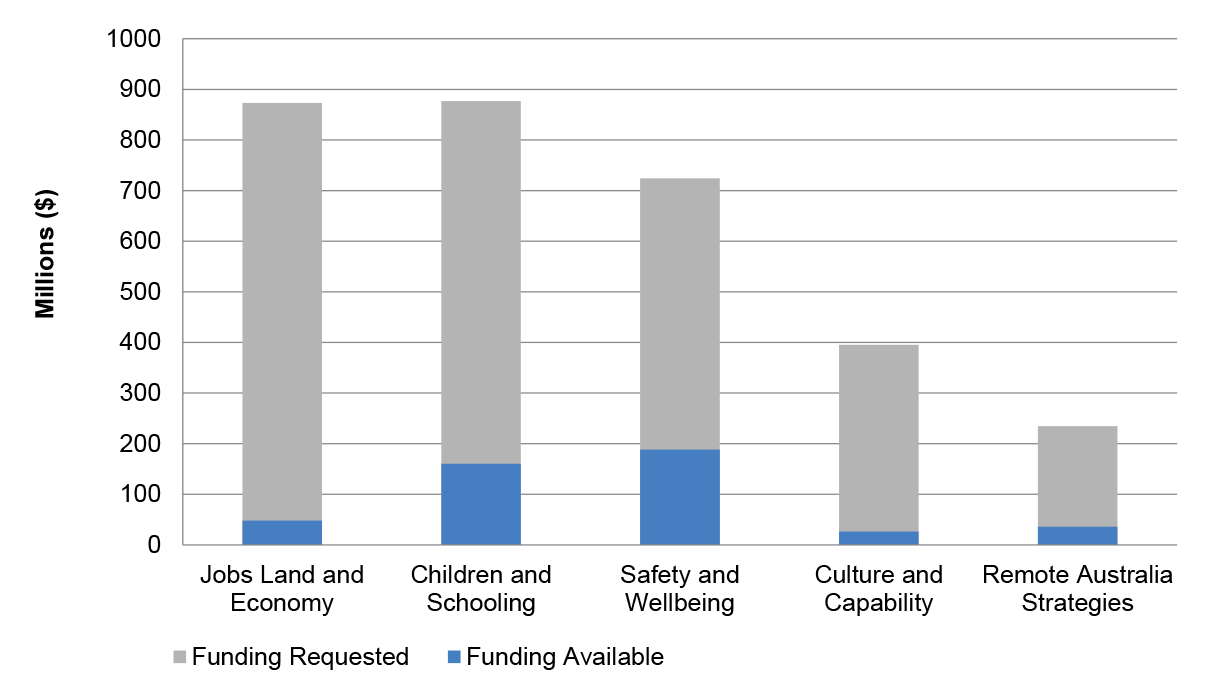

4.2 The guidelines stated that the Australian Government had committed $4.8 billion over four years to the Strategy. The application kit stated that $2.3 billion was available over four years, primarily through the initial grant funding round. The Department of the Prime Minister and Cabinet (the department) did not publicly communicate how available funding would be distributed between the five programs. Figure 4.1 illustrates the distribution of funding between programs for the 2015–16 financial year for the grant funding round, relative to the amount of funding sought by applicants.

Figure 4.1: Funding requested against funding available, by program, 2015–16

Source: ANAO analysis.

4.3 As funding was allocated to specific programs30, the program for which an applicant chose to apply was a factor in determining the amount of funding that could be received. Publicly communicating available funds would have been of benefit to both applicants and the department, assisting: applicants to appropriately scope activities relative to the funds available; and the department to manage applicants’ expectations of funding success.

4.4 The guidelines could have been improved by providing further detail and clarity to support applicants in identifying the most suitable program for which to apply. The department had mapped legacy programs to the new program structure, but this information was not included in the guidelines. Further, the language used is complex; simpler language would assist in making the guidelines more accessible for first-time applicants.31

4.5 Applicants provided feedback to the ANAO and the department that a lack of clarity regarding available funding and eligible program activities complicated the application process. Following the grant funding round, a departmental review indicated that there was insufficient clarity surrounding activities that were eligible for funding, for which program an applicant should apply, and the amount of funding available.32 The department advised that it consulted with stakeholders on the guidelines; however, as noted in Chapter 2, the department did not keep records of these meetings. As such, the ANAO is not able to confirm that the department tested the guidelines with stakeholders to identify concerns prior to the application process commencing.33

Application process

4.6 The ANAO examined the manner in which applicants could submit applications to the department, and the associated requirements for application forms and documentation. The application kit stated that applicants could apply for Strategy grants via email, or by providing a hard-copy application form. The department advised the ANAO that timeframe pressures and limitations of existing systems prevented the development of an online grant management system.34

4.7 Applicants were required to submit a single application form for funding under the Strategy, regardless of the number of initiatives for which funding was sought.35 The application kit also established a series of requirements for application documentation including word limits36, maximum page counts, attachments and application size. The department advised that 1233 (50 per cent) of the 2472 applications received were assessed as non-compliant against these requirements.37
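The non-compliance rate reported in paragraph 4.7 can be recomputed from the two counts quoted there. The following is a minimal sketch only; the function name `share_per_cent` is hypothetical and is not drawn from any departmental system.

```python
def share_per_cent(part: int, whole: int, places: int = 1) -> float:
    """Return `part` as a percentage of `whole`, rounded to `places` decimals."""
    if whole <= 0:
        raise ValueError("whole must be a positive count")
    return round(100 * part / whole, places)


# Counts quoted in paragraph 4.7 (as advised by the department).
non_compliant_applications = 1233
applications_received = 2472

rate = share_per_cent(non_compliant_applications, applications_received)
print(f"Non-compliant: {rate} per cent")  # just under half of all applications
```

Rounded to a whole number, the result matches the "50 per cent" figure stated above.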

4.8 The department did not test the application form, requirements and process with applicants prior to the opening of applications. Of the 108 applicants that provided feedback to the ANAO about this issue, 44 per cent (47 applicants) rated the difficulty of the application process as high, and 19 per cent (21 applicants) as medium.

Were department staff provided with adequate guidance and training to achieve consistency in the assessment process?

The department provided adequate guidance to staff undertaking the assessment process. The department developed mandatory training for staff, but cannot provide assurance that staff attended the training.

4.9 The department developed guidance for staff involved in the assessment process, in the form of process diagrams and documents, an overall assessment plan, and a probity plan. These documents described roles and responsibilities, and expected outputs. The department also established an internal email address that staff could contact with questions and concerns regarding probity matters.

4.10 The written guidance was adequate to support staff to undertake the assessment process, but could be improved by providing additional clarity about how individual projects on the same application could be differentiated.38 Departmental staff were responsible for identifying separate projects on the single application form submitted by applicants, as the application form did not provide a means for applicants to identify separate projects themselves. In around 90 per cent of instances, the two assessing staff identified the same number of projects on application forms. For 9.3 per cent of the applications, the two assessors identified different numbers of projects. For half of these applications, the number of projects identified by each assessor differed by two or more, and the greatest difference in projects identified in an application was 19.

4.11 The department advised the ANAO that verbal guidance regarding distinguishing projects on an application was provided in training and by assessment panel chairs to ensure assessors had assistance where required and to maintain the integrity of assessments. No evidence of this guidance or these discussions was provided to the ANAO.

4.12 The department required that staff assessing grant applications attend a mandatory training session and it developed a series of training materials. The department was unable to provide a training register or other documentation recording which staff had attended training. As such, no assurance is available that all staff assessing grant applications completed the mandatory training requirements.39

Was the assessment process consistent with the process outlined in the Strategy’s grant guidelines?

The department’s assessment process was inconsistent with the guidelines and internal guidance. Applications were assessed against the selection criteria, but the Grant Selection Committee did not receive individual scores against the criteria or prioritise applications on this basis. The basis for the committee’s recommendations is not documented and so it is not possible to determine how the committee arrived at its funding recommendations.

4.13 As noted in Chapter 1, funding under the Strategy was available through multiple mechanisms including open competitive tender and demand-driven funding processes. The bulk of Strategy funding was made available via the competitive tender process. The funding mechanisms were assessed via different processes.

Assessment of open competitive tender applications

4.14 The guidelines and application kit specified that applications for funding would be assessed by a series of assessment panels according to five equally-weighted general selection criteria (discussed in paragraph 4.1). The department manually registered all applications received in a database developed specifically for the grant assessment process. The database and several additional spreadsheets, stored on network drives without adequate version controls, were used to manage the assessment process.

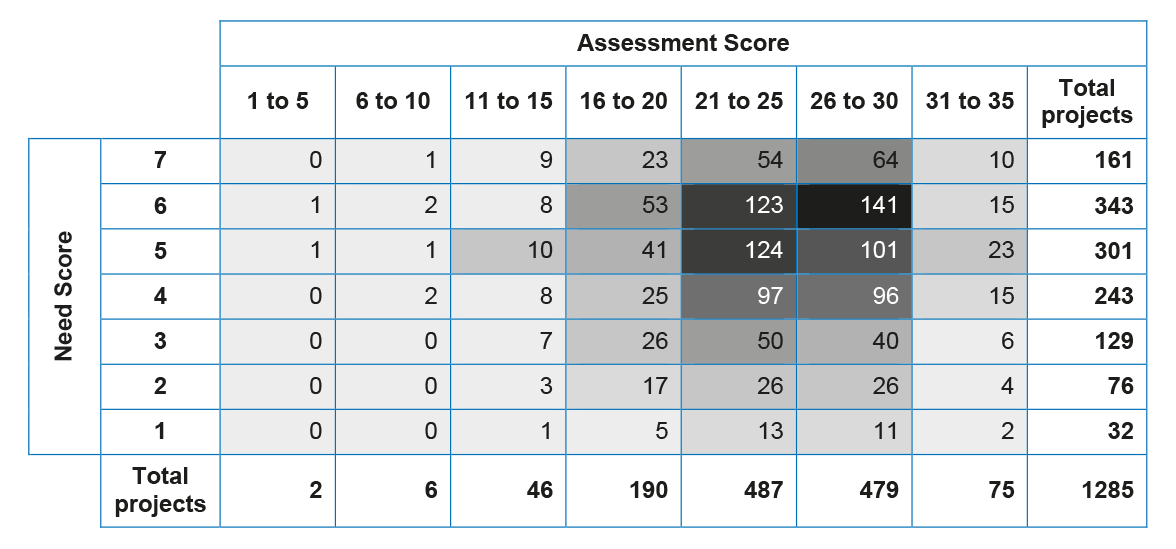

4.15 Following registration, applications were assessed for compliance against the requirements outlined in the guidelines. The department advised the ANAO and Parliament that, due to the high rates of non-compliance, these requirements were waived. The ANAO conducted a limited review of 60 of the 1233 non-compliant applications and found that the majority were assessed in accordance with the standard assessment process. However, the department did not waive the compliance requirements for all applications. The ANAO identified four applications that were not assessed40, and a further two instances were identified in which organisations submitted multiple compliant applications for different projects, but only one application progressed to an assessment. Applications that did proceed through the compliance process were assessed and assigned:

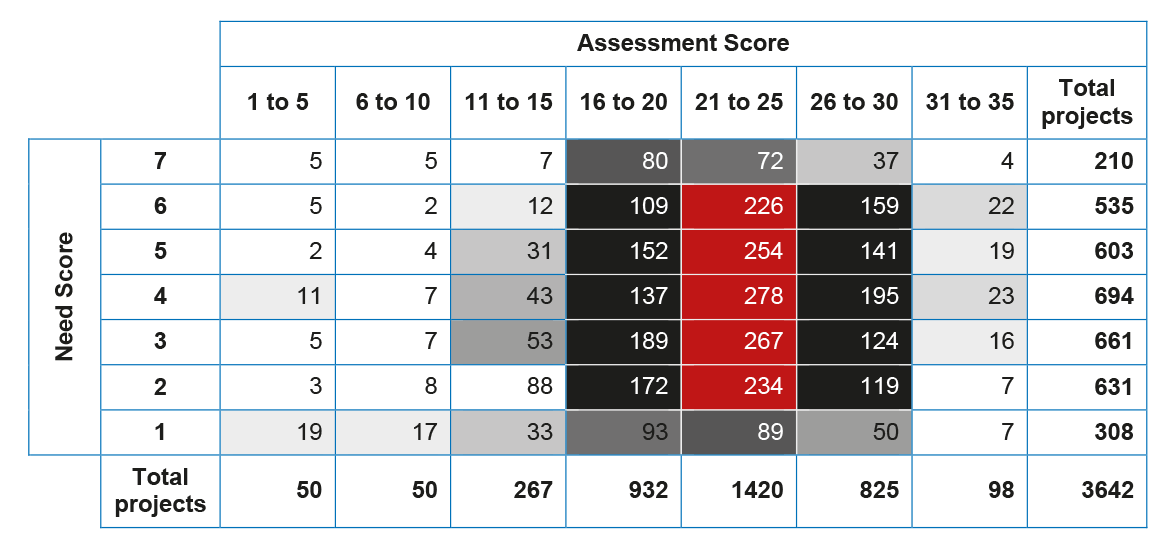

- an assessment score (up to 35 points), assessed by two staff from one of seven assessment panels against the five Strategy selection criteria; and

- a need score (up to seven points), assessed by regional network staff as to whether the project demonstrates a response or solution to demand and need within the region (as discussed in Chapter 3).41
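The two scores described above can be illustrated with a short sketch. It rests on stated assumptions: that equal weighting of the five criteria means each criterion is capped at seven points (35 divided by five), and that the assessment score is the simple sum of the criterion scores. The report does not describe how the two assessors' scores were combined, so the sketch models a single assessor only. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

N_CRITERIA = 5        # five equally weighted selection criteria (paragraph 4.14)
MAX_ASSESSMENT = 35   # maximum assessment score (paragraph 4.15)
MAX_NEED = 7          # maximum need score (paragraph 4.15)
# Assumption: equal weighting implies the same cap for every criterion.
MAX_PER_CRITERION = MAX_ASSESSMENT // N_CRITERIA


@dataclass(frozen=True)
class ApplicationScores:
    assessment: int   # out of 35, against the selection criteria
    need: int         # out of 7, assigned by regional network staff


def score_application(criterion_scores: Sequence[int], need_score: int) -> ApplicationScores:
    """Validate one assessor's criterion scores and the need score."""
    if len(criterion_scores) != N_CRITERIA:
        raise ValueError(f"expected {N_CRITERIA} criterion scores")
    if any(not 0 <= s <= MAX_PER_CRITERION for s in criterion_scores):
        raise ValueError(f"criterion scores must be 0..{MAX_PER_CRITERION}")
    if not 0 <= need_score <= MAX_NEED:
        raise ValueError(f"need score must be 0..{MAX_NEED}")
    # Assumption: the assessment score is the sum of the criterion scores.
    return ApplicationScores(assessment=sum(criterion_scores), need=need_score)


example = score_application([7, 5, 6, 4, 3], need_score=5)
print(example)
```

The two scores are kept separate in the sketch because the text above does not state that they were added together.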