Design and Implementation of the Energy Efficiency Information Grants Program

Summary

Introduction

1. The objective of the Energy Efficiency Information Grants (EEIG) program is to empower small and medium size business enterprises (SMEs) and community organisations to make informed decisions about energy efficiency. It is to achieve this objective by funding industry associations and non-profit organisations to deliver practical and tailored energy efficiency information to help SMEs and community organisations respond to the impact of increasing energy costs.

2. The EEIG operates as a competitive merit-based grant program. Funding of $40 million is available over the period 2011–12 to 2014–15. Of this amount, $34.9 million is available for grants, with the remaining $5.1 million allocated to the Department of Climate Change and Energy Efficiency (DCCEE) to administer the program.

3. The first funding round was completed in May 2012, with 28 projects funded to the value of $20.05 million. This left $14.9 million for the second funding round, which opened to applications in October 2012.[1]
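As a simple cross-check, the funding figures in paragraphs 2 and 3 can be reconciled with a few lines of arithmetic. This is only an illustration. The constant names and the `funding_summary` function are hypothetical, and the amounts are the ones quoted above, expressed in thousands of dollars.

```python
# Amounts quoted in paragraphs 2 and 3, in thousands of dollars.
# Integers are used to avoid floating-point rounding surprises.
TOTAL_FUNDING = 40_000      # $40 million over 2011-12 to 2014-15
GRANT_POOL = 34_900         # $34.9 million available for grants
ADMIN_ALLOCATION = 5_100    # $5.1 million allocated to DCCEE for administration
ROUND_ONE_AWARDED = 20_050  # $20.05 million awarded to 28 projects in round one


def funding_summary() -> dict:
    """Hypothetical helper that cross-checks the program funding figures."""
    remaining = GRANT_POOL - ROUND_ONE_AWARDED
    return {
        # The grant pool and the administrative allocation should sum to the total.
        "split_consistent": GRANT_POOL + ADMIN_ALLOCATION == TOTAL_FUNDING,
        # Funds left for the second round: 14_850, i.e. about $14.9 million.
        "remaining_for_round_two": remaining,
        # Administrative allocation as a percentage of total funding: 12.75.
        "admin_share_pct": 100 * ADMIN_ALLOCATION / TOTAL_FUNDING,
    }


if __name__ == "__main__":
    for key, value in funding_summary().items():
        print(f"{key}: {value}")
```

Run as a script, this reports the following:

- The $34.9 million grant pool and the $5.1 million administrative allocation sum to the $40 million total.
- $14.85 million remained for the second round, which the summary rounds to $14.9 million.
- Administration accounts for 12.75 per cent of total funding. This is consistent with the "some 12.7 per cent" cited in paragraph 23.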

4. To assist it to design and implement the program, DCCEE engaged a probity adviser and contracted a three-person program advisory committee (PAC). DCCEE assessed the eligibility of the 207 applications received for the first funding round. These applications sought some $123 million in grant funding. The PAC then assessed the 188 eligible applications against the three published merit criteria.[2] The PAC’s merit assessment report was included in the department’s subsequent briefing to the Minister for Climate Change and Energy Efficiency (the Minister). Acting on the department’s recommendations, the Minister approved funding for the 28 recommended projects, which were publicly announced on 17 May 2012.
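Footnote 2 sets out the weights attached to the three published merit criteria. The summary does not state the scale the PAC used. Assuming each criterion is scored on the same scale, a weighted merit score for application $i$ could be expressed as below. $E_i$, $D_i$ and $V_i$ are hypothetical labels for the scores on project effectiveness, project design and management, and value for money respectively.

```latex
S_i = 0.6\,E_i + 0.2\,D_i + 0.2\,V_i, \qquad 0.6 + 0.2 + 0.2 = 1
```

Ordering eligible applications by $S_i$ is one way a merit ranking could be tied directly to the published criteria. It is offered here only as an illustration. This summary does not describe it as the method that DCCEE or the PAC applied.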

Audit objectives, criteria and scope

5. The objective of the audit was to assess the effectiveness of the design and implementation of the EEIG program. The focus of the audit was the preparation for, and conduct of, the first funding round of the program.

6. The audit examined the program against relevant policy and legislative requirements for the expenditure of public money and the grants administration framework (including the Commonwealth Grant Guidelines (CGGs)).

Overall conclusion

7. The EEIG program was one of a suite of measures announced by the Government in July 2011 to encourage energy efficiency. The 28 applications approved for funding through the first round are expected to assist a range of industries, with around half the grant recipients having a national membership or national focus. The average value of the funding awarded was $708 000, with the value of the approved projects ranging from $145 000 to $1.87 million (excluding the Goods and Services Tax). Funding agreements have been signed with each of the 28 approved applicants, and each of these projects is contracted to be completed by no later than 30 June 2015.

8. DCCEE was well resourced to design and implement the EEIG, and the design of the program was effective. Of note was that program governance arrangements were established and a range of internal documentation to support the administration of the program was developed. In addition, drawing on a sound foundation of stakeholder consultation, a robust set of program guidelines was developed and published. These guidelines clearly identified the program eligibility requirements. They also outlined the merit assessment criteria that would be applied, including the weighting of each of the three criteria, as well as the respective roles that DCCEE, the PAC and the Minister would have in the assessment and approval of applications.

9. DCCEE also planned a sound approach to undertaking application eligibility checks, assessing the merit of eligible applications and ranking them for the Minister’s consideration. In this respect, the contractual arrangements with the three PAC members set out the information DCCEE would provide to the PAC to inform its work. The contracts also set out the records that were to be made by the PAC members of their merit assessment of each eligible application, and required these records to be provided to the department once they were completed. DCCEE was also to provide secretariat support to the PAC, including by preparing the minutes of PAC meetings.

10. A recurring theme in ANAO’s audits of grants administration is the importance of agencies implementing programs in a manner which accords with published program guidelines, and treats all applicants equitably. In this context, notwithstanding the good work undertaken by the department to establish the program, there were significant shortcomings in the conduct of the assessment process for applications. In particular, the merit assessment process departed in important respects from that outlined in the program guidelines, and inadequate records were made and retained to demonstrate that each application was assessed in accordance with the published eligibility and merit criteria.[3] Of particular note in these respects was that:

- most eligible applications were allocated to one of four merit categories, with each of the 28 applications allocated to the highest merit category (termed ‘outstanding’) then placed into one of six ranking bands (in case the Minister did not wish to approve all 28 ‘outstanding’ applications). Neither process was foreshadowed by the program guidelines. In addition, the allocation of the 28 ‘outstanding’ applications to a ranking band used a process that did not relate to the score each application had achieved in terms of the published merit criteria;

- DCCEE destroyed records made by each PAC member of the assessment of each eligible application against the three published merit criteria, notwithstanding that the contractual arrangements specified that these were official records and that DCCEE had made no arrangements to otherwise record the scoring by PAC members.[4] The minutes of the PAC meetings were also too brief to provide any insight into the merit assessment and scoring of each eligible application; and

- fortunately for DCCEE, one PAC member made and retained his own electronic record of some of the scoring results, but there is no record, official or otherwise, of how each of the 28 recommended (and approved) applications had been assessed and scored against the three published merit criteria.

11. DCCEE has advised ANAO that it has confidence in the PAC, the department sought and followed probity advice, and it has no reason to believe that the successful projects were not the most competitive against the merit criteria. Nevertheless, it is not possible to be satisfied that the most meritorious eligible applications (in terms of the published merit criteria) were recommended to the Minister for approval. The shortcomings in the implementation of the program have also meant that DCCEE was unable to provide unsuccessful applicants with feedback against the merit criteria as to the reasons their application was considered less meritorious than those recommended and approved for funding.

12. A key factor cited by DCCEE for the departures from the assessment approach documented in the program guidelines was that the number of applications received was significantly greater than had been planned for. In this context, it is common for competitive, applications-based grant programs to be over-subscribed. However, DCCEE had not developed strategies for how it would respond to receiving significantly more applications than it had anticipated. This was notwithstanding that there had been a high level of interest in the program prior to applications opening, which should have foreshadowed to DCCEE an increased likelihood that the program would be over-subscribed to a significant extent.

13. While the assessment process was deficient, DCCEE’s briefing of the Minister on the assessment outcomes for the first funding round was timely and comprehensive. Nevertheless, the briefing informed the Minister that the assessment processes had met the requirements of the CGGs as well as guidance included in ANAO’s grants administration Better Practice Guide, when this was clearly not the case. In light of the findings of this audit, the department also now agrees that this was not the case. However, consistent with the CGGs framework and better practice guidance, DCCEE provided the Minister with a clear recommendation that he award funding to those 28 applications categorised by the PAC as ‘outstanding’. The Minister agreed with the department’s recommendation.

14. Recognising that a second EEIG funding round is currently underway, and that DCCEE is administering other competitive grant programs, the ANAO has made four recommendations. Two are focused on improved record keeping concerning, respectively, the eligibility checking process and the merit assessment of eligible applications. Another relates to enhancing the governance arrangements where an advisory committee or panel is involved in the assessment of grant applications. ANAO has also recommended that, for each future competitive applications-based grant program, DCCEE develop strategies to better manage the risk of funding rounds being over-subscribed. There would also be merit in DCCEE updating the program guidelines for the second round to clearly outline the approach that the department will be adopting for merit assessment.[5]

Key findings by chapter

Program framework (Chapter 2)

15. DCCEE established a robust framework for the design and management of the EEIG. This included the department contracting a probity adviser as well as a program advisory committee to assess eligible applications against the published merit criteria.

16. The establishment of the PAC enabled specialised expertise and knowledge to be brought to bear in the assessment of the merit of eligible applications. While there were some delays with the contracts being signed, the contractual arrangements provided a sound basis for the PAC to undertake, with support from DCCEE, the merit assessment process in a transparent and accountable way that accorded with the published program guidelines.

17. However, in establishing the PAC, DCCEE did not recognise that the task the PAC was performing involved directly informing a decision by the Minister to commit to the expenditure of public money, and the PAC was therefore bound by the requirements of the CGGs.[6] In addition, the arrangements to record the deliberations and decisions of committee meetings, and the handling of conflicts of interest, did not deliver the transparent and accountable process required by the CGGs.

Submission of applications and eligibility assessment (Chapter 3)

18. The department had estimated that it would receive around 30 to 40 applications for EEIG funding under the first round, with program planning based on this level of response. In the event, 207 applications were received. This situation, together with the unavailability of electronic systems to support the application process and the reasonably tight timeframes set by the department to complete eligibility assessments, meant that the extent of eligibility and merit assessment work required was significantly greater than had been planned for.

19. The eligibility requirements for the first round were clearly grouped and identified in the program guidelines. A range of mandatory documentation was also required as part of the application. Applicants were assisted in addressing these requirements through an application guidance document specifically highlighting the questions in the application form relevant to the eligibility assessment as well as where further documents were required as part of the application.

20. Of the 207 applications received, 188 were assessed as eligible and proceeded to the merit assessment stage of the program. In total, 19 applications (representing nine per cent of applications) were assessed as ineligible. This level of ineligible applications is not atypical and indicates that the eligibility criteria were effectively communicated to potential applicants through the program guidelines and other documentation developed and published by DCCEE.

21. The department used a checklist to assess the completeness and eligibility of applications. Initial assessments were then subject to more senior review (termed a ‘QA check’). As may normally be expected, changes in eligibility/ineligibility occurred with these reviews. However, the reasons for these changes were not well documented by DCCEE. Consequently, it is not possible to accurately determine from departmental records whether all possible eligibility issues raised during the initial assessment stage were addressed and resolved through the quality assurance process.

Merit assessment (Chapter 4)

22. The program guidelines had outlined that the second stage of the assessment process (following the department’s assessment of application eligibility) would involve the PAC assessing eligible applications and allocating a merit ranking to each. However, there were significant differences between this guidance and the way the merit assessment and ranking of eligible applications was undertaken.[7] This involved an initial broad assessment of applications and their allocation to the categories of ‘outstanding’, ‘very good’, ‘good’ and ‘poor’[8], with only the ‘outstanding’ applications subject to detailed assessment. Further, the detailed assessment of ‘outstanding’ applications used an additional scoring regime that was not clearly linked to the merit assessment criteria, to then rank projects in an order of merit.

23. A key reason cited by the department for the changes to the merit assessment stage was the unexpectedly large number of eligible applications received. However, while some 12.7 per cent of total program funding was made available to DCCEE for its administrative costs, relatively few resources[9] were spent on the merit assessment process, notwithstanding its crucial importance to any competitive merit-based grant program. In addition, less time than had been planned[10] was spent on assessing applications. Subsequent delays in the development and signing of funding agreements for the approved projects indicate that the overall program implementation timetable could have reasonably allowed greater time for the assessment of eligible applications.

24. A further feature of the assessment process for the EEIG program was the absence of records to support and explain the assessment and scoring of eligible applications in terms of the published merit criteria. Some important records made by the PAC members of their initial assessment and scoring of each application were destroyed by DCCEE[11], and the department did not otherwise document the results of the merit assessment process. Consequently, it is not possible to be satisfied that the published merit criteria were applied in a consistent and robust manner, or for DCCEE to demonstrate that the most meritorious applications were identified and recommended to the Minister for approval.

Advice to the Minister and funding decisions (Chapter 5)

25. A timely and comprehensive briefing on the outcome of the funding round was provided to the Minister. The briefing included a clear recommendation from DCCEE that the Minister should approve those 28 applications categorised as ‘outstanding’ by the PAC. The briefing to the Minister on the outcomes of the funding round also stated that the process had met the requirements of the CGGs as well as guidance included in ANAO’s Better Practice Guide. This was clearly not the case. In light of the findings of this audit, the department also now agrees that this was not the case.

26. The Minister agreed to the funding recommendation he received from DCCEE.

Funding distribution, feedback to applicants and signing of funding agreements (Chapter 6)

27. The first EEIG funding round resulted in funding being distributed in a way that was consistent with the program seeking to achieve a diffusion of benefits across a range of business and community sectors and into regional settings. In terms of electoral distribution, the application and assessment approach adopted, and the resulting briefing of the Ministerial decision-maker, reduced the risk of the grant funding decisions being influenced by electoral considerations, and there was no evidence of any such bias in the approval of funding.

28. An area where DCCEE’s approach did not reflect sound grants administration practice related to unsuccessful applicants not being provided with reasons for the non-awarding of funding. The department provided eligible but unsuccessful applicants with no insights as to how they had been assessed against the published merit criteria. This was a consequence of the department retaining some records of the assessment of applicant eligibility but destroying[12] the PAC’s records of the merit assessment process and not otherwise making adequate records of the assessment outcomes.

Summary of agency response

29. Formal comments on the proposed audit received from DCCEE are set out below. Additional comments received from DCCEE and the PAC were also considered in preparing the final audit report.

The department welcomes the audit report as an important contribution to the department’s endeavours on continuous improvement in program delivery. As the department prepares for the receipt of applications for the second round of the EEIG program, the findings of this audit will be addressed along with other lessons from the delivery of the first round. Consistent with this, the department accepts the recommendations made by the ANAO.

The department welcomes the overarching findings, particularly that the department had ‘... established a robust framework for the design and management of EEIG’ and that ‘The first EEIG funding round resulted in funding being distributed in a way that was consistent with the program seeking to achieve a diffusion of benefits across a range of business and community sectors and into regional settings’.

The department acknowledges the need for improvement in documentation and record keeping, and clarification of the role of the official standing and obligations of independent experts assisting the Commonwealth in merit assessment processes. While the department has confidence in the merit assessment undertaken by the Program Advisory Committee (PAC), the department accepts the need to maintain better records of the PAC deliberations and outcomes of their assessments against individual and overall merit criteria.

Recommendations

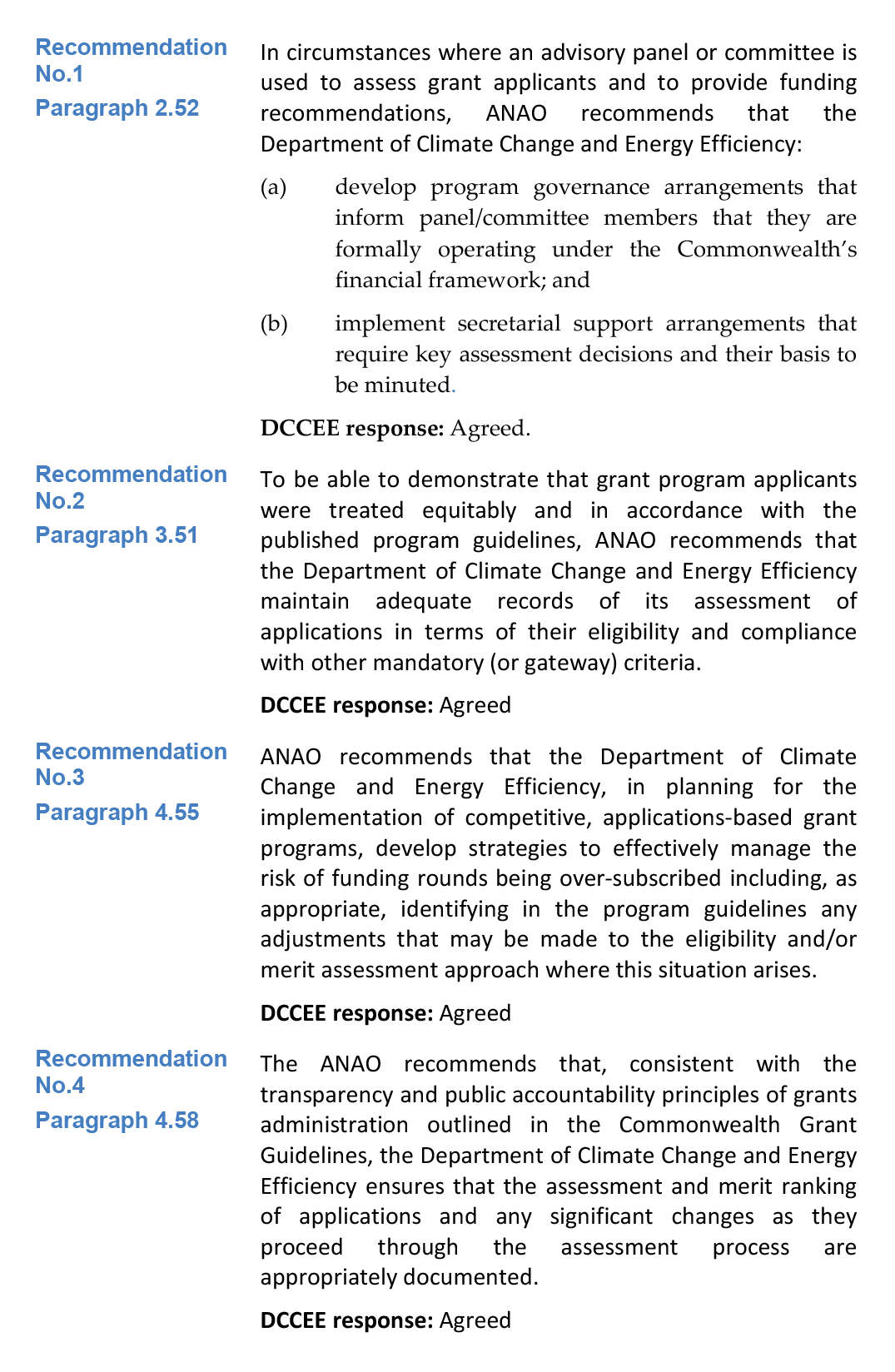

Set out below are ANAO’s recommendations and the Department of Climate Change and Energy Efficiency’s abbreviated responses. More detailed responses are shown in the body of the report immediately after each recommendation.

Footnotes

[1] The second funding round opened to applications on 30 October 2012, with a closing date of 20 December 2012. Successful EEIG applicants are expected to be announced in May 2013.

[2] These criteria were: project effectiveness (weighted at 60 per cent); project design and management (weighted at 20 per cent); and value for money (weighted at 20 per cent).

[3] In this context, a departure from the guidelines refers to processes being applied that were not described in the program guidelines.

[4] The term ‘destroy/destruction’ is used in the Archives Act 1983, and in this context characterises the action DCCEE undertook in relation to PAC merit assessment records.

[5] In November 2012, DCCEE advised ANAO that it will include material concerning the process to be followed by the PAC in the program’s internal procedures document and probity plan for the second round that is currently underway, but that amendments to the published program guidelines will be made at ‘an appropriate point for any future rounds’, notwithstanding that there is not expected to be any funding remaining after the current round.

[6] See further at paragraphs 2.28 to 2.32 of the audit report.

[7] See further at paragraph 4.52 of the audit report for a more detailed summary of the differences between the program guidelines and the way the merit assessment was undertaken.

[8] There were 15 applications that were not allocated to any merit ranking category. See paragraph 4.44 and footnote 76 of the audit report for further details on applications not allocated a merit ranking.

[9] The total fees paid for the work of the PAC in respect of merit assessing 188 eligible applications was $54 199. In addition, the contracted probity adviser performed a limited role in overseeing the application assessment process.

[10] DCCEE’s program timetable had allowed five weeks for the assessment of applications (both for eligibility and merit assessment), and a further week to provide funding recommendations to the Minister. As it eventuated, the entire assessment process was completed in around four weeks.