
Management of the Try, Test and Learn Fund Transition Projects


Audit snapshot

Why did we do this audit?

- The audit provides assurance to Parliament on the effectiveness of the Department of Social Services’ (the department) administration of grants to Try, Test and Learn Fund (TTL Fund) projects that demonstrated promise in reducing long-term unemployment and long-term welfare dependency.

- The projects awarded funding for a further two years were known as the transition projects.

Key facts

- Due to the COVID-19 pandemic, the program objective for the transition projects differed from that for TTL Fund projects, as the transition projects focused on supporting short-term employment outcomes and trialling an outcomes-based funding model.

- Grants were awarded under an ad hoc, closed non-competitive process, with nine providers of TTL Fund projects invited to apply for transition funding.

What did we find?

- The department’s administration of the grants for the transition projects was largely effective.

- There were deficiencies in record keeping relating to program design and funding decisions which reduced the transparency of the department’s decision making.

- The department did not document its consideration of risks associated with the funding model or impacts of the COVID-19 pandemic on project delivery when setting the performance targets for each project and establishing the funding agreements. This reduced the effectiveness of the department’s administration of the funding arrangements.

What did we recommend?

- There were no recommendations to the department.

- The report identifies five opportunities for improvement.

52 projects

funded across eight priority groups in two tranches from the Try, Test and Learn Fund.

$12.6m

funding provided in the 2021–22 Federal Budget for an additional two years of funding for successful Try, Test and Learn projects.

7 projects

awarded grant funding under the program for the Transition Funding for Successful Try, Test and Learn Projects.

Summary

Background

1. In the 2016–17 Federal Budget, $96.1 million was allocated over four years for the Department of Social Services (the department) to establish the Try, Test and Learn Fund (TTL Fund). Fifty-two projects were funded in two tranches between 2016 and 2018 to test policy interventions, try new approaches and build the evidence base for delivering employment outcomes for vulnerable Australians most at risk of long-term unemployment and long-term welfare dependence.1

2. In the 2021–22 Federal Budget, the Australian Government announced $12.6 million in additional funding over two years (transition funding) for successful TTL Fund projects that demonstrated promise in supporting groups of people at risk of long-term welfare dependence into work (transition projects). The funding was announced as one-off, time limited grants.

3. Due to the COVID-19 pandemic, the program objective of the transition funding differed from the two tranches funded under the original program. Specifically, the transition funding focused on supporting employment during a period of economic recovery, providing short-term employment outcomes, and trialling an outcomes-based funding model.

Rationale for undertaking the audit

4. This performance audit was conducted to provide assurance to Parliament on the effectiveness of the department’s administration of grants to TTL Fund projects that demonstrated promise in reducing long-term unemployment and long-term welfare dependency.

Audit objective and criteria

5. The objective of this audit was to assess the effectiveness of the administration of grants for the Try, Test and Learn Fund transition projects.

6. To form a conclusion against the audit objective, the ANAO adopted the following three high-level criteria:

- Was the program well designed?

- Were funding recommendations and decisions made in accordance with the Commonwealth Grants Rules and Guidelines?

- Were appropriate funding agreements established?

Conclusion

7. The department’s administration of the grants for the transition projects was largely effective. Deficiencies in record keeping relating to program design and funding decisions reduced transparency of the department’s decision making.

8. The design of the program and the department’s basis for selecting projects invited to apply for transition funding were largely effective. The department relied on multiple inputs to inform its selection of transition projects. The prioritisation of projects and the weightings of inputs were not documented, which limited the transparency of the decision-making process.

9. Funding decisions were documented and largely compliant with the Commonwealth Grants Rules and Guidelines 2017. The department documented its recommendations to the relevant delegate for the award of funding. However, the recommendations were not supported by a sufficiently documented assessment of grant funding proposals and applications.

10. The funding agreements established for the transition projects were partly effective. The funding agreements outline the performance reporting requirements and an outcomes framework, which includes performance targets tailored to each project. When establishing performance targets, particularly for the first observation period, the department did not document its consideration of the risks associated with each provider’s capacity to transition to a Payments by Outcome (PbO) model of funding, or of service delivery challenges arising from the COVID-19 pandemic. The department made payments to providers even though performance targets were not achieved in the first and second outcome observation periods.

Supporting findings

Program design

11. The department used multiple inputs to inform the selection of projects recommended to be invited to apply for transition funding, including assessments of the projects funded in tranches one and two from the TTL Fund and an outcomes analysis aligned to the new program objective for the transition projects. The department’s use of various ranking and prioritisation frameworks across the inputs reduced the transparency of the selection of projects. There was limited documented rationale to support the selection of two of the nine projects recommended to be invited to apply for funding. There were deficiencies in the department’s implementation of measures for identifying and mitigating conflicts of interest in accordance with the Commonwealth Grants Rules and Guidelines 2017 and departmental procedures (see paragraphs 2.5 to 2.38).

12. The Grant Opportunity Guidelines issued to providers invited to apply for transition funding outlined the program’s governance arrangements and selection processes. The Minister for Social Services agreed to the proposed grant selection process (an ad hoc grant opportunity), to the draft Grant Opportunity Guidelines, and to a departmental official being delegated as the decision maker. The Grant Opportunity Guidelines set out the selection process, which was the submission of a Letter of Invitation with Acceptance. They also set out further requirements on what constituted eligible grant activities, which were tailored to the nature of the transition projects (see paragraphs 2.39 to 2.47).

Funding decisions

13. Nine proposed providers were invited to submit funding proposals. The department did not provide assessment criteria to the proposed providers but did assess each of the funding proposals. Matters of concern or risk identified by the department during the assessment process were recorded. However, the department did not document the closure of these matters following further discussion with the relevant providers, or any risk mitigation strategies it developed. Following the assessment of grant funding proposals and the issue of Grant Opportunity Guidelines, which included eligibility criteria, the department did not document how it was satisfied that providers invited to deliver transition projects had met the eligibility criteria in the Grant Opportunity Guidelines. This included one instance where the waiving of an eligibility criterion was not documented following a provider’s late and incomplete submission (see paragraphs 3.3 to 3.18).

14. Funding decisions were informed by recommendations made to the departmental delegate and were partly supported by documentation to demonstrate compliance with the Commonwealth Grants Rules and Guidelines 2017 (see paragraphs 3.19 to 3.23).

Funding agreements

15. Funding for the transition projects is delivered through a PbO model. The department included an outcomes framework in the grant agreements. The framework included outcomes and associated performance targets linked to the receipt of payments, and performance indicators for assessing the overall performance of the grant activity. The department shared baseline analysis with providers at the time the Grant Opportunity Guidelines were issued to assist with negotiating the outcome performance targets. These performance targets were tailored to the nature of the individual transition projects and were incorporated into the grant agreements with outcomes-based milestone payment schedules. In developing the performance monitoring and outcomes frameworks, the department did not sufficiently recognise provider capacity or the time providers would need to transition to a PbO model. The department made payments to providers even though performance targets were not achieved in the first and second outcome observation periods (see paragraphs 4.5 to 4.87).

16. The department has largely defined appropriate data and information collection requirements and specified these within the individual grant agreements for the transition projects. Providers must submit participant and service delivery information via the department’s Data Exchange. Linking the reported data with Commonwealth datasets, such as welfare payment data, enables the department to assess whether providers achieved the performance targets for payments under the PbO model. However, the department has no formal mechanisms to gain assurance over other performance-related information reported, and representations made, by providers in Activity Work Plan Reports or progress review meetings (see paragraphs 4.29 to 4.44).

Department of Social Services summary response

17. The proposed audit report was provided to the department. The department provided the summary response below. The full response from the department is provided at Appendix 1. The improvements observed by the ANAO during the course of this audit are at Appendix 2.

The objective of the Try, Test and Learn Fund transition projects is to trial an outcomes-based funding model with the view to generate evidence for future policy and program development in the social services sector. The Department of Social Services (the department) is committed to working in partnership with providers delivering the trial projects and ensuring providers continue participating in the program and generating evidence on the efficacy of outcomes-based funding models, fulfilling the purpose of the trial program.

The Department acknowledges the insights and opportunities for improvement outlined in the Australian National Audit Office (ANAO) report on the Management of Try, Test and Learn Fund transition projects.

The Department acknowledges the ANAO’s overall conclusion that the Management of Try, Test and Learn Fund transition projects was largely effective and funding decisions were largely compliant with the Commonwealth Grants Rules and Guidelines 2017.

The Department notes the identified areas of improvement and maintains its strong and continuing focus on providing clear, concise and comprehensive advice to decision-makers, and implementing mechanisms to better support its providers when transitioning to new funding models and enhancing processes for performance monitoring.

Key messages from this audit for all Australian Government entities

Below is a summary of key messages, including instances of good practice, which have been identified in this audit and may be relevant for the operations of other Australian Government entities.

Performance and impact measurement

1. Background

Introduction

1.1 The Australian Priority Investment Approach (PIA) to Welfare2 was developed in response to the Australian Government’s 2015 review of its welfare system, A New System for Better Employment and Social Outcomes.3 A key finding was that an investment approach should be a central feature of Australia’s welfare support system, including targeted investments designed to achieve a return on investment, increase self-reliance of program participants, and reduce the lifetime liability of Australia’s social support system.

Try, Test and Learn Fund

1.2 As part of the PIA and as announced in the 2016–17 Federal Budget, the Department of Social Services (the department) established the Try, Test and Learn Fund (TTL Fund) which provided $96.1 million for projects over four years. The intent of the TTL Fund’s projects was to test policy interventions, try new approaches and build the evidence base for the Australian Government on what works and what does not work to reduce lifetime welfare costs, increase people’s independence from welfare and reduce the risk of intergenerational welfare reliance. It was intended to deliver employment outcomes for vulnerable Australians most at risk of long-term unemployment and long-term welfare dependence.4

1.3 Fifty-two projects were funded across eight priority groups5 in two tranches following an open competitive selection process:

- tranche one — open for application from December 2016 to February 2017, focused on priority groups of young carers, young parents, and students at risk of moving to long-term unemployment or unemployed students; and

- tranche two — open for application from November 2017 to September 2018, focused on priority groups of older unemployed people, working age migrants and refugees, working aged carers and at-risk young people.

1.4 In June 2021, the University of Queensland published the Try, Test and Learn Evaluation, which was commissioned by the department (see paragraph 2.15).6

Transition funding for successful Try, Test and Learn Fund projects

1.5 In the 2021–22 Federal Budget, the Australian Government announced $12.6 million in additional funding over two years (transition funding) for successful TTL Fund projects that demonstrated promise in supporting groups of people at risk of long-term welfare dependence into work (transition projects). The funding was announced as one-off, time limited grants.

1.6 Due to the COVID-19 pandemic, the program objective of the transition funding differed from the two tranches funded under the original program. Specifically, the transition funding focused on supporting employment during a period of economic recovery, providing short-term employment outcomes rather than focusing on longer term outcomes (transition program).

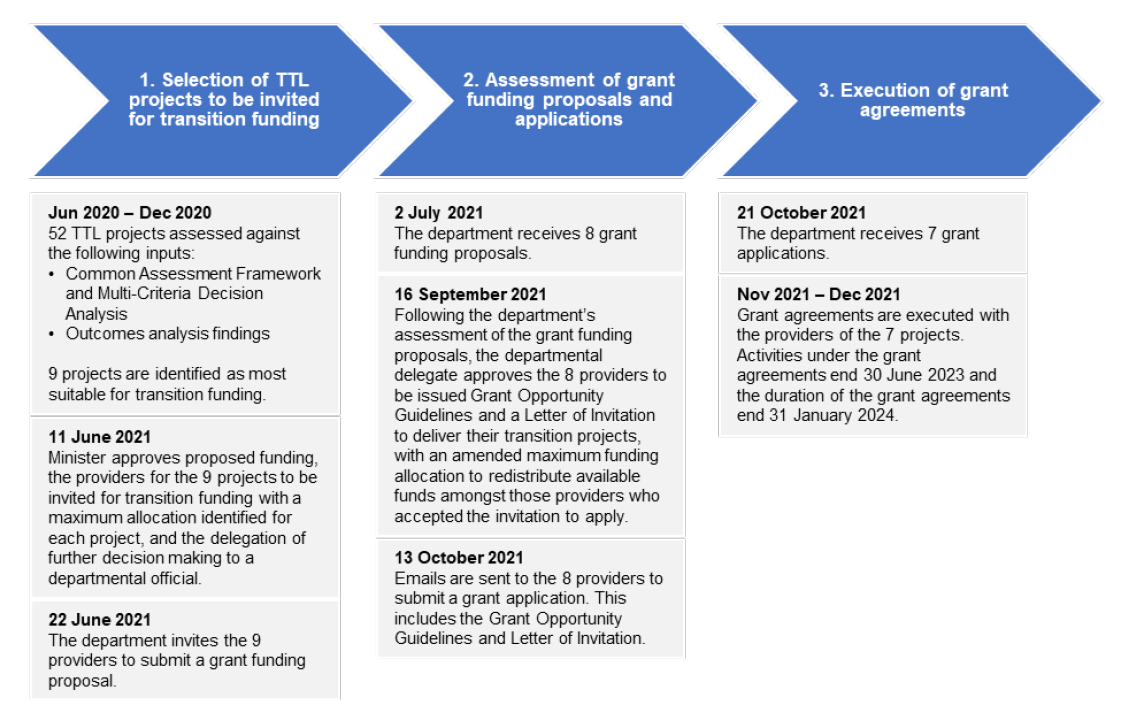

1.7 As part of the transition program, the department used a hybrid funding model which provided a fixed establishment payment (stage one) and then required providers to transition to an outcomes-based funding model (stage two). As illustrated in Figure 1.1, nine TTL Fund project providers were recommended to be invited to apply for transition funding (proposed providers), with the department awarding funding to seven providers.

Figure 1.1: Try, Test and Learn transition projects recommended, assessed, and awarded funding

Source: ANAO analysis of grant agreement, briefs to the Minister for Social Services and to the departmental official delegated as the decision maker.

Rationale for undertaking the audit

1.8 This performance audit was conducted to provide assurance to Parliament on the effectiveness of the department’s administration of grants to TTL Fund projects that demonstrated promise in reducing long-term unemployment and long-term welfare dependency.

Audit approach

Audit objective, criteria and scope

1.9 The objective of this audit was to assess the effectiveness of the administration of grants for the TTL Fund transition projects.

1.10 To form a conclusion against the audit objective, the ANAO adopted the following three high-level criteria:

- Was the program well designed?

- Were funding recommendations and decisions made in accordance with the Commonwealth Grants Rules and Guidelines?

- Were appropriate funding agreements established?

1.11 The audit did not examine the administration of the grants under tranches one or two of the TTL Fund. As the TTL Fund transition projects are funded until 30 June 2023, the audit did not assess the effectiveness of the projects awarded funding.

Audit methodology

1.12 To address the audit objective, the audit team:

- reviewed relevant submissions and briefings to the Australian Government;

- examined documentation relating to the department’s processes for identifying projects invited to apply for funding, assessment of funding proposals, recommendations for the award of funding and development of grant arrangements; and

- met with relevant departmental staff.

1.13 The audit was conducted in accordance with ANAO Auditing Standards at a cost to the ANAO of approximately $218,000.

1.14 The team members for this audit were Mark Tsui, Kerrie Nightingale, Joel Gargan and Alexandra Collins.

2. Program design

Areas examined

The ANAO examined whether the program for the award of funding to successful projects from tranches one and two of the Try, Test and Learn Fund was well designed, particularly whether there was an appropriate basis for the selection of projects invited to apply for transition funding and whether Grant Opportunity Guidelines outlined governance arrangements and selection processes.

Conclusion

The design of the program and the department’s basis for selecting projects invited to apply for transition funding were largely effective. The department relied on multiple inputs to inform its selection of transition projects. The prioritisation of projects and the weightings of inputs were not documented, which limited the transparency of the decision-making process.

Areas for improvement

The ANAO has highlighted one area for improvement for the department aimed at improving transparency and accountability in decision-making, particularly in circumstances where the selection of providers relies on an evaluation based on multiple sources of input.

2.1 The framework governing the administration of Commonwealth grants is provided by the Public Governance, Performance and Accountability Act 2013 and articulated in the Commonwealth Grants Rules and Guidelines 2017 (CGRGs). The department has responsibility for operating the Community Grants Hub (CGH)7 on behalf of the Australian Government. The department must implement practices and procedures that are consistent with the overarching intent of the principles outlined in the CGRGs to demonstrate proper8 use and management of public resources for which it is responsible.

2.2 The CGRGs outline the different options for grant selection processes which include open competitive, targeted or restricted competitive, non-competitive (open)9, demand-driven, closed non-competitive and one-off to be determined on an ad hoc basis. An ad hoc closed non-competitive process, such as that used for the transition projects, involves providers being invited to submit applications which are assessed individually.

2.3 In the 2021–22 Federal Budget, the Australian Government announced $12.6 million funding over two years (transition funding) for successful Try, Test and Learn Fund (TTL Fund) projects that demonstrated promise in supporting groups of people at risk of long-term welfare dependence into work (transition projects). The funding was announced in May 2021 as one-off, time limited grants.

2.4 The transition funding was administered by an ad hoc closed non-competitive process, with providers selected by the department invited to apply for funding. Ad hoc closed non-competitive grant processes can present a risk associated with the transparency of decision making in the selection of providers invited to apply for grant funding. It is important for the department to maintain records to support the integrity of its decision making, and to ensure its practices and procedures are consistent with the rules and overarching intent of the principles outlined in the CGRGs.

Was there an appropriate basis for the selection of the transition projects invited to apply for funding?

The department used multiple inputs to inform the selection of projects recommended to be invited to apply for transition funding, including assessments of the projects funded in tranches one and two from the TTL Fund and an outcomes analysis aligned to the new program objective for the transition projects. The department’s use of various ranking and prioritisation frameworks across the inputs reduced the transparency of the selection of projects. There was limited documented rationale to support the selection of two of the nine projects recommended to be invited to apply for funding. There were deficiencies in the department’s implementation of measures for identifying and mitigating conflicts of interest in accordance with the Commonwealth Grants Rules and Guidelines 2017 and departmental procedures.

2.5 The department’s multi-criteria decision analysis (MCDA) was one input that the department used in determining which projects funded from the TTL Fund under tranches one and two (see paragraph 1.3) were recommended to be invited to apply for transition funding. Applying the MCDA led the department to identify the top performing projects under the TTL Fund that demonstrated promise. The department also undertook its own categorisation of projects based on the MCDA, analysis of linked Data Exchange (DEX)10 and welfare payment data for TTL participants, and individual evaluations of projects (where available).11 The department’s consolidated analysis is referred to as ‘outcomes analysis findings’.

Multi-criteria decision analysis

2.6 In June 2020, the department engaged the University of Canberra (UC) for ‘assistance to design and undertake a multi-criteria decision analysis of 52 TTL Fund projects and data gap analysis’.

2.7 The request for quotation (RFQ) issued to UC indicates that the department intended to use the outcomes to inform future funding decisions and for UC to work collaboratively with the department to:

draw conclusions about which TTL Fund projects best meet the objectives of the TTL Fund as refined through this process; and, if required assist the [department] to develop recommendations about TTL projects that could be eligible for ongoing funding.

2.8 In March 2023, the department advised the ANAO that, at the time the RFQ was issued to UC, the transition program had not been conceived, and the wording reflects the department’s intention to fund projects that could viably attract funding in their own right from various sources.

2.9 The MCDA was intended to assist in drawing conclusions about which tranches one and two projects best met the objective of the TTL Fund and support the department to develop recommendations about TTL projects that could be eligible for ongoing funding.

Common Assessment Framework

2.10 The UC developed the Common Assessment Framework (CAF) in conjunction with the department through:

- a survey of TTL providers which received funding in tranches one and two to determine their perceptions of the critical success factors informing project outcomes; and

- development of workshop data and tools for use in a co-design workshop with external workshop participants, which included testing the CAF on an example project.

2.11 The CAF included seven criteria against which each project was assessed:

- criterion one assessed the strength of the project’s evidence and the degree of innovation underpinning the project;

- criterion two assessed whether benefits derived from intervention aligned to program objectives;

- criterion three assessed the impact of the project in improving participant capability to transition to education, training and/or employment;

- criterion four assessed the impact of the project in transitioning target groups to education, training and/or employment;

- criterion five assessed the value of the project through quality of reporting and cohort satisfaction;

- criterion six assessed whether the project had achieved targets related to recruiting participants; and

- criterion seven assessed the project’s return on investment, relative to the cost and an orthodox provision of welfare to participants.

2.12 The department undertook project assessments in November 2020 to apply the CAF to the 52 projects from tranches one and two. The MCDA was the outcome of the department’s application of the CAF.

2.13 UC provided detailed input for criteria one and two for all 52 TTL projects to assist the department in applying the CAF and scoring the projects. For each project, UC included the evidence-based assumptions underpinning the project’s goal, analysis of project outcomes, relevant benchmark for comparison and lessons from the project’s delivery. UC developed a five-point ranking system and relevant indicators for use in scoring projects against criteria one and two. The input also included considerations towards a change in the operating context, and how each project’s theory of change and mode of delivery may be impacted due to the COVID-19 pandemic. Appendix 5 outlines a summary of these considerations for the projects that were awarded grant funding.

2.14 In the department’s application of the CAF to the 52 projects12, there was no documented evidence of the department’s consideration of the detailed criteria one and two inputs provided by UC. The department recorded a one-to-five ranking in the MCDA scoring sheet for each project, with no associated documentation supporting the rationale for the scores. The criteria one and two inputs were relevant given the change in the objective of the transition program to focus on short-term employment outcomes (see paragraph 1.6) and the varying social distancing measures implemented by State and Territory Governments at the time, particularly in relation to the hospitality and construction sectors (see Case study 1).
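The report does not describe how the one-to-five scores across the seven CAF criteria were combined into a ranking. The Python sketch below is therefore an illustration only, not the department’s or UC’s method: it assumes a simple weighted-sum aggregation with hypothetical equal weights, and the criterion labels, project identifiers and `rationales` field are illustrative assumptions. It shows how recording a rationale alongside each score lets a reviewer identify which scores are undocumented.

```python
from dataclasses import dataclass, field

# Short labels for the seven CAF criteria in paragraph 2.11 (labels are illustrative).
CRITERIA = (
    "evidence_and_innovation",
    "benefit_alignment",
    "capability_impact",
    "transition_impact",
    "reporting_and_satisfaction",
    "recruitment_targets",
    "return_on_investment",
)


@dataclass
class ProjectAssessment:
    project_id: str                # hypothetical identifier
    scores: dict[str, int]         # one 1-5 score per criterion
    rationales: dict[str, str] = field(default_factory=dict)  # reason recorded for each score


def weighted_total(assessment: ProjectAssessment, weights: dict[str, float]) -> float:
    """Combine criterion scores as a weighted sum (one common MCDA aggregation)."""
    total = 0.0
    for criterion in CRITERIA:
        score = assessment.scores[criterion]
        if not 1 <= score <= 5:
            raise ValueError(f"{assessment.project_id}: {criterion} score {score} is outside 1-5")
        total += weights[criterion] * score
    return total


def undocumented_scores(assessment: ProjectAssessment) -> list[str]:
    """Criteria that were scored but have no recorded rationale."""
    return [c for c in CRITERIA if not assessment.rationales.get(c, "").strip()]


def rank(assessments: list[ProjectAssessment], weights: dict[str, float]) -> list[tuple[str, float]]:
    """(project_id, total) pairs, highest first; equal totals keep their input order."""
    totals = [(a.project_id, weighted_total(a, weights)) for a in assessments]
    return sorted(totals, key=lambda pair: pair[1], reverse=True)


# Hypothetical equal weighting: the report does not say how criteria were weighted.
EQUAL_WEIGHTS = {c: 1 / len(CRITERIA) for c in CRITERIA}

# Usage with two made-up projects.
project_a = ProjectAssessment("PROJECT-A", dict.fromkeys(CRITERIA, 3))
project_b = ProjectAssessment(
    "PROJECT-B",
    dict.fromkeys(CRITERIA, 4),
    rationales={"evidence_and_innovation": "Cites a published evaluation (illustrative)"},
)
ranking = rank([project_a, project_b], EQUAL_WEIGHTS)
```

Here PROJECT-B ranks first, and `undocumented_scores` flags six of its seven scores, and all seven of PROJECT-A’s, as lacking a recorded rationale.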

2.15 The MCDA working sheet does not list the University of Queensland Try, Test and Learn Evaluation — Interim Report dated 9 April 2020 (interim evaluation report) as an input (data source) for assessment of any of the seven criteria within the CAF. The department did not use the interim evaluation report as an input to the MCDA as the evaluation was at the program level and not in relation to individual projects.

MCDA Report

2.16 In November 2020, UC and the department developed a report (the MCDA report) which ranked ‘The top 10 performing “Try, Test and Learn” projects’. The final MCDA report also set out a data gap analysis with reference to the seven criteria of the CAF. The data gap analysis identified the absence of indicators and monitoring data to allow for the assessment of quality and innovation within the projects, delineation of the different types of outcomes achieved and benchmarking of costs of delivery.

2.17 The MCDA report provides insights regarding five broader lessons for the TTL Fund program from tranches one and two, including:

- co-development needs to be fit-for-purpose, with insufficient emphasis having been placed on the ‘learn’ aspect;

- the department, in future, seeking out ‘tried and tested innovators’13, as projects from tranches one and two were not considered to have been particularly innovative;

- selecting a smaller number of projects;

- adopting monitoring and evaluation methods that are agile and appropriate i.e., allow for fast learning or failure which is likely to be more achievable with a smaller number of projects; and

- ensuring cross government collaboration.

2.18 The report also contained recommendations for how the department might enhance the quality of outcomes-driven project management. The report highlighted lessons for program delivery arising from providers failing to properly assess the risk of not recruiting sufficient numbers of participants within the target cohort (see Case study 1).

2.19 Case study 1 highlights some of the challenges which were detailed in UC’s criteria one and two input (see paragraph 2.13) and continued to manifest in one of the transition projects.

Case study 1. Example transition project

The project was ranked 36th in the MCDA and UC’s input highlighted that the project:

UC’s input also stated that ‘[the] operating context has changed in significant ways, whether [the] current theory of change will be impacted by COVID-19 remains an empirical question’. Despite these MCDA outcomes, the department did not document its consideration of these factors and whether they were sufficiently mitigated before selecting the project for transition funding. Following concerns raised by this project, particularly with regard to recruiting project participants, there was a mutual agreement to terminate the funding arrangement at 30 June 2022.

Outcomes analysis findings

2.20 Following receipt of the MCDA report, the department undertook further categorisation of projects based on the results of the MCDA, analysis of linked DEX and welfare payment data for TTL participants, and individual evaluations of projects where available. This was referred to as ‘outcomes analysis findings’ and used a different ranking and prioritisation framework to the MCDA.

2.21 As part of the outcomes analysis findings, the department categorised projects into four categories:

- projects showing significant promise14;

- projects demonstrating some promise, but further time and/or evidence may be required;

- projects not yet demonstrating promise; and

- projects that have not demonstrated promise and are unlikely to do so.

2.22 Seven of the nine projects recommended to be invited to apply for transition funding were categorised as showing significant promise. Two of the nine projects were categorised as demonstrating some promise, but further time or evidence or both may be required.

2.23 Five of the seven projects which were categorised as showing significant promise were also ranked in ‘The top 10 performing “Try, Test and Learn” projects’ in the MCDA report. Neither of the two projects categorised as demonstrating some promise was included in this list. The department’s ‘outcomes analysis findings’ did not document why the remaining five projects in ‘The top 10 performing “Try, Test and Learn” projects’ from the MCDA were not selected, why two projects were selected which did not feature in this list, or why two projects the department assessed as showing significant promise were not selected.
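The unexplained differences described above arise whenever a final selection is drawn from several inputs that rank or categorise projects differently. A simple reconciliation of this kind can be sketched in Python. The function name, input labels and project identifiers below are hypothetical, the data are toy values that do not reflect the actual TTL Fund projects, and the sketch simplifies by recording one rationale per project rather than one per input.

```python
# Hypothetical reconciliation check: for each selection input, list the projects
# whose final selection outcome diverges from that input and has no recorded rationale.
def unexplained_divergences(
    selected: set[str],
    favoured_by_input: dict[str, set[str]],
    rationales: dict[str, str],
) -> dict[tuple[str, str], list[str]]:
    gaps: dict[tuple[str, str], list[str]] = {}
    for input_name, favoured in favoured_by_input.items():
        for label, projects in (
            ("selected_but_not_favoured", selected - favoured),
            ("favoured_but_not_selected", favoured - selected),
        ):
            # Simplification: one rationale per project, whichever input it diverges from.
            missing = sorted(p for p in projects if not rationales.get(p, "").strip())
            if missing:
                gaps[(input_name, label)] = missing
    return gaps


# Toy data only; these identifiers do not correspond to any actual project.
selected = {"P1", "P2", "P3"}
favoured_by_input = {
    "mcda_top_ranked": {"P1", "P2", "P4"},
    "significant_promise": {"P1", "P3", "P5"},
}
rationales = {"P3": "Selection rationale recorded (illustrative)"}

gaps = unexplained_divergences(selected, favoured_by_input, rationales)
```

In this toy run, P3’s divergence from the top-ranked list is covered by its recorded rationale, while P4, P2 and P5 are flagged. An empty result would mean every divergence from every input had a documented reason.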

2.24 Additional categorisation of projects then occurred based on how directly the project activities were connected to securing employment or education outcomes:

- direct connection: immediate to short term;

- intermediate connection: short to medium term; and

- indirect connection: project designed not to deliver in the short to medium term.

2.25 Six of the nine projects recommended to be invited to apply for transition funding were categorised as having a direct connection and the remaining three were categorised as having an intermediate connection.

2.26 The department subsequently documented internal talking points on how the projects recommended for funding were identified. These used different framing, combining the two categorisations referred to above into: projects demonstrating significant impact on employment in the short term; and projects demonstrating some impact on employment in the short term.

2.27 In a brief to the Minister for Social Services in May 2021, the department advised that project eligibility was based on people at greatest disadvantage in the current labour market, which at the time was still impacted by the COVID-19 pandemic. There was no documentation of how the department formed this advice.

Selection of projects recommended for transition funding

2.28 In the brief to the Minister, the department advised that nine transition projects had been identified to be invited to apply for transition funding based on the MCDA and the department’s ‘outcomes analysis findings’15 (see paragraphs 2.20 to 2.27). The department determined these nine projects to be the most suitable for transition funding. A summary of the projects that were subsequently funded is provided at Appendix 4. The brief and supporting attachments provided factual statements about each of the inputs and how they were used to identify the projects selected for transition funding. The brief also contained the proposed maximum total funding to be allocated to each project.

2.29 The Minister agreed to the department inviting the providers of the nine projects to apply for grant funding. The Minister also agreed to delegate the approval of the final Grant Opportunity Guidelines and the execution of grant agreements to a departmental official.

2.30 Two of the nine projects listed in the Minister’s brief had limited documented rationale to support their selection as projects to be invited to apply for transition funding. These two projects had relatively low rankings in the MCDA (not featuring in the ‘top 10’ list) and were categorised as projects ‘demonstrating some promise’ in the outcomes analysis findings. The absence of documentation of the decision-making process reduced the transparency of the selection of projects for transition funding, particularly given the different ranking and prioritisation frameworks used across the key inputs.

2.31 The department advised the ANAO in November 2022 that these two projects were elevated to ensure that projects aligned to the vulnerable cohorts of carers and young parents were selected for transition funding. The department’s advice was inconsistent with its records, as the projects ultimately awarded funding did not achieve sufficient or equitable coverage across the priority cohorts. While there were no other carer-related projects assessed as demonstrating some promise, there were two other young parent-related projects assessed as demonstrating some promise, the same assessment ranking as the projects selected to apply for funding. In addition, no other projects were elevated to provide coverage of older unemployed people, and there was no rationale for the significant coverage that three of the seven projects provided to migrants and refugees.

Opportunity for improvement

2.32 Where multiple inputs (with different criteria and factors considered) are used to evaluate a project, the weighting for each input could be documented in an evaluation plan, particularly where the scope or goal of a program changes over time.

Managing conflicts of interest in the selection process

2.33 The CGRGs include seven key principles for grants administration, one of which is probity and transparency. The CGRGs specify that accountable authorities should implement appropriate mechanisms for identifying and managing potential conflicts of interest for grant opportunities.

2.34 The department has developed policies and procedures relating to grant programs such as the Ad hoc and One-Off Grant Opportunity Toolkit (Ad Hoc Grant Toolkit). This guidance requires all departmental personnel (including contractors, external advisors, and consultants) involved in or exposed to information regarding a grant opportunity to complete a conflict of interest form as soon as they are involved in the process. It also requires the department to maintain a conflict of interest register for the grant opportunity.

2.35 The Ad Hoc Grant Toolkit also states that conflicts of interest should be managed in accordance with the processes outlined in the department’s Conflict of Interest Policy, which requires that all new employees and contractors complete a conflict of interest disclosure form at the time of commencing employment with the department. The majority of the conflict of interest forms assessed during the audit were general employment declarations and did not specifically relate to the TTL Fund projects or the grants awarded from the transition funding (see paragraphs 3.16 to 3.18).

2.36 The MCDA and the outcomes analysis findings were key components of the grant program design and project selection process. Contrary to its policy requirements, the department did not maintain a conflict of interest register recording declarations made by the members of the Expert Group involved in the MCDA, or by the departmental staff involved in the ‘outcomes analysis findings’ or in advising the Minister.

2.37 In the absence of the required conflict of interest register, the ANAO sought to identify those involved in the selection process from documentation reviewed as part of the audit. In doing so, the ANAO identified that conflict of interest declarations had not been provided by three departmental employees. One of these employees was part of the Expert Group, while the other two were involved in coordinating activities for the CAF/MCDA process. There was also no documented output from the MCDA Project Assessment sessions16 attended by departmental personnel (comprising members of the Expert Group and program support) to indicate whether conflicts of interest were considered or addressed at the start of each assessment session.

2.38 The department advised that conflict of interest forms were not required for the MCDA process, as it was primarily undertaken to help the department advise the Minister on which TTL Fund projects were most promising (as opposed to selecting projects for transition funding). One member of the Expert Group executed a deed relating to their work on the TTL Fund that contained clauses on identifying and managing conflicts of interest. The contractor from UC also executed a Deed of Confidentiality that included conflict of interest clauses.

Do the guidelines clearly outline program governance arrangements, selection criteria and assessment processes?

The Grant Opportunity Guidelines issued to providers invited to apply for transition funding outlined the program’s governance arrangements and selection processes.17 The Minister agreed to the proposed grant selection process (an ad hoc grant opportunity), to the draft Grant Opportunity Guidelines, and to a departmental official being delegated as the decision maker. The Grant Opportunity Guidelines set out the selection process, which consisted of submitting a Letter of Invitation with Acceptance. They also set out further requirements on what constituted eligible grant activities, tailored to the nature of each transition project.

2.39 Paragraph 4.4(a) of the CGRGs states that ‘Officials must develop grant opportunity guidelines for all new grant opportunities, and revised guidelines where significant changes have been made to a grant opportunity’.

2.40 Grant Opportunity Guidelines for an ad hoc or one-off grant opportunity are not required to be submitted to the Department of Finance, nor are they required to be published on the GrantConnect or Commonwealth Grant Hub websites. Applicants invited to apply for an ad hoc closed non-competitive grant opportunity receive an email invitation with the Grant Opportunity Guidelines and information on how to apply.

2.41 The department’s Ad Hoc Grant Toolkit requires that Grant Opportunity Guidelines include the purpose or description of the grant, the objectives, the selection process, any reporting and acquittal requirements, and the proposed evaluation mechanisms.

Grant Opportunity Guidelines

2.42 In accordance with paragraph 11.5 of the CGRGs, the Minister approved the one-off, time limited grants to be awarded to the selected transition projects under an ad hoc process. The Minister agreed to:

- a departmental official being the decision maker to approve the final Grant Opportunity Guidelines, enter into agreements and commit relevant money under section 32B of the Financial Framework (Supplementary Powers) Act 1997 (FF(SP) Act).

2.43 For each of the nine projects approved by the Minister in June 2021, the department sent the proposed provider a letter of invitation and Grant Opportunity Guidelines relevant to the selected project.

2.44 The Grant Opportunity Guidelines outlined the program governance arrangements. A senior departmental official (the decision maker) was to make the final decision to approve the grant and matters associated with approval of the proposed payment-by-outcomes model, associated payments and terms and conditions of the grant. As the Grant Opportunity Guidelines were issued after the department assessed the funding proposal received from each proposed provider, they did not include details of that assessment process (see Figure 1.1 and paragraph 3.3).

2.45 The grant selection process required only that the proposed provider was the one named in the Grant Opportunity Guidelines, that it would deliver the eligible grant activities, and that it submit the required Letter of Invitation with Acceptance to the department.

2.46 The following eligibility criteria were set out in the Grant Opportunity Guidelines:

- listed as an invited [proposed provider] (namely a provider who submitted a grant funding proposal in response to the department’s initial invitation to apply for funding);

- not a [proposed provider] on the National Redress Scheme’s website on the list of ‘Institutions that have not joined or signified their intent to join the Scheme’;

- willing to transition to an outcome-based funding model during the grant agreement period;

- willing to explore with the department methods and opportunities for securing alternative funding sources18; and

- willing to work with the department throughout the life of the grant to determine project effectiveness and identify lessons learnt.

2.47 Based on the CGRGs and departmental requirements outlined in the Ad Hoc Grant Toolkit, the Grant Opportunity Guidelines developed by the department addressed the minimum requirements.

3. Funding decisions

Areas examined

The ANAO examined whether funding decisions were informed by an assessment of grant funding proposals and applications, and whether recommendations were made to the decision maker, in accordance with the Commonwealth Grants Rules and Guidelines 2017.

Conclusion

Funding decisions were documented and largely compliant with the Commonwealth Grants Rules and Guidelines 2017. The department documented its recommendations to the relevant delegate for the award of funding. However, the recommendations were not supported by a sufficiently documented assessment of grant funding proposals and applications.

Areas for improvement

The ANAO has highlighted one area for improvement for the department aimed at improving transparency of the decision making for the award of grant funding.

3.1 Within the legislative framework governing decisions to spend public money, the Commonwealth Grants Rules and Guidelines 2017 (CGRGs) contain a number of specific decision-making requirements to support the seven key grants administration principles.19 Consistent with those principles, the ANAO assessed the department’s compliance with the decision-making requirements of the CGRGs and its internal procedures.

3.2 The department’s Ad-hoc or One-Off Grant Opportunity Toolkit (Ad Hoc Grant Toolkit) outlines its internal procedures for administering grants in accordance with the CGRGs. It was developed by the department’s Community Grants Hub to support policy areas in implementing grant programs. It outlines factors to consider when designing an ad hoc or one-off grant opportunity and provides a high-level overview of the grant opportunity process. There is also a grant documentation checklist to support compliance with CGRG requirements.

Were grant funding proposals and applications assessed against appropriate criteria and the Grant Opportunity Guidelines?

Nine proposed providers were invited to submit funding proposals. The department did not provide assessment criteria to the proposed providers but did assess each of the funding proposals. It recorded matters of concern or risk identified during the assessment process, but did not document how these matters were closed, whether through further discussion with the relevant providers or through risk mitigation strategies it developed. Following the assessment of grant funding proposals and the issuing of Grant Opportunity Guidelines, which included eligibility criteria, the department did not document how it was satisfied that the providers invited to deliver transition projects had met those eligibility criteria. This included one instance where the waiving of an eligibility criterion following a provider’s late and incomplete submission was not documented.

3.3 Nine proposed providers were invited by the department, via email on 22 June 2021, to submit a two-page grant funding proposal for the transition program. The invitation highlighted the expectation that providers would transition to other forms of sustainable funding and would commit to transitioning to an outcomes-focussed funding approach (referred to by the department as a Payment-by-Outcomes model).

3.4 In requesting grant funding proposals, the department asked proposed providers to submit a two-page response which included information relating to:

- the services the provider intended to provide (based on the previously funded TTL project);

- where the provider intended to deliver services;

- known referral pathways for participants;

- proposed eligibility criteria for participants (noting the intention of the program is to assist the more vulnerable into employment);

- the number of participants the provider anticipated providing services to;

- proposed outcomes (and indicative measures) the provider was aiming to achieve and expected timeframes;

- current, or former experience operating under payment by outcomes or similar models (if any);

- what sources of funding or other support the provider currently had available (if any) to the project (for example, corporate sponsorship, philanthropic or in-kind support);

- proposals for where and how the provider might secure alternative funding in the short to medium term; and

- the total funding amount the provider was seeking.

3.5 The request for grant funding proposals stated the responses provided would form the basis of further discussion between the department and providers.

3.6 Eight of the nine proposed providers submitted grant funding proposals in response to the department’s request. The one provider that did not submit a grant funding proposal declined to participate in the transition program and did not provide a reason.

Assessment of grant funding proposals

3.7 The department’s assessment of the eight grant funding proposals was recorded in an assessment rubric. The assessment rubric was structured to include the following information.

- Funding amount — the funding amount approved by the Minister in comparison to the funding amount sought by the provider.

- Delivery location — the geographic location where the prior project under the TTL Fund was delivered in comparison to the proposed delivery location under the transition program.

- Participant numbers — the number of participants in the prior project under the TTL Fund in comparison to the proposed number of participants under the transition program.

- Participant eligibility — the eligibility requirements of participants in the prior project under the TTL Fund in comparison to the proposed eligibility requirements of participants under the transition program.

- Delivery service model — whether there were any differences in the proposed delivery service model since the prior project under the TTL Fund.

- Potential to transition to outcomes funding — previous experience in operating under an outcomes framework and whether any difficulty in transitioning may be experienced.

- Outcomes — whether the outcomes proposed by the provider aligned to the outcomes of the transition program.

- Potential to attract alternative funding — whether the provider had other sources of funding and whether it proposed how it could secure alternative funding arrangements.

- Cost per outcome — the cost per outcome in the prior project under the TTL Fund in comparison to the cost per outcome under the transition program.

The matters of concern and risk within these categories that were recorded in the assessment rubric formed the basis of subsequent discussion and negotiation with all providers. The department sought to resolve and close these matters through individual meetings and email correspondence with providers. The outcomes of these communications were not documented in the assessment rubric and were only partly documented in minutes recorded by the department (see Table 3.1).

Table 3.1: Analysis of departmental records supporting provider assessment of the projects awarded funding

| Assessment category | Productivity Boot Camp | Community Corporate | Two Good Foundation | AMA Services (WA) | Australian Migrant Resource Centre | Adelaide Northern Division of General Practice (t/as Sonder Care) | Apprenticeships R Us Limited |
|---|---|---|---|---|---|---|---|
| Funding amount | ▲ | ▲ | ▲ | ▲ | ▲ | ▲ | ▲ |
| Delivery location | ◆ | ▲ | ◆ | ◆ | ◆ | ◆ | ◆ |
| Participant numbers | ▲ | ▲ | ▲ | ◆ | ◆ | ▲ | ▲ |
| Participant eligibility | ▲ | ▲ | ◆ | ▲ | ■ | ◆ | ▲ |
| Delivery service model | ◆ | ◆ | ◆ | ▲ | ■ | ◆ | ▲ |
| Potential to transition to outcomes funding | ◆ | ◆ | ▲ | ▲ | ▲ | ▲ | ▲ |
| Outcomes | ◆ | ◆ | ◆ | ▲ | ▲ | ▲ | ▲ |
| Potential to attract alternative funding | ■ | ◆ | ▲ | ▲ | ▲ | ▲ | ▲ |
| Cost per outcome | ◆ | ◆ | ◆ | ◆ | ◆ | ◆ | ◆ |


Key:

◆ Either the department identified no matter requiring further consideration with the provider, or the closure of a matter it explored further with the provider was sufficiently documented in the assessment rubric.

▲ Closure of a matter identified by the department that required further consideration with the provider was not sufficiently documented in the assessment rubric but closure of the matter was validated in other documentation (e.g., meeting minutes, email correspondence).

■ Insufficient documentation to support that the department closed a matter that it had identified in the assessment rubric that required further consideration with the provider.

Note: The analysis summarised in Table 3.1 relates to the seven providers that were subsequently awarded grant funding and entered into grant agreements with the department following the assessment of grant funding proposals and acceptance of the department’s invitation to deliver services.

Source: ANAO analysis of departmental records including the assessment rubric, minutes from meetings with providers and email correspondence with the providers.

3.8 The email correspondence between providers and the department, and the documented outcomes of individual meetings with providers, did not provide closure for all matters identified by the department during its assessment of grant funding proposals. Specifically, the department issued Grant Opportunity Guidelines to two providers without documenting the closure of matters of concern and risk.

Assessment of grant applications

3.9 Following its assessment of grant funding proposals, the department issued a Letter of Invitation and the Grant Opportunity Guidelines to all eight providers via email on 13 October 2021. Signed acceptances of the Letters of Invitation were due back to the department by 12:00pm (AEST) on 21 October 2021.

3.10 The department’s Ad Hoc Grant Toolkit specifies that ‘Letters of Invitation’ can be used only where the department has received a proposal from a proposed provider and its assessment has determined that the proposal is suitable to fund. The department’s assessment rubric demonstrated that it commenced and undertook an assessment of proposals (see paragraph 3.7). However, the rubric was incomplete and not finalised, as it did not document closure of the assessment process (see paragraph 3.7 and Table 3.1). It therefore did not satisfy the department’s requirements outlined in the Ad Hoc Grant Toolkit.

3.11 The CGRGs and the department’s internal policy, the Ad Hoc Grant Toolkit, do not explicitly require that the grant selection process for ad hoc closed non-competitive grants include assessment criteria. The selection process for ad hoc closed non-competitive grants can be limited to eligibility criteria. The eligibility criteria contained in the Grant Opportunity Guidelines are set out in paragraph 2.46.

3.12 Seven of the eight providers who submitted grant funding proposals went on to submit a funding application to the department. The application for the transition projects consisted solely of a signed acceptance of the Letter of Invitation issued by the department. The one provider that did not submit a grant application declined the invitation because of concerns about the payment-by-outcome (PbO) model and its impact on cash flow if targets could not be met.

3.13 One of the seven providers submitted its grant application both late and incomplete. The department emailed this provider after the closing time specified in the Grant Opportunity Guidelines had passed to follow up the submission of its grant application. The department also noted that the application was incomplete and required resubmission, as the provider had not indicated in it that it accepted the invitation to deliver services.

3.14 The department’s subsequent assessment of eligibility criteria and acceptance of the seven grant applications was not documented. Additionally, there was no documentation of the department waiving the eligibility requirements for the one late submission or having made the departmental delegate aware of the matter.

Opportunity for improvement

3.15 In assessing grant applications and associated grant funding proposals as part of ad hoc closed non-competitive grant processes, the department could document its assessment so that it can demonstrate the delegate’s satisfaction that eligibility criteria have been met and value with relevant money has been achieved, as required by the Commonwealth Grants Rules and Guidelines 2017.

Managing conflicts of interest in the assessment process

3.16 The department did not implement adequate measures to identify and mitigate conflict of interest risks during the assessment of grant funding proposals and applications, as required by the CGRGs’ key principle of probity and transparency and by the Ad Hoc Grant Toolkit (see paragraph 2.37). In addition to not maintaining a conflict of interest register for those involved in selecting the providers invited to apply for funding, the department did not maintain such a register for those involved in assessing funding proposals or grant applications and advising the delegate.

3.17 Four non-Senior Executive Service (SES) departmental officers had not made conflict of interest declarations despite their involvement in the assessment of grant funding proposals and applications. One of these four officers had also been involved in the selection of providers to be invited to apply for funding (see paragraph 2.37).

3.18 The annual declarations of personal interests made by the SES officers involved in the selection and assessment of providers awarded transition funding were reviewed, and no conflicts were identified. An SES officer was the department’s delegated decision maker for the transition program. Although no conflict that ought to have been declared was identified, the ANAO noted that the decision maker had not made a declaration specific to the transition funding program.

Were funding decisions informed by recommendations and appropriately documented?

Funding decisions were informed by recommendations made to the departmental delegate and were partly supported by documentation to demonstrate compliance with the Commonwealth Grants Rules and Guidelines 2017.

3.19 On 11 June 2021, the Minister agreed to the department’s recommendation for nine providers to be invited to apply for funding with a proposed allocation of available funding and for a departmental official to approve the final funding decisions (see paragraph 2.29).

3.20 Following the department’s assessment of grant funding proposals, the commitment of relevant money to invite eight providers to apply for the grant opportunity and issue the Letter of Invitation with Grant Opportunity Guidelines to each provider was approved by the departmental delegate on 16 September 2021. The brief outlined the eight providers and the maximum total funding for each provider.

3.21 In response to a brief providing an update on the transition program, the Minister requested on 29 September 2021 that leftover funding (resulting from one of the nine providers invited to apply for funding declining to submit a grant proposal) be distributed to providers. On 12 October 2021, the departmental delegate approved this revised funding of $256,000 to be allocated equally amongst the eight providers. The brief to the departmental delegate also detailed two other approaches to allocating the surplus funds: a ‘pro-rata’ approach for long-term outcomes, and a payment ‘per long-term outcome’. Neither approach was recommended, with the brief citing significant variation in allocation across providers and, for the payment ‘per long-term outcome’ approach, the potential that funding may not be fully utilised, resulting in an underspend.
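As a worked check of the equal allocation described in paragraph 3.21, the Python sketch below divides the $256,000 equally among the eight providers. It is illustrative only: the function name `equal_allocation` is hypothetical, and the sketch models only the equal-split approach, not the ‘pro-rata’ or ‘per long-term outcome’ alternatives, because the provider-level outcome data those approaches would need are not given here.

```python
def equal_allocation(total_cents: int, providers: int) -> list[int]:
    """Hypothetical helper: split an amount (in cents) equally between
    providers, spreading any indivisible remainder one cent at a time
    across the first providers so that the shares always sum to the total."""
    if providers <= 0:
        raise ValueError("providers must be positive")
    share, remainder = divmod(total_cents, providers)
    return [share + (1 if i < remainder else 0) for i in range(providers)]


# $256,000 allocated equally amongst the eight providers (paragraph 3.21).
shares = equal_allocation(256_000 * 100, 8)
print(f"${shares[0] / 100:,.0f} per provider")  # prints "$32,000 per provider"
```

Working in whole cents avoids floating-point rounding. In this case the amount divides exactly, so each of the eight providers receives $32,000 and no remainder needs to be distributed.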

3.22 Paragraph 4.5 of the CGRGs requires that where an accountable authority or an official approves the proposed commitment of relevant money in relation to a grant, the accountable authority or official who approves it must record, in writing, the basis for the approval relative to the grant opportunity guidelines and the key principle of achieving value with relevant money. Minutes provided to the departmental delegate with funding recommendations recognised this requirement of the CGRGs; however, they provided no rationale to support the department’s achievement of value with relevant money.

3.23 Paragraph 4.7 of the CGRGs states that officials should brief the Minister on the merits of a specific grant and, at a minimum, indicate which grant applications fully met, partially met or did not meet any of the selection criteria.20 The departmental delegate (in lieu of the Minister as decision maker) was not briefed on the grant applications that met the selection criteria, including the one instance of a grant application that was submitted both late and incomplete.

4. Funding agreements

Areas examined

The ANAO examined whether fit-for-purpose performance monitoring and outcomes frameworks were established for the Try, Test and Learn (TTL) transition projects and whether the grant agreements, negotiated between the department and providers, contained appropriate data and information collection requirements to support performance monitoring and the assessment of outcomes.

Conclusion

The funding agreements established for the transition projects were partly effective. The funding agreements outline the performance reporting requirements and the outcomes framework, which includes performance targets tailored to each project. The Department of Social Services (the department) did not document its consideration of the risks associated with each provider’s capacity to transition to a Payment by Outcome (PbO) model of funding, or of service delivery challenges arising from the COVID-19 pandemic, when establishing performance targets, particularly for the first observation period. The department made payments to providers even though performance targets were not achieved in the first and second outcome observation periods.

Areas for improvement

The ANAO has highlighted three areas for improvement for the department aimed at implementing mechanisms to support providers when transitioning to new funding models and enhancing processes for performance monitoring, including mechanisms to verify information and representations made by providers in relation to grant activities, to ensure the department is actively managing performance of providers throughout the life of the grant.

4.1 Under the grant agreements, providers received funding for transition projects in two stages.

- Stage one — During this stage, the department and provider worked together to design and develop performance targets and an outcomes framework.

- Stage two — This stage only proceeded if the department and provider mutually agreed on performance targets and an outcomes framework.

4.2 Stage one included two ‘establishment payments’ for each provider. The first payment was on execution of the grant agreement and the second payment was scheduled for 1 December 2021. Stage two included four ‘outcome payments’ that were to be made subject to a provider’s achievement of agreed outcomes during predefined observation periods.

4.3 The outcome observation periods are defined within the executed grant agreements and are consistent across the transition projects. The date on which grant activities were deemed to commence varied across the projects, from September to November 2021. The three outcome observation periods are summarised in Table 4.1.

Table 4.1: Outcome observation period

| Outcome observation period | Timeframe |
|---|---|
| Outcome observation period 1 | The deemed activity start date to 25 March 2022 |
| Outcome observation period 2 | 26 March 2022 to 7 October 2022 |
| Outcome observation period 3 | 8 October 2022 to 7 April 2023 |


Source: Example executed grant agreement.
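The lengths of the fixed observation periods in Table 4.1 can be checked with Python’s standard `datetime` module. This sketch assumes that both the start and end dates are counted inclusively; the grant agreements’ day-counting convention is not stated here. Period 1 is omitted because it starts on each project’s deemed activity start date, which varied between September and November 2021. The names `PERIODS` and `inclusive_days` are illustrative only.

```python
from datetime import date

# Fixed outcome observation periods from Table 4.1 (period 1 varies by project).
PERIODS = {
    "Outcome observation period 2": (date(2022, 3, 26), date(2022, 10, 7)),
    "Outcome observation period 3": (date(2022, 10, 8), date(2023, 4, 7)),
}


def inclusive_days(start: date, end: date) -> int:
    """Calendar days from start to end, counting both endpoints (an assumption)."""
    return (end - start).days + 1


for name, (start, end) in PERIODS.items():
    print(f"{name}: {inclusive_days(start, end)} days")
# Outcome observation period 2: 196 days
# Outcome observation period 3: 182 days
```

Under this assumption, period 2 spans 196 days and period 3 spans 182 days (26 weeks). Period 3 begins on the day after period 2 ends, so the two periods are contiguous.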

4.4 The department developed a performance monitoring and outcomes framework to give effect to the PbO approach for the grants for the transition projects.21 The framework consisted of specified outcomes measured via performance targets tailored to each project and a suite of performance indicators measured by comparing the project performance against relevant benchmarks. Payments to providers were contingent on the achievement of the performance targets for each of the outcomes during the relevant observation period.

Were fit-for-purpose performance monitoring and outcomes frameworks established for the projects?

Funding for the transition projects is delivered through a PbO model. The department included an outcomes framework in the grant agreements. The framework included outcomes and associated performance targets linked to the receipt of payments, as well as performance indicators for assessing the overall performance of the grant activity. The department shared baseline analysis with providers when the Grant Opportunity Guidelines were issued, to assist with negotiating the outcome performance targets. These performance targets were tailored to the nature of the individual transition projects and were incorporated into the grant agreements with outcomes-based milestone payment schedules. In developing the performance monitoring and outcomes frameworks, the department did not sufficiently recognise provider capacity or the time providers would need to transition to a PbO model. The department made payments to providers even though performance targets were not achieved in the first and second outcome observation periods.

Outcomes framework

4.5 For each provider invited to apply for funding, the department completed a baseline analysis of that provider’s TTL Fund project, covering the period from January 2018 to July 2021 for short-term outcome indicators and from January 2018 to September 2021 for longer-term outcome indicators (see paragraphs 1.2 and 1.3). The baseline analysis set out the proposed PbO performance targets for the transition project grant agreements and compared them with the outcomes achieved by the provider’s TTL Fund project. The initial baseline analysis documents were given to providers in October 2021 to inform grant agreement negotiations.

4.6 The department also developed addendum baseline analysis documents outlining proposed longer-term outcome thresholds and performance targets. These documents were used in negotiations between the department and providers on the performance targets that were included in the final executed grant agreements.22

4.7 The grant agreements included the following outcomes for each transition project:

- participants exit income support due to gaining employment;

- an increase in participants’ employment income; and

- participants resume or commence studies.

4.8 Each outcome has an associated performance target relevant to the transition project. The performance targets consist of the number of participants in the grant activity who are required to achieve the outcome over a specified period, for example the number of participants who have ceased receiving income support for at least 32 days within a six-week period. The data collection and reporting requirements are set out in paragraphs 4.29 to 4.36.

Performance reporting requirements

4.9 In addition to the outcomes and associated performance targets detailed in paragraph 4.4, the grant agreements also defined performance indicators and associated measures which were used to assess overall provider performance and program outcomes. The performance indicators are standardised across the grant agreements and included in Table 4.2 below.

Table 4.2: Performance indicators and measures

| Performance indicator description | Measure |
|---|---|
| Number of clients assisted | Measured using benchmarks, comparing [provider] achievement against similar service providers delivering comparable services, using characteristics defined in the Data Exchange Protocols. |
| Number of events / service instances delivered | As above |
| Percentage of participants from priority target group | As above |
| Percentage of clients achieving welfare outcomes | Measured using benchmarking, comparing [provider] achievement against historical evidence of [provider] services, using Commonwealth welfare payment data. |
| Activities are completed according to scope, quality, timeframes and budget defined in the Activity Work Plan | The department and the Grantee agree that the Activity/ies as specified in the Activity Work Plan have been completed, or are progressed to a satisfactory standard. |

Source: Example executed grant agreement.

4.10 The performance indicator ‘Number of clients assisted’ also relates to the outcomes, because it is used to measure the performance target for the number of individual participants in outcome observation period 3.

4.11 Following the execution of grant agreements, the department and providers agreed output-level detail for the funded activity, documented in an Activity Work Plan (AWP). Examples include where the grant activity is delivered, how workshops are delivered, the activities to identify and secure alternative sources of funding, and the nature of the feedback to be provided as part of evaluation activities. The grant agreements require providers to report progress against the AWP in an Activity Work Plan Report (AWPR), which is to be discussed at quarterly progress review meetings with the department.

Support for the transition project providers

4.12 On 24 June 2021, the department engaged Nous Group through an existing panel arrangement to provide ‘specialist expertise to assist with developing an implementation framework23 for outcomes-based commissioning and to support providers to establish Transition Funding for Successful Try, Test and Learn projects’.

4.13 The key outcomes sought by the department were:

- a comprehensive project plan for establishing the foundations to provide clarity on the pathway to grow outcomes commissioning and attract other (non-government) funding for projects the department supports; and

- a detailed Implementation Framework to enable the identification of programs which are ready to shift to an outcomes focus and provide a way to consider capability and strategies needed for service providers and departmental operations to enable the department to commence a process of outcomes commissioning and contracting for the best candidate programs in the department.

4.14 While the contract indicates Nous Group was to work with the department in determining whether projects could transition to outcomes funding models prior to entering into an executed grant agreement, there was no evidence of Nous Group’s involvement in the selection of projects.

4.15 In March 2023, the department advised the ANAO that Nous Group’s role in assisting providers to develop their data capabilities:

wasn’t required because the reporting methodology between the provider and [the department] was relatively straight forward with the department undertaking the analysis, and an understanding the providers would be able to show investors the results … cashflow and risks of not meeting outcome payments were mitigated through ‘establishment’ payments.

4.16 The contract with Nous Group was subject to three variations. The third variation delayed by seven months the delivery of all workshops intended to support transition project providers to move to the outcomes framework and to secure longer-term alternative funding arrangements. The workshops were originally due to be completed in September 2021; the deadline was extended to 1 April 2022. The reasons for the delays in milestone delivery and the resulting variations were not documented.

4.17 Nous Group facilitated workshops (February to April 2022) and conducted one-on-one sessions (March to April 2022) with providers to assist with the development of individual business plans, a key deliverable under the contract. The workshop topics were identified in partnership with the department, drawing on insights from a survey that Nous Group and the department issued to providers.

4.18 The workshops covered the following key topics:

- broad overview of outcomes-based models and introduction to peers;

- outcome measurement;

- the impact of outcomes-based approaches on organisations’ strategy and business model;

- philanthropic and corporate funding and partnerships; and

- philanthropic and corporate funding and partnerships — implementation.

4.19 Although workshops commenced in February 2022, by the extended deliverable date in April 2022 providers had already entered the second outcome observation period of their grant agreements (which began on 26 March 2022). During the second observation period, payments were contingent on the provider meeting the performance targets for each outcome. The delay meant that providers were already operating under the outcomes framework before they had received all of the support that Nous Group had been contracted to provide, and before the department had received Nous Group’s assessment of provider maturity.

4.20 Nous Group provided a Summary Report to the department on 28 April 2022, which outlined the varying maturity and capability of providers in relation to payment by outcomes agreements and to seeking corporate investment. Nous Group subsequently issued an addendum report on 10 May 2022. The addendum report focused on individual providers, summarising each provider’s current state and future priorities, the one-on-one consultations with providers, and detailed mapping against the maturity framework. Nous Group’s Summary Report categorised the providers into three archetypes, described in Table 4.3.

Table 4.3: Categorisation of providers by Nous Group

| Archetype | Description | Number of providers |
|---|---|---|
| Highly expert | This provider was leading the thinking in terms of processes and experiences of outcomes-based approaches. Their experience included a portfolio of multiple outcome-based grants and contracts to a range of funders, including corporates. | 1 |
| Experienced | These providers had previous experience in outcomes-based funding approaches and/or philanthropic and corporate funding and partnerships, though acknowledged there were areas they could still grow. Time and capacity to shift were often mentioned as barriers and reflect the changing sector and its existing demands. | 3 |
| Gaining momentum | These providers were relatively new to outcomes-based approaches and diversified funding streams. The [transition] program is one of the providers’ first programs that has required an outcomes-based approach. Many enabling organisational elements, such as data collection and reporting, require a considerable shift to adapt to these new approaches. | 3 |

Source: Provider Workshop Summary Report (28 April 2022) — Nous Group.

4.21 Of the three providers categorised by Nous Group in the lowest archetype of ‘Gaining momentum’, one provider has since withdrawn from the transition program and rescinded its grant agreement.

4.22 Nous Group’s findings highlight that providers generally did not have the capacity to transition to the outcomes frameworks and achieve the associated performance targets within the timeframes outlined in the observation periods (see paragraph 4.3). The timeframes established for achievement of performance targets under the outcomes framework were therefore not reasonably achievable.

Payment of outcomes for the first and second observation periods

4.23 Following the stage one establishment payments, the first outcome payments to providers under stage two were scheduled for June 2022. Following negotiation between the department and the providers, three of the seven providers had their first outcome payment combined with their stage one establishment payments. This arrangement followed the providers’ self-assessments during the grant negotiation process, which indicated that they would need more lead time to recruit participants.

4.24 Of the remaining four providers, one achieved its performance targets for the first observation period under its outcomes framework. The department approved payments for the first observation period even though three of the four providers had not achieved their performance targets.

4.25 The department’s documented decision to process outcome payments for the first observation period, despite performance targets not being achieved in three of four instances, was made in recognition that providers had not had sufficient time to achieve the performance targets. This is consistent with Nous Group’s finding about providers’ limited ability to transition to outcomes frameworks.

4.26 The department took a consistent approach for the second observation period, processing outcome payments despite performance targets being achieved by only one provider. The Minute signed by the delegate in February 2023 approving the release of the payments for the second observation period highlights ongoing challenges which have adversely affected providers’ ability to achieve outcomes in the current employment environment. The Minute states:

Recent analysis of Activity Work Plan Reports indicate providers are utilising their funding in accordance with eligible activities in their grant agreement. All have exceeded or are on track to meet total participant recruitment targets, which means although they may not be achieving outcomes, they are still providing a wraparound service for participants of their project.

…

the Department does not consider these organisations to be underperforming because they continue to deliver services to vulnerable peoples. Circumstances have changed which means performance targets are no longer realistic or achievable for providers to achieve. This risk is why the Outcomes Progress Review process was included in grant agreements.

The Minute also noted that the department would consider the environmental factors, and the outcomes progress reviews held with providers in December 2022 for the second observation period, in the program evaluation due to be completed by 30 June 2023.