
Award of Funding under the Mobile Black Spot Programme


The objective of this audit was to assess the effectiveness of the Department of Communications and the Arts’ assessment and selection of base stations for funding under the first round of the Mobile Black Spot Programme.

Summary and recommendations

Background

1. In June 2015, the Government announced the selection of two network operators (Telstra and Vodafone Hutchison Australia) to deliver 499 new and upgraded mobile base stations across Australia as part of the Mobile Black Spot Programme (MBSP).1 The programme was designed to fulfil a 2013 election commitment to extend mobile phone coverage and industry competition for the provision of coverage in remote, regional and outer metropolitan areas. The total funding for the selected base stations under Round 1 of the programme was $385 million, including $110 million (including GST) in Commonwealth funding and $275 million in co-contributions from state governments, third parties and mobile network operators.

2. The Department of Communications and the Arts is responsible for administering the programme, including the assessment of applications for funding based on criteria that included the: size of the new coverage area of proposed base stations; number of premises and length of transport route covered; coverage of priority areas nominated by Members of Parliament; and co-contributions by the applicants, state governments and third parties. The rollout of the selected base stations funded under Round 1 commenced in late 2015 and is to be concluded by July 2018. The programme guidelines for Round 2 of the programme were released in February 2016. In May 2016, the Government announced an additional $60 million towards Round 3 of the programme as part of its 2016 election campaign, bringing the total commitment to $220 million.

Audit objective and criteria

3. The objective of the audit was to assess the effectiveness of the Department of Communications and the Arts’ assessment and selection of base stations for funding under the first round of the Mobile Black Spot Programme. To conclude against the audit objective the ANAO adopted the following high-level criteria:

- were appropriate programme guidelines developed, which reflected the policy intent and objectives of the programme, and was the programme accessible to potential applicants?

- were applicants assessed in a consistent, transparent, accountable and equitable manner?

- were sound governance arrangements established for the programme?

Conclusion

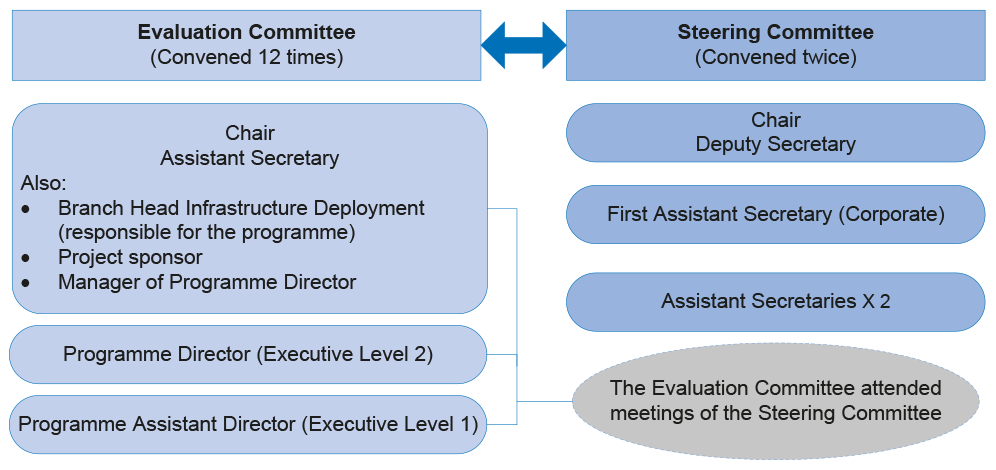

4. The department established the key elements that would be expected to form part of a competitive, merit-based grants programme and, in the main, implemented these elements in accordance with the published guidelines for Round 1 of the Mobile Black Spot Programme. The 499 base stations selected under the programme are expected to provide 162 000 km² of expanded coverage across Australia (including 68 600 km² of new handheld mobile coverage), at a cost to the Commonwealth of $110 million and involving co-contributions from state governments to a value of $92 million.

5. Nevertheless, the following weaknesses in programme administration have impacted on the effectiveness of the assessment and selection process, and the value achieved from programme funding:

- The criteria used by the department to assess the merits of each proposed base station did not sufficiently target funding toward the expansion of coverage where coverage had not previously existed (for example, 89 of the 499 selected base stations provided minimal new coverage of additional premises and kilometres of transport routes at a combined cost of $28 million). As a consequence, public funding has resulted in substantial consolidation of existing coverage provided by grant applicants, as opposed to extending coverage in new areas—a key objective for the programme.

- The department did not establish appropriately structured methodologies to inform the technical and financial assessments of applicant proposals. The development of such methodologies, tailored to the objectives of the programme, would have improved the rigour of the department’s assessment of proposals, particularly in relation to applicant costings.

- The department’s ability to measure the overall impact and effectiveness of the programme, and to report to stakeholders, will be limited by the absence of a fit-for-purpose performance measurement and evaluation framework for the programme.

Supporting findings

Access to funding

6. The department appropriately identified black spots to guide the location of proposed base stations to be funded under the MBSP through its Database of Reported Locations, which listed over 6000 publicly nominated areas with partial, poor and no mobile coverage. The approach taken to promote the programme across targeted electorates was not, however, consistently conducted and this may have had an impact on the distribution of nominations across electorates.

7. The department developed programme guidelines that reflected the objectives of the programme and contained an appropriate range of information about the programme to facilitate the submission of applications, including the programme objectives, the assessment criteria and the approval process. The assessment criteria were largely aligned with the objectives of the programme, although there was scope for the department to have considered developing indicators of community need and specific local issues to inform the delivery of any future funding rounds.

8. While the department considered that it had designed the guidelines to achieve maximum value for money, weaknesses in aspects of the guidelines impacted on this outcome. In particular, the absence of a minimum coverage requirement and threshold scores for each criterion enabled lower ranked proposals, with minimal new coverage and competition outcomes, to be selected for funding.

Assessment and selection of base stations

9. The department received four applications (that contained 555 proposals for new and upgraded base stations seeking $134 million in Commonwealth funding) that, overall, were assessed in accordance with the requirements established in the programme guidelines.

10. The eligibility requirements outlined in the guidelines were applied fairly, with all assessments appropriately documented and retained by the department. The eligibility assessment process resulted in one applicant, a mobile network infrastructure provider, being excluded from further consideration.

11. The proposals for base station funding submitted by the three applicants were assessed and scored in accordance with the guidelines, but the department’s assessment of applicant costings for proposed base stations lacked sufficient rigour. There was scope for the department to have better prepared for the assessment of coverage claims and applicant costs. The assessment of aspects of submitted applications presented a number of challenges for the department, particularly in relation to the assessment of applicants’ coverage claims, with the department required to modify its approach during the course of assessment.

12. The basis on which the merit list of base stations was developed was appropriately supported by documentation, with funding decisions made in accordance with the recommendations arising from the assessment process. All eligible proposed base stations were ranked in order of the assigned assessment score (from highest to lowest) and the equitable distribution principles were applied in accordance with the requirements outlined in the published programme guidelines. As a result, the proposals of one applicant (Optus) were not recommended for funding. The electoral distribution of selected base stations was primarily driven by the areas in which operators chose to locate their proposals and the level of co-contributions that had been committed.

13. An assessment of indicators of value for money achieved by the programme, such as benefits for consumers from new coverage, showed mixed results. While handheld coverage is expected to be extended by 68 600 km² into new areas under the programme, up to 39 base stations were planned to be built in the same or similar areas (according to applicants’ forward network expansion plans) without the need for public funding. Further, up to 89 base stations provided minimal benefits to consumers in areas that previously did not have any coverage and, as a result, did not score a single point for coverage benefit. The award of $28 million in state and Commonwealth funding to these 89 base stations undermined the value for money outcomes achieved from the programme. The extent to which competition is improved under the programme through the use of infrastructure by multiple operators is yet to be determined by applicants and the department.

Programme governance arrangements

14. The oversight arrangements established for the MBSP provided an appropriate framework to govern the assessment and selection process, but implementation of aspects of the framework was not effective. In particular, the documentation retained by the department did not demonstrate that the Steering Committee discharged its responsibilities to oversee the work of the Evaluation Committee.

15. To support programme delivery, the department developed appropriate, high-level plans for implementation, proposal assessment, and project management, with internal guidance established to assist in the process of assessing and selecting base stations. The department did not, however, establish a compliance plan or a plan to measure programme performance.

16. The department established an appropriate framework to identify programme risks, including those relating to probity, with these risks and their treatments monitored on an ad hoc basis. The establishment of a more structured process to review and update risks would provide greater assurance that risks were being appropriately managed.

17. The existing programme performance measures do not enable the department to accurately monitor the extent to which programme objectives are being achieved and, as a result, adequately inform stakeholders of the impact and cost effectiveness of the programme.

18. The funding agreements outlined a number of arrangements that provide the department with some capacity to safeguard public funds through claw-back provisions in the event of underspends. These arrangements require further strengthening to reduce the department’s heavy reliance on funding recipients’ verification of expenditure following rollout completion.

Recommendations

Recommendation No.1 (Paragraph 2.49)

To better target the award of funding to proposals that support the achievement of Mobile Black Spot Programme objectives and maximise value for money outcomes, the ANAO recommends that the Department of Communications and the Arts establish minimum scores for assessment criteria.

Department of Communications and the Arts’ response: Agreed.

Recommendation No.2 (Paragraph 3.25)

To promote timely and robust assessment and to demonstrate that expenditure under the programme represents a proper use of public funds, the ANAO recommends that the Department of Communications and the Arts implement an appropriately detailed assessment methodology tailored to the objectives of the programme.

Department of Communications and the Arts’ response: Agreed.

Recommendation No.3 (Paragraph 4.24)

To assess the extent to which the Mobile Black Spot Programme is achieving the objectives set by government, the ANAO recommends that the Department of Communications and the Arts implement a performance measurement and evaluation framework for the programme.

Department of Communications and the Arts’ response: Agreed.

Summary of entity response

19. The proposed audit report issued under section 19 of the Auditor‐General Act 1997 was provided to the Department of Communications and the Arts. An extract of the proposed report was also provided to the former Parliamentary Secretary to the Minister for Communications. The Department of Communications and the Arts’ summary response to the report is provided below, while its full response is at Appendix 1.

The department agrees with the ANAO’s three recommendations outlined in its proposed audit report and its conclusion that the department established the key elements of a competitive, merit-based grants programme. The department notes that the establishment of a minimum new coverage requirement for round 2 base stations is directly relevant to one of the recommendations, and the two remaining recommendations will be implemented by further enhancement of the assessment and evaluation documentation for round 2.

The department notes the ANAO’s observations regarding the extent of new coverage being delivered by the base stations. The department is of the view that extending new coverage is just one of the aims of the programme and that the mobile telecommunications infrastructure being funded through the programme also achieves the programme’s objective of providing the potential for improved competition.

1. Background

Mobile phone coverage in Australia

1.1 Over a number of years, various reviews of regional telecommunication services have identified inadequate mobile phone coverage as a significant issue for outer metropolitan, regional and remote communities.2 To address this issue, during the 2013 federal election campaign the Opposition outlined its proposal to provide $100 million to expand mobile phone coverage in regional and remote areas along major transport routes, in small communities and in locations prone to experiencing natural disasters.3

Mobile Black Spot Programme

1.2 Following the 2013 election, the new government announced the $100 million Mobile Black Spot Programme (MBSP). The programme was intended to extend mobile phone and wireless broadband services and industry competition for the provision of those services in remote, regional and outer metropolitan Australia.4 The timeline for programme delivery is outlined in Figure 1.1.

Figure 1.1: Mobile Black Spot Programme timeline

Source: ANAO analysis of departmental information.

1.3 The MBSP is administered by the Department of Communications and the Arts. To inform the programme’s design, the department released a discussion paper in December 2013 that outlined delivery options, intended arrangements for applicants to access funding, and possible selection criteria. Information was sought from the public on areas with poor or no mobile coverage (black spots). Federal Members of Parliament (MPs)—who represented 75 regional and remote targeted electorates—were invited to nominate up to three priority black spots.5 Based on the responses received, the department developed a Database of Reported Locations (the database) containing the location details of more than 6000 identified mobile black spot locations.

1.4 In December 2014, the department released the MBSP’s guidelines and invited mobile network operators and infrastructure providers to seek funding for new mobile base stations or for upgrades to existing base stations to address identified black spots. Four applications were submitted by mobile network operators and a mobile network infrastructure provider proposing 555 new or upgraded base stations. Of the four applications, one application was deemed ineligible because it did not meet the requirements of the programme. The remaining three eligible applications were assessed against seven criteria (as outlined in Table 1.1).

Table 1.1: MBSP assessment criteria

| Category | Consideration | Scoring |
| --- | --- | --- |
| 1. New coverage | New handheld coverage (one point per 200 km² capped at six points), new external antenna coverage (one point per 400 km² capped at three points) and/or existing external antenna coverage upgraded to handheld coverage (one point if the area is greater than 100 km²). | Score out of 10 |
| 2. Coverage benefit | Premises that will now receive handheld coverage (one point per three premises) and the length of major transport routes within new handheld and external antenna coverage area (one point for every five kilometres covered). | Uncapped |
| 3. MP priority | The proposed base station’s coverage includes an MP’s priority black spot. | Score of five |
| 4. Co-contributions | Cash and/or in-kind co-contributions from applicants (one point awarded or deducted per $10 000 co-contribution above or below 50 per cent of the total estimated capital cost), and state/territory governments, local councils or other third party (one point per $10 000 co-contributed). | Uncapped |
| 5. Cost to Commonwealth | Net cost to the Commonwealth of each proposal. Each proposal initially received 50 points with one point deducted for every $10 000 incurred by the Commonwealth. | Score out of 50 |
| 6. Service Offering | Service offered from the proposal in addition to the mandatory 3G HSPA+ technology (up to five points) and whether roaming services will be offered to all other operators (10 points). | Score out of 15 |
| 7. Commitment of use | Other operators have committed to use the proposed base stations for at least 10 years (10 points per operator) or are capable of supporting additional operators in the future. | Score out of 20 |

Source: ANAO, adapted from the programme guidelines.
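The scoring rules in Table 1.1 are arithmetic, so a small worked sketch may help. The Python below is illustrative only and covers four of the seven criteria (new coverage, coverage benefit, MP priority and cost to the Commonwealth). The `Proposal` class, its field names and the example figures are hypothetical. The rounding is also an assumption: the table does not say whether partial units earn partial points, so the sketch awards points for whole units only and floors the cost score at zero.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """Hypothetical inputs for one proposed base station (illustrative names)."""
    new_handheld_km2: float
    new_external_antenna_km2: float
    upgraded_to_handheld_km2: float
    premises_covered: int
    transport_route_km: float
    covers_mp_priority: bool
    commonwealth_cost_dollars: int


def new_coverage_score(p: Proposal) -> int:
    # Criterion 1: capped components that together give a score out of 10.
    handheld = min(int(p.new_handheld_km2 // 200), 6)
    antenna = min(int(p.new_external_antenna_km2 // 400), 3)
    upgrade = 1 if p.upgraded_to_handheld_km2 > 100 else 0
    return handheld + antenna + upgrade


def coverage_benefit_score(p: Proposal) -> int:
    # Criterion 2: uncapped; whole-unit rounding is an assumption.
    return p.premises_covered // 3 + int(p.transport_route_km // 5)


def mp_priority_score(p: Proposal) -> int:
    # Criterion 3: flat five points if an MP priority black spot is covered.
    return 5 if p.covers_mp_priority else 0


def cost_to_commonwealth_score(p: Proposal) -> int:
    # Criterion 5: start at 50, deduct one point per whole $10 000.
    # The floor at zero is an assumption.
    return max(50 - p.commonwealth_cost_dollars // 10_000, 0)


# Made-up example figures, not drawn from any actual application.
example = Proposal(
    new_handheld_km2=450,
    new_external_antenna_km2=900,
    upgraded_to_handheld_km2=120,
    premises_covered=10,
    transport_route_km=12.0,
    covers_mp_priority=True,
    commonwealth_cost_dollars=220_000,
)

partial_total = (
    new_coverage_score(example)
    + coverage_benefit_score(example)
    + mp_priority_score(example)
    + cost_to_commonwealth_score(example)
)
print(partial_total)
```

For the hypothetical proposal above, the four criteria give 5, 5, 5 and 28 points respectively. Co-contributions, service offering and commitment of use are omitted to keep the sketch short.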

1.5 Further to the assessment criteria, Commonwealth funding was capped at $500 000 per base station and $20 million (of the $100 million Commonwealth funding allocated) was reserved for proposals that addressed specific local issues and had a co-contribution (cash or in-kind) from state, territory or local governments, local communities or other contributing third parties.

Assessment outcomes

1.6 On 25 June 2015, the Minister for Communications announced the selection of 499 base stations for funding under the programme. The successful base stations were selected from two of the three eligible applications6:

- Telstra was awarded $94.8 million (GST inclusive) for 429 base stations; and

- Vodafone Hutchison Australia was awarded $15.2 million (GST inclusive) for 70 base stations.7

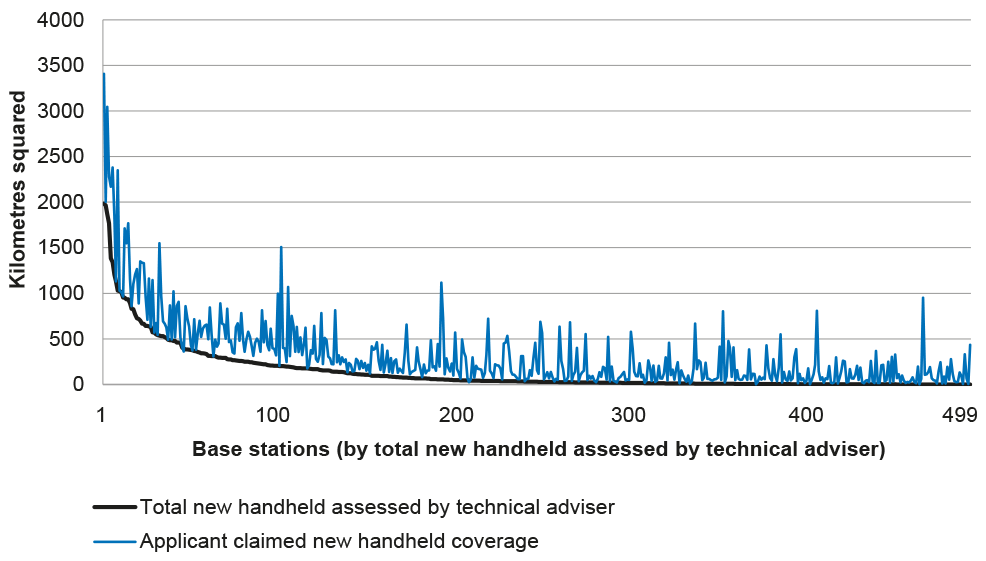

1.7 On the basis of the information included in the applications, the department (with assistance from its technical adviser) estimated that the funded base stations would provide:

- 68 600 km² of new handheld mobile coverage;

- 150 000 km² of new external antenna mobile coverage;

- over 5700 km of new handheld and antenna coverage to major transport routes; and

- coverage of approximately half the black spots identified in the database.8

1.8 The number of base stations funded in each jurisdiction is outlined in Table 1.2.

Table 1.2: Base stations funded by jurisdiction

| State/territory | Total number of new or upgraded base stations selected | Number of Telstra base stations selected | Number of Vodafone Hutchison Australia base stations selected |
| --- | --- | --- | --- |
| Victoria | 110 | 109 | 1 |
| New South Wales | 144 | 101 | 43 |
| Queensland | 68 | 61 | 7 |
| Western Australia | 130 | 128 | 2 |
| South Australia | 11 | 11 | 0 |
| Tasmania | 31 | 14 | 17 |
| Northern Territory | 5 | 5 | 0 |
| Total | 499 | 429 | 70 |

Note: No new or upgraded base stations were funded in the Australian Capital Territory or Australia’s external territories.

Source: ANAO analysis of departmental information.

1.9 The second round of the MBSP commenced in February 2016, with funding of $60 million allocated from the 2015–16 Budget contingency reserve.9

Audit approach

1.10 The objective of this audit was to assess the effectiveness of the Department of Communications and the Arts’ assessment and selection of base stations for funding under the first round of the Mobile Black Spot Programme.

1.11 To form a conclusion against this objective, the ANAO adopted the following high-level criteria:

- Were appropriate programme guidelines developed, which reflected the policy intent and objectives of the programme, and was the programme accessible to potential applicants?

- Were applicants assessed in a consistent, transparent, accountable and equitable manner?

- Were sound governance arrangements established for the programme?

1.12 The scope of the audit included the examination of the department’s administration of the first round of the programme, including the: governance arrangements; development of the database; conduct of the assessments; merit-listing; and decision-making. The audit did not examine other aspects of the programme, such as the ongoing management of the agreements, or the second MBSP funding round.

1.13 In conducting the audit, the ANAO examined departmental records relating to the administration of the programme, including governance procedures, implementation plans and application and eligibility assessment documentation. The ANAO also interviewed departmental staff and stakeholders, including funding applicants and relevant state government entities, and received input from industry and consumers.

1.14 The audit was conducted in accordance with the ANAO’s Auditing Standards at a cost to the ANAO of approximately $449 000.

2. Access to funding

Areas examined

The ANAO examined whether mobile black spots were effectively identified to guide the location of proposed base stations and whether appropriate programme guidelines were established that supported a value for money outcome in the context of the MBSP objectives.

Conclusion

The department’s Database of Reported Locations included sufficient location information on areas with partial, poor and no mobile coverage to guide the location of proposed base stations consistent with the intent of the programme. The promotion of opportunities for the public to nominate black spots through awareness forums was not, however, undertaken in a consistent manner across targeted electorates.

The guidelines developed by the department and approved by government facilitated the application process and largely aligned with the objectives of the programme. However, the lack of minimum new coverage requirements and threshold scores for assessment criteria impacted on the extent to which outcomes and value with public money were achieved.

Areas for improvement

The ANAO made one recommendation aimed at better targeting the award of funding under the MBSP to proposals that support the achievement of programme objectives and deliver value for money outcomes.

The ANAO has also suggested that the department: provide additional information on Member of Parliament priority locations to co-contributors; and develop indicators of community need and specific local issues to inform future policy development.

Were black spots effectively identified to guide the location of proposed base stations?

The department appropriately identified black spots to guide the location of proposed base stations to be funded under the MBSP through its Database of Reported Locations, which listed over 6000 publicly nominated areas with partial, poor and no mobile coverage. The approach taken to promote the programme across targeted electorates was not, however, consistently conducted and this may have had an impact on the distribution of nominations across electorates.

2.1 Publicly available mobile coverage maps are based on modelling of predicted coverage. However, user experience of mobile coverage can vary by device, network and a range of other factors, including the physical features of the landscape. The department does not hold reliable data on the quality and extent of actual mobile coverage across Australia.

2.2 In the absence of reliable data to identify black spots for potential funding under the MBSP, the department developed a spreadsheet that it referred to as the Database of Reported Locations (the database) to collate information on locations with reported inadequate coverage. To be eligible for funding under Round 1 of the programme, the guidelines established for the programme by the department required applicants to propose new base stations or upgrades to existing stations to cover at least one black spot listed on the database. The guidelines also indicated that:

it will only be possible to address a portion of these reported locations through the Programme, and therefore it is very important that the areas of highest need are identified.

Populating the Database of Reported Locations

2.3 To populate the database, the department called for public nominations of outer metropolitan, regional and remote areas with poor mobile coverage. These nominations could be made by telephone (dedicated 1800 number), post or email to the department or by contacting the Federal Member of Parliament representing those electorates targeted by the programme.10 Stakeholders, including state governments, local councils and industry groups were also informed of the programme and the public nomination process of black spots by letter.

2.4 The department did not provide guidance to stakeholders on how much information was required to nominate a black spot. As a result, the information supporting black spot nominations varied considerably. For example, some black spots were identified as a locality only (street name or town), whereas other black spots had been verified by their nominators through consultation with carriers and communities, and included information on specific local issues.

2.5 The department advised the ANAO that it did not encourage mobile network operators to nominate black spots directly, as the intent was for the population of the database with black spot locations to be consumer driven. Evidence retained by the department did not indicate that network operators had directly nominated black spots, although one state had obtained a feasibility assessment of its nominations from an operator prior to submitting its nominations to the department. While engagement between a state government and an operator is necessary to negotiate co-contributions, other potential applicants may be disadvantaged in their participation in the programme if a state government chooses to work with only one operator.

2.6 During the nomination period (16 December 2013 to 15 October 2014), the department received over 10 000 nominations of black spot locations. Nominations were recorded and reviewed by the department. Documents retained by the department did not provide a clear basis on which to determine the extent to which the final list was complete and accurate. The final version of the database was publicly released with the programme guidelines on 8 December 2014, with 6221 eligible black spot locations listed across Australia.11

Promotion by the department

2.7 The department promoted the programme and the nomination of black spots by writing to Members of Parliament, local councils and stakeholders and through its website12, Twitter account13 and media releases. There was no paid advertising for the programme. The Communication Strategy developed by the department for the programme outlined that the departmental website would be the central distribution point for online engagement. Any questions relating to the programme were to be directed to the department through its website, with responses to be posted in a Frequently Asked Questions page on the website. The department’s website also provided stakeholders with information on those areas that were ineligible for funding under the programme.14 The website did not include specific information for residents within the 75 regional and remote electorates that were being targeted by the programme.15 In addition to these electorates, other electorates were also eligible to receive one or more mobile base stations under Round 1 of the programme, as they included locations outside a major urban centre.

2.8 The department informed the ANAO that, in addition to its own promotion activities, information about the programme was also provided through a series of awareness forums for consumers conducted by the then Parliamentary Secretary to the Minister for Communications.

Awareness forums

2.9 In the period from October 2013 to April 2015, the Parliamentary Secretary conducted awareness forums across 42 electorates to discuss regional communications-related issues, including access to broadband internet, mobile coverage and broadcasting. The awareness forums were conducted in all states. The department informed the ANAO that, while it was not involved in identifying the locations where forums would be held, it provided briefings to the Parliamentary Secretary when requested and subsequently monitored local press coverage arising from the forums.

2.10 All of the electorates visited by the Parliamentary Secretary were part of the 75 electorates targeted by the programme. The 75 targeted electorates ranged from 56-100 per cent eligible, based on the proportion of the electorate that contained ineligible areas (major urban centres). Of the electorates visited, 24 were 100 per cent eligible. Of the 33 targeted electorates where awareness forums were not held, seven were 100 per cent eligible, including four electorates held by a government member and three electorates held by the Opposition.16 A breakdown of the electorates where awareness forums had been conducted by party held is presented in Table 2.1. The impact of the awareness forums is discussed later in this chapter.

Table 2.1: Awareness forums conducted by electorate and political party

| Electorate held by | Targeted electorates | Targeted electorates visited (Note 1) |
| --- | --- | --- |
| Government | 55 | 40 (Note 2) |
| Percentage eligible: 99-100 | 36 | 31 |
| Percentage eligible: 73-99 | 13 | 8 |
| Percentage eligible: 55-73 | 6 | 1 |
| Opposition | 17 | 1 |
| Percentage eligible: 99-100 | 4 | 0 |
| Percentage eligible: 73-99 | 11 | 1 |
| Percentage eligible: 55-73 | 2 | 0 |
| Independents and Minor Party Members | 3 | 1 |
| Percentage eligible: 99-100 | 2 | 1 |
| Percentage eligible: 73-99 | 1 | 0 |
| Percentage eligible: 55-73 | 0 | 0 |
| Total | 75 | 42 |

Note 1: The following electorates were visited after 16 October 2014 when nominations closed: Corangamite, Lib; Pearce, Lib; Grey, Lib; McEwen, ALP; Dawson, LNP; Kennedy, KAP; Herbert, LNP; and Maranoa, LNP.

Note 2: Includes the electorates of Calare, Corangamite and Eden-Monaro, which were visited twice.

Source: ANAO analysis of departmental information.

Identifying Member of Parliament priority locations

2.11 The assessment process developed for the MBSP by the department included a role for those Members of Parliament representing the 75 targeted electorates under the programme. These Members of Parliament were provided with an opportunity to nominate to the department up to three black spot locations that they considered to be a priority. As noted in Chapter 1, applicant proposals that covered an MP priority location were to be awarded an additional five points as part of the merit assessment process. While the involvement of Members of Parliament in an assessment process for a Commonwealth grants programme is novel, the programme guidelines indicated that MPs ‘will have information regarding the specific local issues and the locations within their electorates that are in greatest need of mobile coverage’.

2.12 In May 2014, the department wrote to the 75 Members of Parliament in those electorates targeted under MBSP requesting their nominations. Guidance was not provided by the department in relation to those matters to be considered when nominating their priorities, such as specific local issues or seasonal demand. While this approach provided Members of Parliament with flexibility in the nomination process, the lack of guidance, such as the minimum details required to identify a black spot, resulted in considerable variation in the nominations provided to the department.

2.13 In total, MPs nominated 212 priority locations, with six MPs nominating fewer than three priority locations.17 The department appropriately followed up with those Members of Parliament who had nominated more or fewer than three locations, or who had nominated priorities in ineligible locations.

2.14 While the names of the areas nominated as MP priority locations were shared with applicants, the geographic coordinates were withheld, which made it difficult for applicants to accurately target their proposals to those areas.18 Other key co-contributors (state governments) were not provided with the list, which prevented them from aligning their funding support with those priorities. Providing more specific information on priority locations to applicants and potential co-contributors would enhance the usefulness of those priorities in the application process. The impact of the MP priority locations on the assessment process is examined in Chapter 3.

Registering and reviewing black spot nominations

2.15 Once a nomination was received, it was recorded by the department and reviewed before being registered on the database. The key steps in registering nominations, which were manually intensive, involved the department:

- acknowledging (by phone or email) nominations received;

- confirming that the nomination was in an eligible area;

- electronically ‘pinning’ the location of each black spot on a map using online mapping software; and

- recording the coordinates of the black spot (including latitude, longitude, suburb and street name) in the database.

2.16 The department was required to make assumptions about where to record the coordinates of black spots in those cases where the nominations lacked specific information (for example, a long section of road or a reference to a town). To consolidate black spots nominated more than once, the department made judgments to identify one black spot that represented the broader geographical area. The black spots registered were, therefore, considered by the department to be indicative locations that represented the general suburb, locality or area that had been nominated, rather than specific addresses.

2.17 Given the greater than expected level of interest in the programme, additional departmental resources were required to process the black spot nominations. The risks to programme implementation arising from high levels of interest had not been identified by the department and, as a consequence, treatments to respond to high demand had not been developed. Further, the department had not developed a plan to guide the work required to develop and manage the database.

2.18 The department’s approach to identifying mobile black spots was pragmatic. It was based on the minimum information requirements necessary to identify a potential black spot—suburb, street name and location. The nominations were accepted at face value as the department considered that mobile network operators were best placed to validate the existing mobile coverage of locations identified in the database.19

2.19 The database included nominations of areas of existing coverage (according to publicly available coverage maps). These nominations were not removed from the database. As a result, the database included areas with no coverage by any mobile network operator, as well as those with poor quality coverage and areas of existing coverage by at least one operator. This suggests that most locations were indicative of poor or partial coverage, rather than ‘black spots’ with no coverage.

2.20 To check the accuracy of the black spot data recorded within the database, the department released a draft of the database for nine days in October 2014 for public comment.20 The department informed the ANAO that it subsequently reviewed the database for: correct assigning of location; inclusion of eligible areas; and duplicate registrations.

Distribution of registered black spots

2.21 Black spots were registered in 88 of the 109 electorates that contained eligible areas under the programme, including in all of the 75 targeted electorates. A breakdown of registered nominations by the party holding each electorate is provided at Table 2.2.

Table 2.2: Distribution of registered black spots by party

| Party | Number of electorates held | Total registered black spots (Note 1) | Average |
| --- | --- | --- | --- |
| Government | 62 | 5103 | 82 |
| Opposition | 22 | 651 | 30 |
| Independents and Minor Party Members | 4 | 466 | 117 |
| Total | 88 | 6220 | 71 |

Note 1: A nomination received for an area outside of Australia’s electoral boundaries was not included.

Source: ANAO analysis of departmental information.
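The averages reported in Table 2.2 can be checked against the table's own figures. The following Python sketch is illustrative only: the variable and function names are assumptions, and the values are printed to one decimal place, whereas the table reports whole numbers.

```python
# Figures copied from Table 2.2: (electorates held, total registered black spots).
table_2_2 = {
    "Government": (62, 5103),
    "Opposition": (22, 651),
    "Independents and Minor Party Members": (4, 466),
}


def average_per_electorate(electorates: int, black_spots: int) -> float:
    """Mean number of registered black spots per electorate."""
    return black_spots / electorates


averages = {
    party: average_per_electorate(electorates, black_spots)
    for party, (electorates, black_spots) in table_2_2.items()
}

# The 'Total' row is the sum of the three party rows.
total_electorates = sum(e for e, _ in table_2_2.values())
total_black_spots = sum(b for _, b in table_2_2.values())
overall_average = average_per_electorate(total_electorates, total_black_spots)

for party, value in averages.items():
    print(f"{party}: {value:.1f}")
print(f"Total: {total_electorates} electorates, "
      f"{total_black_spots} black spots, average {overall_average:.1f}")
```

The sketch makes the spread visible: the four electorates held by independents and minor party members have the highest average, while Opposition-held electorates have the lowest.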

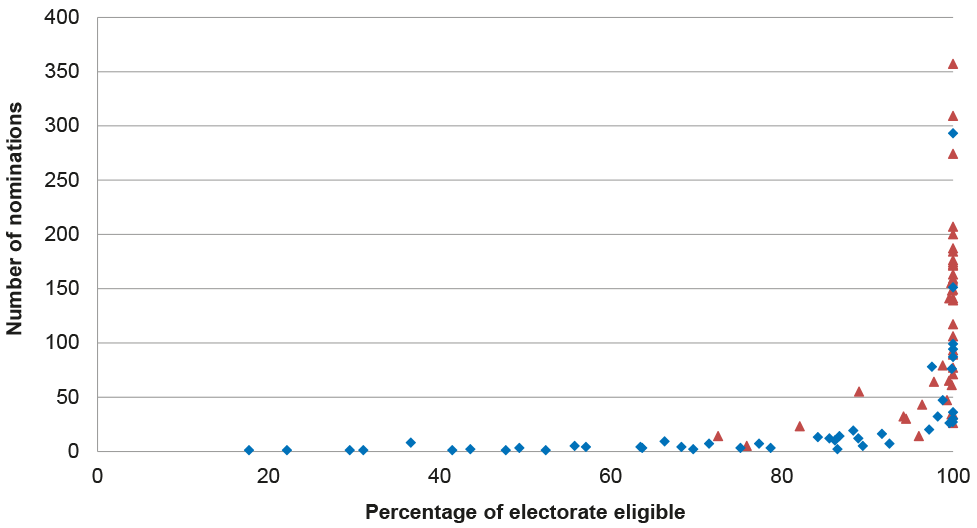

2.22 There was a general correlation between the number of nominated locations registered per electorate and the percentage of the electorate that contained eligible areas under the programme. As illustrated in Figure 2.1, there was a variation in the number of registered nominations in electorates that were 100 per cent eligible. This variation can, in part, be attributed to the differing levels of need, with the distribution of the population within large and small communities potentially impacting on the extent to which black spots are experienced. Further, the number of black spots registered in each electorate may not directly correlate with the number of nominations received, with the department removing duplicate locations.

Figure 2.1: Black spots registered by percentage of electorate eligible for funding

Source: ANAO analysis of departmental data.

2.23 The impact of awareness forums on nominations of black spots is difficult to accurately measure due to the range of factors that may influence community awareness about the programme, such as promotional activities by community groups, local councils and MPs. While acknowledging these factors, the rate of nominated locations registered from 100 per cent eligible electorates where awareness forums were held prior to the database closing was higher (155 average and 154 median nominations) than in 100 per cent eligible electorates where forums were not held or were held after the database closed (128 average and 99 median nominations). The department was aware of the increased local press coverage generated by the forums, but it did not consider that the conduct of forums in some electorates and not others detracted from its approach to promoting the programme. The department further informed the ANAO that it considered that there was a sufficient level of awareness of the programme and a reasonable opportunity for stakeholders to nominate black spots. Notwithstanding this view, the variability in registrations indicates that there would be benefit in the department analysing nomination data and trends to inform programme design parameters.

Did programme guidelines reflect the objectives of the programme?

The department developed programme guidelines that reflected the objectives of the programme and contained an appropriate range of information about the programme to facilitate the submission of applications, including the programme objectives, the assessment criteria and the approval process. The assessment criteria were largely aligned with the objectives of the programme, although there was scope for the department to have considered developing indicators of community need and specific local issues to inform the delivery of any future funding rounds.

Development of programme guidelines

2.24 To inform the development of the MBSP guidelines, the department:

- released a discussion paper on 16 December 2013, which generated 170 submissions from members of the public, industry and stakeholders; and

- held two workshops with the state government representatives and potential applicants in July 2014.21

2.25 The discussion paper generated stakeholder views on the broad design of the programme including three possible funding models: contracting a single operator (or consortium of operators); developing an order of merit for individual base stations from multiple operators; and infrastructure provider co-ordinated implementation. The subsequent workshops with states and potential applicants were used by the department to gain feedback on specific elements of the draft guidelines. The order of merit approach that was adopted by the department was approved by the Government.

2.26 Consistent with the Commonwealth Grants Rules and Guidelines22, the department completed a risk assessment of the granting activities and programme guidelines in consultation with the Department of Finance and the Department of the Prime Minister and Cabinet. The approval to release the guidelines was obtained from the Finance Minister in addition to the Prime Minister and the Minister for Infrastructure and Regional Development in accordance with the programme’s overall risk rating of ‘Medium’.

2.27 The department released the programme guidelines on 8 December 2014 and initially required applications to be submitted by 16 March 2015. An amended version of the guidelines was released on 22 December 2014, which extended the application period by one month, to 16 April 2015, in response to concerns raised by one potential applicant.23

2.28 The guidelines appropriately outlined the: programme objectives; eligibility requirements; total funding available; assessment criteria and associated scores (as outlined in Chapter 1, Table 1.1); approval process; programme timeframe; appeals and complaints handling process; and five principles designed to promote an equitable distribution of funded base stations. Roles and responsibilities under the programme were also clarified, with the assessment of applications and merit-listing assigned to the department and decision-making on the award of funding to be undertaken by the then Minister.

Alignment of the assessment criteria to the objectives of the programme

2.29 A merit-based selection process was outlined in the guidelines as the method for selecting base stations for funding. Base stations would be funded based on merit score (highest to lowest) until Commonwealth funding was exhausted.
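The selection rule in paragraph 2.29 can be sketched as a simple ranking exercise. The Python example below is a minimal illustration rather than the department's method: the `Proposal` fields, the example figures and the decision to stop at the first proposal that exceeds the remaining budget are all assumptions, and the equitable distribution principles in the guidelines are not modelled.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    name: str                    # hypothetical identifier
    merit_score: float           # total score against the merit criteria
    commonwealth_funding: float  # dollars sought from the Commonwealth


def select_by_merit(proposals, budget):
    """Fund proposals from highest to lowest merit score until funding runs out.

    Assumption: selection stops at the first proposal that no longer fits
    within the remaining budget (lower-ranked proposals are not considered).
    """
    selected = []
    remaining = budget
    for proposal in sorted(proposals, key=lambda p: p.merit_score, reverse=True):
        if proposal.commonwealth_funding > remaining:
            break
        selected.append(proposal)
        remaining -= proposal.commonwealth_funding
    return selected, remaining


# Hypothetical proposals and budget, for illustration only.
example = [
    Proposal("C", merit_score=30.0, commonwealth_funding=300_000),
    Proposal("A", merit_score=60.0, commonwealth_funding=400_000),
    Proposal("B", merit_score=45.0, commonwealth_funding=500_000),
]
funded, left_over = select_by_merit(example, budget=1_000_000)
print([p.name for p in funded], left_over)
```

Under this rule the ranking alone determines what is funded, so any weakness in how the merit score is constructed carries directly into the selection.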

2.30 The assessment criteria aligned with the programme’s objectives through the allocation of points for new coverage, coverage benefit, and service standards. Additional points were allocated for proposals that offered roaming services to all operators and for a commitment of use, or capability to be used, by other network operators. The level of Commonwealth funding sought also influenced the allocation of scores, with the assessment criteria placing considerable value on co-contributions provided and the resulting Commonwealth funding sought for each proposal.24 Five points were also allocated for proposals that covered an MP priority, which was designed to address areas in need.

2.31 While acknowledging a benefit for consumers in extending an operator’s network in areas where another operator already provides coverage, the focus of the programme on providing new coverage and coverage of additional premises and transport routes was emphasised in the criteria established for the programme. In particular, the Government’s intention for the programme to fund areas where there is no existing coverage was reflected in assessment criteria 1(a) for new coverage, defined in the guidelines as:

The size (in square kilometres) of the mobile coverage footprint area which will receive new handheld coverage where previously there was no coverage at all from any mobile network operator.

2.32 Areas ineligible for funding included any operator’s existing handheld coverage area.

Specific local issues

2.33 The guidelines established that, of the $100 million allocated to the programme by the Commonwealth, $20 million was reserved for base stations that addressed specific local issues and had a co-contribution (cash or in-kind) from state, territory or local governments, local communities or other contributing third parties. The equitable distribution principles outlined in the guidelines specified that, if less than $20 million was allocated to address specific local issues, the remaining balance would be allocated to fund proposed base stations that had been nominated by an MP in order of merit. The guidelines did not define ‘specific local issues’ or contain provisions to assess if proposals addressed such matters. Ultimately, state and third party co-contributions and MP priority locations were used as a proxy for ‘specific local issues’.

2.34 The department’s database captured location information only, which was one element of community need for mobile coverage. Some nominations included additional information such as detailed site data, references to recent natural disasters, access to emergency services as well as potential economic and social benefits arising from regional businesses, tourism, education and cultural activities. The ANAO’s analysis of black spot nominations indicated that around 18 per cent of emails from the public referred to the benefits of improved coverage for local business. Other common themes that were indicative of specific local issues or consumer need included emergency services and tourism.25

2.35 The department advised the ANAO that it did not analyse this data as it considered that:

There are few (if any) locations across the country that are not susceptible to natural disaster or emergency situations, and therefore all communities/locations could be considered to have valid claims as to requiring access to adequate and reliable mobile phone coverage.26

2.36 In this context, the Regional Telecommunications Review 2015 noted in relation to the MBSP that:

Improved coverage in targeted geographies which yield social and economic benefits to a town or to a community are not easily measured under the current model of the MBSP. The current MBSP evaluation criteria could give higher weight to social and economic benefits that would accrue by extending mobile coverage to an area. Benefits might include economic returns associated with state priorities for regional development, or the deployment of mobiles in Indigenous communities to make Commonwealth and state outlays on existing programmes, such as health, more immediate and relevant, or coverage of major roads and highways carrying significant traffic volumes.27

2.37 Feedback on the programme provided to the ANAO by stakeholders indicated that little consideration appeared to have been given to specific local issues or community need and that cost, rather than need, appeared to direct the selection of successful base station locations. Analysing the information provided with nominations would have assisted the department to better target programme funding to community need. While a more granular approach to determining community need comes with a greater resource impost, the use of this information would have better positioned the department to identify specific local issues and target areas of greatest benefit. There is scope for the department to explore options for developing social and economic indicators of community need and objective measures of specific local issues to inform future policy development.28

Did the guidelines promote value for money programme outcomes?

While the department considered that it had designed the guidelines to achieve maximum value for money, weaknesses in aspects of the guidelines impacted on this outcome. In particular, the absence of a minimum coverage requirement and threshold scores for each criterion enabled lower ranked proposals, with minimal new coverage and competition outcomes, to be selected for funding.

2.38 The department considered that the role of the MBSP guidelines was ‘to ensure the programme is delivered as efficiently and effectively as possible, and achieve maximum value for money’. While the guidelines did not directly define value for money within the selection criteria or outline how value for money would be considered, the equitable distribution principles referred to value for money as a consideration in their implementation to, for example, provide equitable distribution of outcomes across jurisdictions.

2.39 Value for money can be inferred by the extent to which the programme promotes outcomes that would not otherwise occur (referred to as additionality). The programme guidelines provided for additionality to be considered as part of the assessment process by specifying that proposals that were included on an applicant’s forward work plan were ineligible for funding. However, the guidelines did not require the department to consider the extent to which an applicant’s proposed base station covered a black spot that would be addressed by future deployments on another applicant’s forward-build plan.29

2.40 Value for money is also reflected by the extent to which selected proposals achieve programme objectives within the available funding (that is, cost-benefit). The guidelines relied on the ranking of a cost-benefit ratio (through the selection criteria) between competing base stations to determine comparative high and low value for money. The department considered that the requirement for applicants to significantly contribute to the cost of providing improved mobile coverage, state co-contributions and competitive tension between proposals (applicants and locations) would provide some pricing discipline to enhance value for money outcomes.

2.41 The selection criteria attempted to balance the relative values of improved coverage and competition (assessment criteria 1, 2, 3, 6 and 7) with cost (assessment criteria 4 and 5). However, given the absence of minimum coverage requirements and minimum merit scores for criteria for new coverage and coverage benefit, base stations remained in contention for selection, even when they scored poorly against these coverage and competition criteria, if they scored highly against cost related criteria, such as co-contributions.

Minimum coverage requirements

2.42 The establishment of minimum coverage requirements within the criteria was considered by the department in developing the programme’s guidelines. A draft of the guidelines included a requirement for at least 65 per cent of the coverage provided by a proposed base station to be new coverage (not already provided by another mobile network operator). The department advised the ANAO that, following a meeting with the Parliamentary Secretary in June 2014, the requirement was removed because it was considered that:

- the awarding of up to 10 points for new coverage in the assessment criteria was significant;

- potential applicants were limited in their ability to estimate the proportion of their proposed coverage beyond the existing coverage of other operators; and

- the subsequent risk of a proposed base station with minimal new coverage being successful was considered acceptable.

2.43 The department informed the Minister of the risk that proposals may be funded that provide minimal new coverage and advised:

The rationale for not specifying a minimum amount of new coverage is that the programme assessment process inherently favours the benefits of new coverage. For example, the assessment criteria awards additional points to base stations according to the number of premises and lengths of major roads to receive new coverage, in addition to the amount of additional raw coverage in square kilometres.

In addition, it is expected that applicants may propose base stations that provide mobile coverage to communities that are immediately adjacent to, or surrounded by, an area that already receives coverage. Introducing a minimum ‘new coverage’ requirement could result in such a community being ineligible for funding, despite the potential benefits to be gained …

It will be open to the Minister not to accept such proposals if, in the end, the view is that they do not adequately address any of the evaluation criteria including the value for money to the Commonwealth.

2.44 The guidelines developed by the department for Round 2 of the programme, which were released in February 2016, include minimum new coverage requirements for proposals to be eligible for funding.30

Limitations of the scoring approach used

2.45 The set range of scores that may be awarded under each criterion and their relative weighting should target funding towards proposals that best meet the programme’s objectives. The department had placed a strong emphasis on the ‘cost to the Commonwealth’, where each proposal initially received 50 points for the criterion, with one point deducted for every $10 000 incurred by the Commonwealth. Conversely, less emphasis was placed on ‘new coverage’, with proposals awarded up to 10 points for this criterion. The guidelines also included two uncapped criteria: ‘coverage benefit’ and ‘co-contributions’.
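The ‘cost to the Commonwealth’ arithmetic described in paragraph 2.45 can be illustrated with a short Python sketch. The function name is an assumption, as are two details the paragraph does not state: that points are deducted pro rata for part-amounts of $10 000, and that the score may fall below zero for costs above $500 000.

```python
def cost_to_commonwealth_score(commonwealth_cost_dollars: float) -> float:
    """Start at 50 points and deduct one point per $10 000 of Commonwealth cost.

    Assumptions (not stated in the report): deductions are pro rata and the
    result is not floored at zero.
    """
    return 50 - commonwealth_cost_dollars / 10_000


MAX_NEW_COVERAGE_POINTS = 10  # upper limit for 'new coverage' per paragraph 2.45

# Two hypothetical proposals that differ only in Commonwealth cost.
cheaper = cost_to_commonwealth_score(200_000)
dearer = cost_to_commonwealth_score(300_000)
print(cheaper, dearer, cheaper - dearer)
```

On these assumptions, a $100 000 difference in Commonwealth cost shifts the score by 10 points, which equals the full range available for new coverage.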

2.46 The guidelines did not require applicants to achieve a minimum or threshold score for any of the seven selection criteria. Additionally, the guidelines did not establish an overall minimum score that proposals must achieve. This approach enabled base stations with significant co-contributions to achieve high overall scores, and consequently a high ranking, even in those circumstances where they were assessed as having little or no merit against new coverage and coverage benefit. The approach also allowed proposals with relatively low overall scores to be assessed as eligible for funding.

2.47 The department informed the ANAO that it had considered establishing minimum or threshold scores for merit criteria during the development of the guidelines. At that time, the department concluded that threshold scores would be very subjective, arbitrary and would result in base stations that met programme objectives being ineligible.31

2.48 As a consequence, proposals with minimal new coverage and low merit scores remained eligible for funding. To manage the risk of funding base stations with minimal new coverage and support value for money outcomes from future funding rounds, the department should adopt minimum scores under each criterion. The selection of proposals with minimal new coverage is examined in Chapter 3, and the management of programme risks is examined in Chapter 4.
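The effect of minimum scores can be shown with a small Python sketch. All names, scores and thresholds below are hypothetical and chosen only to illustrate the mechanism; they are not drawn from the programme's data or guidelines.

```python
# Hypothetical per-criterion scores for two proposals (illustrative values only).
proposals = {
    "A": {"new_coverage": 8.0, "coverage_benefit": 12.0, "co_contributions": 5.0},
    "B": {"new_coverage": 0.5, "coverage_benefit": 1.0, "co_contributions": 40.0},
}

# Assumed minimum scores for the coverage-related criteria.
minimum_scores = {"new_coverage": 2.0, "coverage_benefit": 3.0}


def total_score(scores):
    """Overall merit score: the sum of the scores for each criterion."""
    return sum(scores.values())


def meets_minimums(scores, minimums):
    """True only if every criterion with a minimum reaches that minimum."""
    return all(scores.get(criterion, 0.0) >= floor
               for criterion, floor in minimums.items())


# Ranking without thresholds: the high co-contribution proposal ranks first.
ranked = sorted(proposals, key=lambda name: total_score(proposals[name]), reverse=True)

# Ranking with thresholds: proposals below any minimum are removed first.
eligible = [name for name in ranked if meets_minimums(proposals[name], minimum_scores)]

print(ranked, eligible)
```

Without thresholds, proposal B ranks first on the strength of its co-contributions despite minimal coverage scores. With thresholds applied, only proposal A remains in contention.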

Recommendation No.1

2.49 To better target the award of funding to proposals that support the achievement of Mobile Black Spot Programme objectives and maximise value for money outcomes, the ANAO recommends that the Department of Communications and the Arts establish minimum scores for assessment criteria.

Department of Communications and the Arts’ response: Agreed.

2.50 The department accepts the ANAO’s recommendation, and notes that the assessment criteria for round 2 of the Mobile Black Spot Programme includes minimum coverage outcomes to be achieved by the funded mobile telecommunications infrastructure.

3. Assessment and selection of base stations

Areas examined

The ANAO examined whether the Department of Communications and the Arts assessed applications in accordance with the requirements established in the published guidelines, applied eligibility requirements fairly, scored proposals with sufficient rigour and established a clear rationale for the merit listing of base stations and funding decisions.

Conclusion

The processes adopted by the department for the assessment and selection of base stations for MBSP funding were aligned with the approach outlined in the guidelines and subordinate assessment plan. The department did not, however, develop a sufficiently robust approach for the assessment of coverage claims and costs, which adversely impacted on timely and robust decision-making. Once the assessment was complete, the department documented a clear rationale for the selected funding proposals that was in accordance with the requirements established in the guidelines. Notwithstanding the general robustness of the process, weaknesses in the design of merit criteria resulted in 89 of the selected proposals (allocated $28 million in state and Commonwealth Government funding) undermining value for money given the minimal new coverage for premises or roads provided.

Areas for improvement

The ANAO made one recommendation aimed at strengthening the timeliness and rigour of the department’s assessment of coverage claims and costs of proposals.

The ANAO has also suggested that the department: review the approach to assessing overlapping coverage of the proposals of each applicant to help ensure that the award of funding is targeted to areas of greatest need; and compare the proximity of applicant proposals to their competitors’ forward-build plans to help demonstrate that programme funding directly supports outcomes that are in addition to normal commercial investment.

Were applications assessed in accordance with the guidelines?

The department received four applications (that contained 555 proposals for new and upgraded base stations seeking $134 million in Commonwealth funding) that, overall, were assessed in accordance with the requirements established in the programme guidelines.

3.1 By the close of the application period on 16 April 2015, the department had received applications from three mobile network operators and one mobile network infrastructure provider. The four applications proposed 555 individual base stations and clusters of base stations and sought $134 million from the Commonwealth. The key features of the assessment process included an eligibility assessment, the assessment of coverage claims and costs, and the application of the equitable distribution principles. The assessment process was based on desktop analysis and did not include site visits or radio frequency validation.

3.2 Overall, the department’s assessment of applications was in accordance with the processes outlined in the guidelines and proposal assessment plan (called an Evaluation Plan by the department) although some steps were conducted in a different sequence. The assessment process was recorded in the meeting papers of the department’s Evaluation Committee. The department’s decision to change the order of assessment activities was not unreasonable, with no adverse impacts identified in the change from the planned approach.

Were eligibility requirements applied fairly?

The eligibility requirements outlined in the guidelines were applied fairly, with all assessments appropriately documented and retained by the department. The eligibility assessment process resulted in one applicant, a mobile network infrastructure provider, being excluded from further consideration.

3.3 There were five steps involved in the department’s assessment of eligibility, as outlined below:

1. Base Station Assessment Tool integrity review

Checked whether the formatting of the spreadsheet file containing the base station proposal information returned by the applicant matched the template originally provided by the department.

2. Application completeness check

Checked whether the applicant had provided all the required information in their application.

3. Applicant eligibility report

Confirmed that the applicant was an eligible mobile network operator or mobile network infrastructure provider.

4. Initial Assessment: Minimum Requirements Assessment

Assessed whether applications met the minimum Operational Agreements conditions outlined in the guidelines, including:

- services required;

- open access and co-location;

- backhaul access and pricing; and

- dispute resolution.

5. Initial eligibility completeness assessment

Provided the overall assessment of the application and recommendations to the department’s application Evaluation Committee.

3.4 Eligibility assessment decisions in relation to mobile coverage were not taken during the initial stages of the assessment process, as these were subject to a separate process of assessment of coverage claims and mapping of coverage benefits. Overall, the range of eligibility assessments designed by the department supported the determination of eligibility in accordance with the guidelines. The department’s conduct of eligibility assessment was well documented.

3.5 Following its assessment and subsequent advice provided by the probity adviser, the department determined that the mobile network infrastructure provider’s application was ineligible on the basis that the applicant had submitted an incomplete application. All three remaining applicants were determined to be eligible and were assessed as meeting the minimum requirements for the programme.

Was the merit of each proposal assessed and scored with sufficient rigour?

The proposals for base station funding submitted by the three applicants were assessed and scored in accordance with the guidelines, but the department’s assessment of applicant costings for proposed base stations lacked sufficient rigour. There was scope for the department to have better prepared for the assessment of coverage claims and applicant costs. The assessment of aspects of submitted applications presented a number of challenges for the department, particularly in relation to the assessment of applicants’ coverage claims, with the department required to modify its approach during the course of assessment.

Assessment against the merit criteria

3.6 To assess applicants’ proposed base stations against the seven merit criteria outlined in the guidelines (see earlier Table 1.1), the department used data and cost information submitted by applicants and obtained assistance from advisers.32 For example, applicants submitted information such as the location of the proposed base station, whether it was a greenfield or brownfield proposal, the funding sought from the Commonwealth, and the co-contributions to be provided by the applicant and relevant state government. This information was included in a Base Station Assessment Tool (BSAT), a spreadsheet template developed by the department and provided to applicants. The spreadsheets were then used by the department to record assessed coverage claims, calculate scores using formulae, and collate a merit list of all proposals. In particular, the spreadsheets were used to calculate scores for co-contributions, service offering and commitment of use. The ANAO found that the BSAT appropriately reflected the assessment criteria outlined in the published guidelines33, with the department establishing a suitable process to review the accuracy of applicants’ completed BSATs. The department used the input of its advisers to assess coverage claims and costs.

Assessing coverage claims made by applicants

3.7 The assessment of coverage claims was a complex, technically demanding and time-consuming task that involved modelling each proposal’s projected coverage in square kilometres (km²) on a topographic map, with this modelling subsequently overlaid with maps of existing coverage to identify areas of new coverage where previously there was none. The department then calculated the size of the new coverage area and the number of new premises and length of transport route(s) (major roads and rail kilometres) covered.34 Given the complex nature and importance of this aspect of the assessment process, it would have been prudent for the department to have developed an appropriately structured methodology to guide the assessment and to have piloted the methodology to determine its applicability.
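The overlay step described in paragraph 3.7 can be sketched conceptually in Python. The example below is not the department's or the technical adviser's methodology: it assumes coverage is represented as sets of grid cells of a fixed size, and the operator names, cell coordinates and cell size are hypothetical.

```python
CELL_AREA_KM2 = 1.0  # assumed grid resolution: each cell represents 1 km²


def new_coverage_cells(proposed, existing_by_operator):
    """Cells in the proposed footprint not already covered by any operator."""
    existing = set().union(*existing_by_operator.values())
    return proposed - existing


def area_km2(cells):
    """Area of a set of grid cells in square kilometres."""
    return len(cells) * CELL_AREA_KM2


# Hypothetical footprints expressed as (row, column) grid cells.
proposed_footprint = {(0, 0), (0, 1), (1, 0), (1, 1)}
existing_coverage = {
    "Operator X": {(0, 0)},
    "Operator Y": {(0, 1), (5, 5)},
}

new_cells = new_coverage_cells(proposed_footprint, existing_coverage)
print(sorted(new_cells), area_km2(new_cells))
```

Counting premises or transport route length within the new coverage area would follow the same pattern, with the new cells used to filter a list of premises or road segments.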

3.8 In March 2015, three months after the application period opened, the department engaged an external technical adviser to assist in verifying the data provided by applicants and assess the proposed coverage of each base station proposal. As part of the assessment process, the technical adviser, through the department, obtained clarifications from applicants on the technical specifications of the provided data.35 The technical adviser informed the department that the coverage modelling methodology of the three applicants, while differing in some respects, was based on valid assumptions and in line with acceptable industry standards.

3.9 The scope of work outlined for the adviser, the report and maps produced by the adviser, and the use of the adviser’s work by the department, underpinned the department’s coverage assessment. As outlined earlier, the department did not establish a structured methodology for the assessment of coverage claims, including how it intended to use the work of the technical adviser to assess proposals.

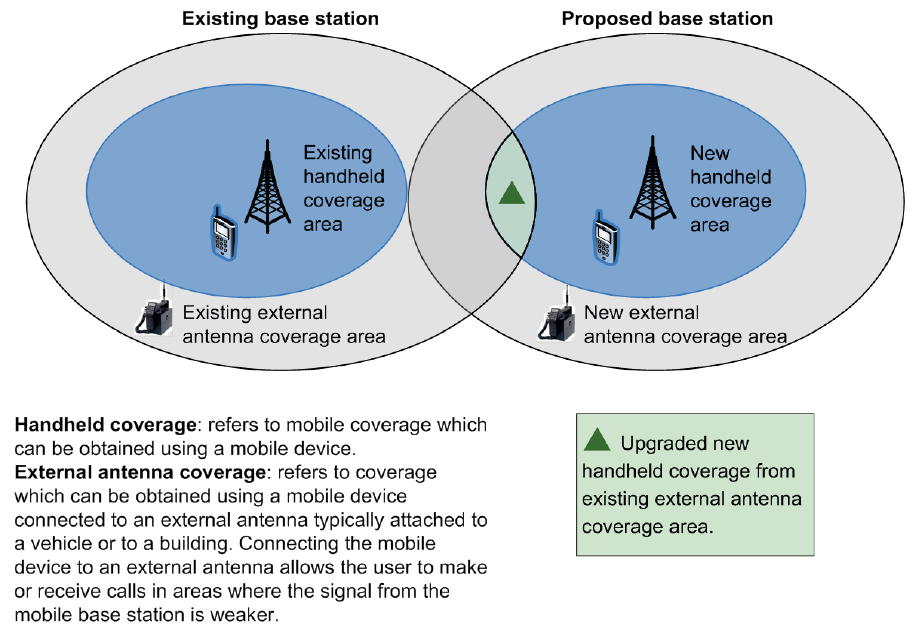

3.10 To score each proposal against the coverage related criteria, the department and technical adviser mapped new coverage in categories of ‘new handheld coverage’, ‘new external antenna coverage’ and ‘upgraded external antenna to handheld coverage’. These categories are illustrated in Figure 3.1.

Figure 3.1: Categories of mobile coverage

Note: When coverage is projected onto a topographical map, the coverage area appears as a splatter, rather than a smooth, continuous circle.

Source: ANAO analysis of departmental information.

3.11 The mapping of coverage claims was initially undertaken on mapping software that was not suitable for the task, and alternative software was required. ‘Custom transformers’ (technical workarounds) were developed to prevent data loss. Developing the custom transformers, re-running the mapping and conducting visual validations resulted in unanticipated delays to aspects of the assessment process, although overall timeframes for the assessment process were met. The piloting of the proposed assessment, as suggested earlier, may have better prepared the department for this work.

3.12 The difficulties that arose during the assessment of coverage claims process were largely managed through the department’s close working relationship with the technical adviser and the iterative problem-solving approach adopted. The ANAO did not identify any adverse impact on the calculation of merit scores.

Assessing proposed base stations’ coverage of identified black spots

3.13 To assess whether proposed base stations would deliver improved coverage to one or more identified black spots, the technical adviser analysed the distance from the edge of each base station’s coverage footprint to the nearest black spot on the database. This analysis indicated that all proposed base stations, except one, would improve (to varying degrees) mobile coverage to an identified black spot.36

Assessing new coverage, coverage benefits and coverage of MP priorities

3.14 The technical adviser calculated the size of new coverage being provided, the number of premises and the number of kilometres of major transport routes included within the new coverage area (coverage benefits) and alignment to the priorities identified by Members of Parliament. Outcomes from the coverage assessments were recorded in spreadsheets and converted into scores through the application of formulae.

3.15 While one proposal was assessed as not providing any new coverage (once existing coverage in the area was subtracted from the total coverage footprint to be provided), the remaining base station proposals were found to provide new coverage in the form of new handheld, external antenna or upgraded handheld coverage from existing external coverage. Based on departmental data, the ANAO found that new coverage per proposed base station ranged from 7534 km² to 0.00019 km². Of the 555 proposals mapped, 35 would provide new coverage to an area of less than one km². Of these 35, nine offered less than 0.1 km² of new coverage and three proposals offered less than 0.01 km² of new coverage.
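The subtraction described in paragraph 3.15 can be illustrated with a minimal sketch. It assumes, purely for illustration, that coverage is represented as a set of equally sized grid cells. The report does not describe the data model used by the department or its technical adviser, so the names `Cell`, `new_coverage_cells` and `area_km2`, and the one square kilometre cell size, are hypothetical.

```python
from typing import Set, Tuple

# Hypothetical (column, row) index of a grid cell; not taken from the report.
Cell = Tuple[int, int]


def new_coverage_cells(footprint: Set[Cell], existing: Set[Cell]) -> Set[Cell]:
    """Return the cells of a proposed footprint that are not already covered."""
    return footprint - existing


def area_km2(cells: Set[Cell], cell_area_km2: float = 1.0) -> float:
    """Convert a cell count to an area, assuming equally sized cells."""
    return len(cells) * cell_area_km2


# Illustrative data only: a 4 x 3 footprint, half of which is already covered.
footprint = {(x, y) for x in range(4) for y in range(3)}
existing = {(x, y) for x in range(2) for y in range(3)}

new_cells = new_coverage_cells(footprint, existing)
new_area = area_km2(new_cells)
```

Under this sketch, a proposal whose footprint lies entirely within existing coverage yields an empty set, which corresponds to the one proposal assessed as providing no new coverage.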

3.16 Most proposed base stations were found to cover at least one premises (averaging 50) or one kilometre of a major transport route (averaging 11 km), although 52 of the assessed base stations did not offer any new coverage of premises or major transport routes. Of the 555 proposed base stations, 162 addressed an MP priority location within the station’s total coverage area.

Assessment of cost

3.17 The guidelines required applicants to provide the estimated cost per base station for construction and installation, connection of mobile signals from the base station to the carrier’s core network, and site acquisition and preparation (including the provision of power). Taking into account co-contributions from applicants and other funding sources, the total cost of all base stations proposed was $420 million. Across all proposals, the applicants committed $194 million in co-contributions and had obtained $92 million in state and third party co-contributions.

3.18 A detailed cost breakdown or description of works and their estimated cost per base station was not required from applicants. As a result, the information provided in applications did not indicate the basis on which applicants identified proposals as greenfield or brownfield, whether proposals intended to leverage off existing infrastructure, whether new towers were to be steel lattice or monopoles, or the type of equipment shelter build proposed. The department considered that proposed costs would reflect broad estimates by the applicants rather than detailed planning. By not requesting this information in the applications, the department was not well placed to assess and demonstrate the reasonableness of the estimates provided.

3.19 As was the case with the assessment of proposed coverage claims outlined in applications, the department did not establish a detailed methodology for the assessment of proposal costs. In March 2015, three months after the application period opened, the department engaged an external financial adviser to assist in the assessment of the base station costs outlined in each proposal and to value the in-kind contributions by state and territory governments, local councils and other third parties.

3.20 The financial adviser reviewed the available cost information included in the three eligible applications, obtained (via the department) and assessed additional information from applicants, and modelled the expected costs of each proposal based on the applicant’s estimates of the capital cost components. The adviser noted that the funding model encouraged applicants to quote deployment costs at higher than market rates in order to mitigate the risk of under-estimating costs.37 The adviser’s modelled costs indicated that applicants had over-estimated costs by up to 35 per cent. While the department subsequently raised concerns to the ANAO about the appropriateness of the methodology used by the financial adviser, the ANAO notes that the financial adviser implemented the methodology that was agreed with the department at the time of engagement.

3.21 The adviser’s draft working paper38 also noted the risk that public funding may be used to improve private network infrastructure not directly related to the proposed base stations:

at a number of base stations, [applicant name] have included in their cost estimates significant upgrades for their existing ‘core network’ as a result of adding a new mobile base station to the edge of the network. While we have little information on how [applicant] came to conclude that it needed these core network upgrades, we remain a little sceptical that the addition of a single new black-spot mobile base station (presumably generating only modest traffic) would be the tipping point for the requirement of an entire core network upgrade that would also potentially benefit many other base stations and/or other services (e.g. fixed broadband) unrelated to the blackspot.

3.22 In contrast to the concerns raised by the financial adviser, the department accepted the applicant’s inclusion of costs to strengthen its core network, taking the view that the applicant was the ‘network specialist’ and had deemed it necessary as part of its proposal. Notwithstanding this view, as the delivery entity for the programme, the department was responsible for ensuring that the provision of funding for proposed base stations represented a proper use of public funds—that is, it was effective, efficient, economical and ethical.39 Given the reliance that the department placed on the expertise of the applicant, it was not well placed to demonstrate the efficient and economical use of programme funds.

3.23 All applicant cost figures were accepted by the department. The additional cost information requested from applicants by the financial adviser was taken by the department as further assurance in relation to the cost of deployment. The department did not consider negotiating with applicants on costs or seek probity advice on other possible avenues to scrutinise or minimise costs. The department’s proposed assessment report (Evaluation Report) noted that, while costs ‘may be higher than expected in some cases’, the proposed claw-back provisions of the funding deed will help mitigate the risk to the Commonwealth of payment above market rates. In this context, it is important to note that the funding agreements negotiated with successful applicants departed from the strong claw-back provisions originally foreshadowed in the guidelines, by enabling applicants to accumulate under-spends in funding pools. Aspects of the funding agreements are examined further in Chapter 4.

3.24 Overall, the department’s assessment of costs included in MBSP applications was not sufficiently robust and primarily relied on the achievement of price discipline through competitive tension between applicants and proposals and rewarding base stations requiring less Commonwealth funding with greater points. The department allocated points based on the original costs submitted with proposals and accepted those costs based on its anticipated use of claw-back provisions in the management of funding agreements. In relation to future rounds of the programme, the department should develop an appropriately detailed methodology for the assessment of applicant coverage claims and costs to promote more timely and robust decision-making and to demonstrate that expenditure under the programme represents a proper use of public funds.

Recommendation No.2

3.25 To promote timely and robust assessment and to demonstrate that expenditure under the programme represents a proper use of public funds, the ANAO recommends that the Department of Communications and the Arts implement an appropriately detailed assessment methodology tailored to the objectives of the programme.

Department of Communications and the Arts’ response: Agreed.

3.26 The department accepts the ANAO’s recommendation and will further enhance the assessment documentation which has been developed to evaluate the mobile telecommunications infrastructure proposed under round 2 of the programme.

Was there a clear rationale for the merit list of base stations and funding decisions?

The basis on which the merit list of base stations was developed was appropriately supported by documentation, with funding decisions made in accordance with the recommendations arising from the assessment process. All eligible proposed base stations were ranked in order of the assigned assessment score (from highest to lowest) and the equitable distribution principles were applied in accordance with the requirements outlined in the published programme guidelines. As a result, the proposals of one applicant (Optus) were not recommended for funding. The electoral distribution of selected base stations was primarily driven by the area that operators chose to locate their proposals and the level of co-contributions that had been committed.

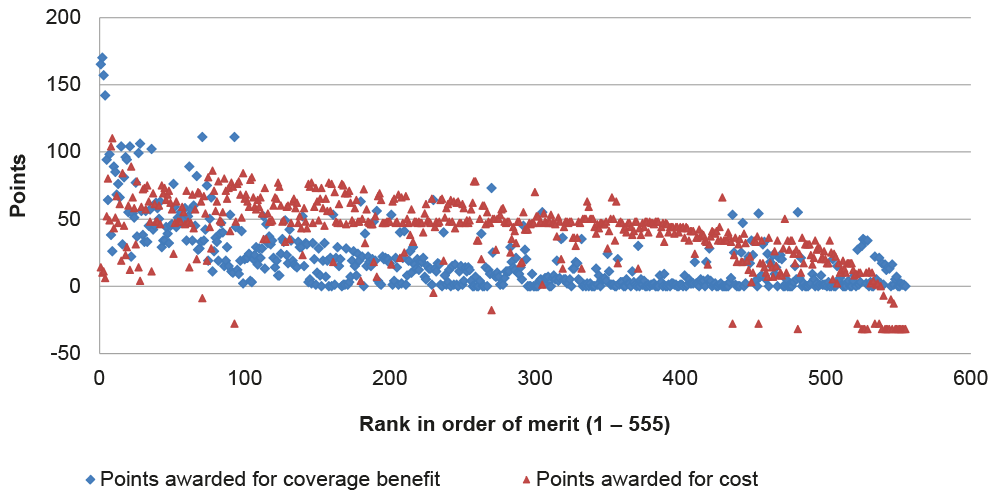

Compiling the draft merit list

3.27 To compile the draft merit list, all eligible proposals were ranked in order of score, from highest to lowest. Across all proposals, the average score was 77 points (given uncapped scoring for some criteria there was no maximum score) with the highest ranked base station receiving 200 points and the lowest ranked receiving minus seven points.40 The department calculated and recorded scores in a spreadsheet. The department informed the ANAO that members of the project team visually checked the spreadsheet to help ensure accuracy and completeness of the scores during the development of the draft merit list. An examination by the ANAO of the formulae used to convert values (such as amount of new coverage or number of premises) into points indicated that the spreadsheets and calculations appropriately reflected the selection criteria. The ANAO did not identify any discrepancies in the scores.
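The ranking step described in paragraph 3.27 can be sketched as follows. This is an illustration only: the department compiled its merit list in a spreadsheet, and the `Proposal` class, the `site_id` values and the treatment of tied scores are assumptions that do not come from the report. The three example scores reuse the highest, average and lowest figures quoted above as sample inputs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Proposal:
    site_id: str   # hypothetical identifier, not from the report
    score: float   # total assessment score; may be negative


def rank_by_score(proposals: List[Proposal]) -> List[Proposal]:
    """Order proposals from highest to lowest score.

    Python's sort is stable, so tied proposals keep their input order.
    How ties were actually handled is not stated in the report.
    """
    return sorted(proposals, key=lambda p: p.score, reverse=True)


# Illustrative inputs only.
proposals = [
    Proposal("site-A", 77.0),
    Proposal("site-B", 200.0),
    Proposal("site-C", -7.0),
]
merit_list = rank_by_score(proposals)
```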

3.28 Fewer than 40 per cent of selected base stations were awarded points for new coverage, as the number of square kilometres that needed to be achieved to be awarded a single point (200 km² for new handheld coverage) was too large for base stations proposed in areas where there was existing coverage. All proposals scored points for providing 4G services, in addition to the minimum requirement of 3G technology. No proposals were awarded points for the commitment of an additional provider to also utilise the proposed base station and deliver the specified services on a commercial basis for a minimum of 10 years, though most were allocated points for the proposal being capable of supporting additional providers in the future. A summary of maximum and minimum scores awarded for merit assessed proposals and average scores against the selection criteria is outlined in Table 3.1.
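The effect of the 200 square kilometre threshold can be illustrated with a short sketch. It assumes that only whole points were awarded and that the score was capped at the maximum of 10 shown for the new coverage criterion in Table 3.1. The report does not set out the department's actual formula or how the other coverage categories were weighted, so the function name and the rounding rule are hypothetical.

```python
KM2_PER_POINT = 200.0  # threshold quoted in paragraph 3.28 for new handheld coverage
MAX_POINTS = 10        # maximum available for the new coverage criterion (Table 3.1)


def new_coverage_points(new_handheld_km2: float) -> int:
    """Whole points for new handheld coverage, capped at MAX_POINTS.

    Assumption: partial points are not awarded (floor division).
    """
    if new_handheld_km2 < 0:
        raise ValueError("coverage area cannot be negative")
    return min(MAX_POINTS, int(new_handheld_km2 // KM2_PER_POINT))


# Illustrative inputs: the smallest and largest areas quoted in paragraph 3.15,
# plus values either side of the threshold.
examples = [0.00019, 199.9, 200.0, 7534.0]
points = [new_coverage_points(a) for a in examples]
```

Under these assumptions, a base station adding less than 200 square kilometres of new handheld coverage receives no points for this criterion, which is consistent with the low average score reported for new coverage.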

Table 3.1: Summary of actual scores awarded for merit assessed proposals

|

Criteria |

Maximum score available |

Maximum award |

Minimum award |

Average |

|

New coverage |

10 |

10 |

0 |

0.9 |

|

Coverage benefit |

Uncapped |

170 |

0 |

19.0 |

|

Member of Parliament priority |

5 |