
Award of Funding under the Building Better Regions Fund


Audit snapshot

Why did we do this audit?

- The Building Better Regions Fund (BBRF) is the largest open and competitive grants program administered by the Department of Infrastructure, Transport, Regional Development, Communications and the Arts (Infrastructure).

Key facts

- The program was established in 2016.

- Five funding rounds have been completed, with $1.15 billion in grant funding awarded to 1293 projects. A sixth round is underway.

- Funding awarded under the program was to be directed to projects outside of major capital cities to drive economic growth and build stronger regional communities into the future.

What did we find?

- The award of funding was partly effective and partly consistent with the Commonwealth Grants Rules and Guidelines.

- While the BBRF was well designed in a number of respects, there were also deficiencies in some important areas.

- Appropriate funding recommendations were provided for three of the five completed rounds.

- Funding decisions were not appropriately informed by departmental advice, and the basis for the funding decisions has not been appropriately documented.

- The award of funding was partly consistent with the guidelines.

What did we recommend?

- The Auditor-General made one recommendation to Infrastructure and four aimed at improving the grants administration framework.

- The Department of Finance noted all four of the recommendations directed at improving the framework, and Infrastructure agreed to its one recommendation.

$1.15bn

in grant funding awarded across the five completed rounds.

94%

of approved projects were located in regional areas.

65%

of infrastructure project stream applications approved for funding were not those assessed as being the most meritorious in the departmental assessment process.

Summary and recommendations

Background

1. The Building Better Regions Fund (BBRF) is a $1.38 billion open and competitive grant program administered by the Department of Infrastructure, Transport, Regional Development, Communications and the Arts1 (Infrastructure) under the Commonwealth Grants Rules and Guidelines (CGRGs). Commencing in November 2016, it was one of the first grant programs to be delivered in partnership2 between Infrastructure and the Department of Industry, Science and Resources3 (DISR).4

2. The objectives of the BBRF are to drive economic growth and build stronger regional communities into the future. A total of $1.15 billion has been awarded to 1293 projects across the first five funding rounds. A sixth round was underway at the time of the audit, with successful applications expected to be announced between June and August 2022. The ANAO has not audited the assessment of applications and award of grant funding under the sixth round.

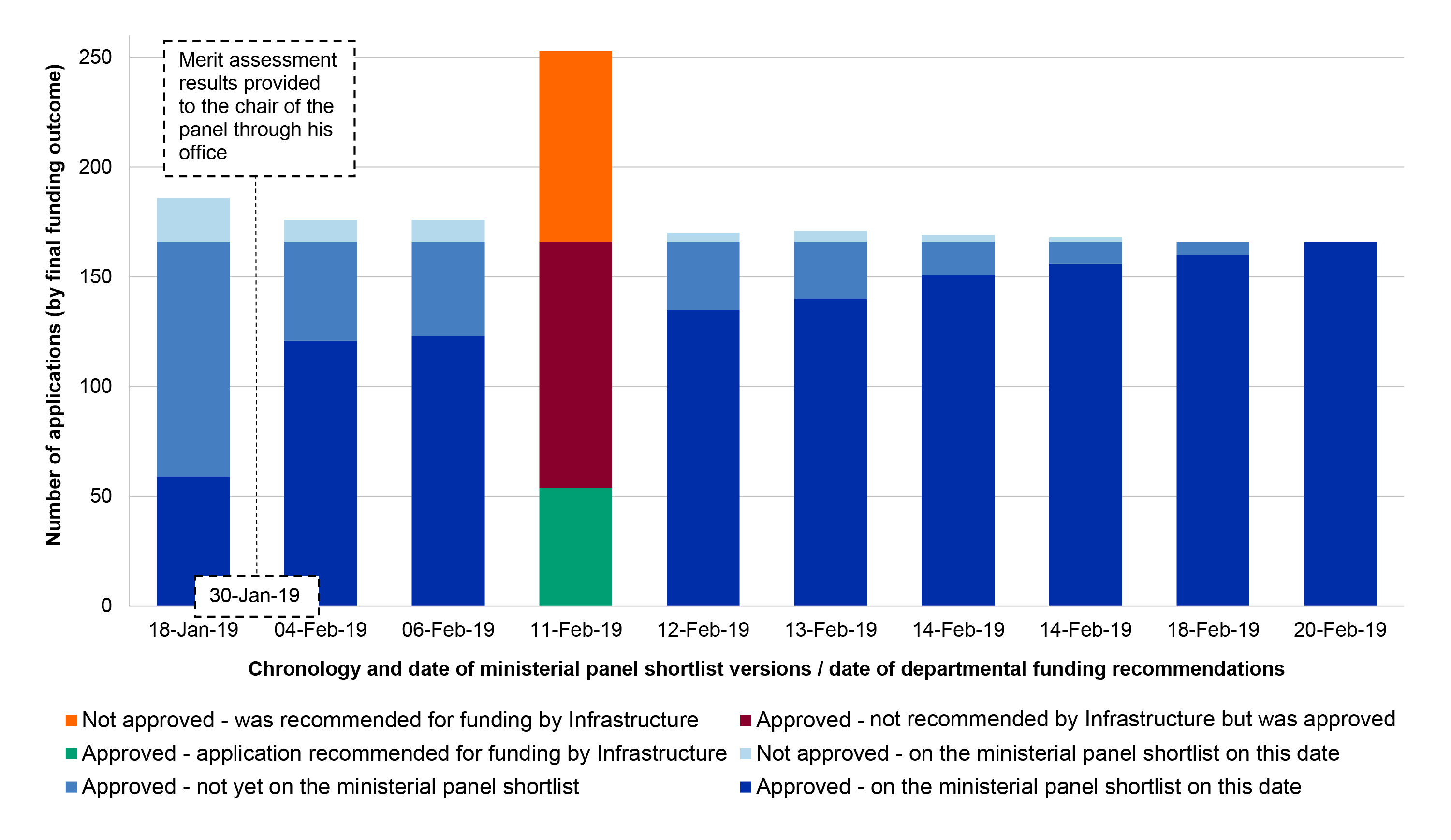

3. Separate guidelines were prepared in each round for the ‘community investments’ and ‘infrastructure projects’ funding streams. Grants awarded under the infrastructure projects stream were significantly larger: an average of $1.57 million was approved, compared with an average of $45,782 under the community investments stream. This is reflected in 98 per cent of total BBRF funding having been awarded under the infrastructure projects stream.

Rationale for undertaking the audit

4. The BBRF is the largest open, competitive and merits-based grants program administered by Infrastructure. This audit was undertaken concurrently with the completion of the fifth round and the design of the sixth round, which provided opportunities to examine any improvements made over time. An audit of the BBRF also allowed the ANAO to provide assurance to the Parliament on two further matters. The first was whether Infrastructure has implemented relevant agreed recommendations from the last audit of a regional grant funding program, tabled in November 2019,5 and the second was whether it has embedded grants administration improvements previously observed by the ANAO6 and addressed matters previously raised by the Joint Committee of Public Accounts and Audit (JCPAA) in reviews of earlier audits of regional grants programs. The JCPAA identified the potential audit topic as a priority of the Parliament for 2021–22.

Audit objective and criteria

5. The objective of the audit was to assess whether the award of funding under the BBRF was effective and consistent with the CGRGs.

6. To form a conclusion against the objective, the following high-level criteria were adopted:

- Was the program well designed?

- Were appropriate funding recommendations provided?

- Were funding decisions informed by the advice provided and appropriately documented?

- Was the distribution of funding consistent with program objectives and grant opportunity guidelines?

Conclusion

7. The award of funding under the first five rounds of the Building Better Regions Fund was partly effective and partly consistent with the CGRGs.

8. While the BBRF was well designed in a number of respects, there were also deficiencies in some important areas. Positive aspects included the guidelines clearly setting out three things: that an open competitive application process was being employed; relevant and appropriate eligibility requirements; and the process through which the Business Grants Hub would assess the merits of applications against the four published criteria. Key shortcomings in the design of the program were that the published program guidelines across all six rounds:

- did not transparently set out the membership of the panel that was to make decisions about which applications would receive grant funding; and

- stated that the decision-making panel could, at its discretion, consider a non-exhaustive list of ‘other factors’ to override the results of the merit assessment process, without applicants being asked to specifically address those other factors in their applications for grant funding.

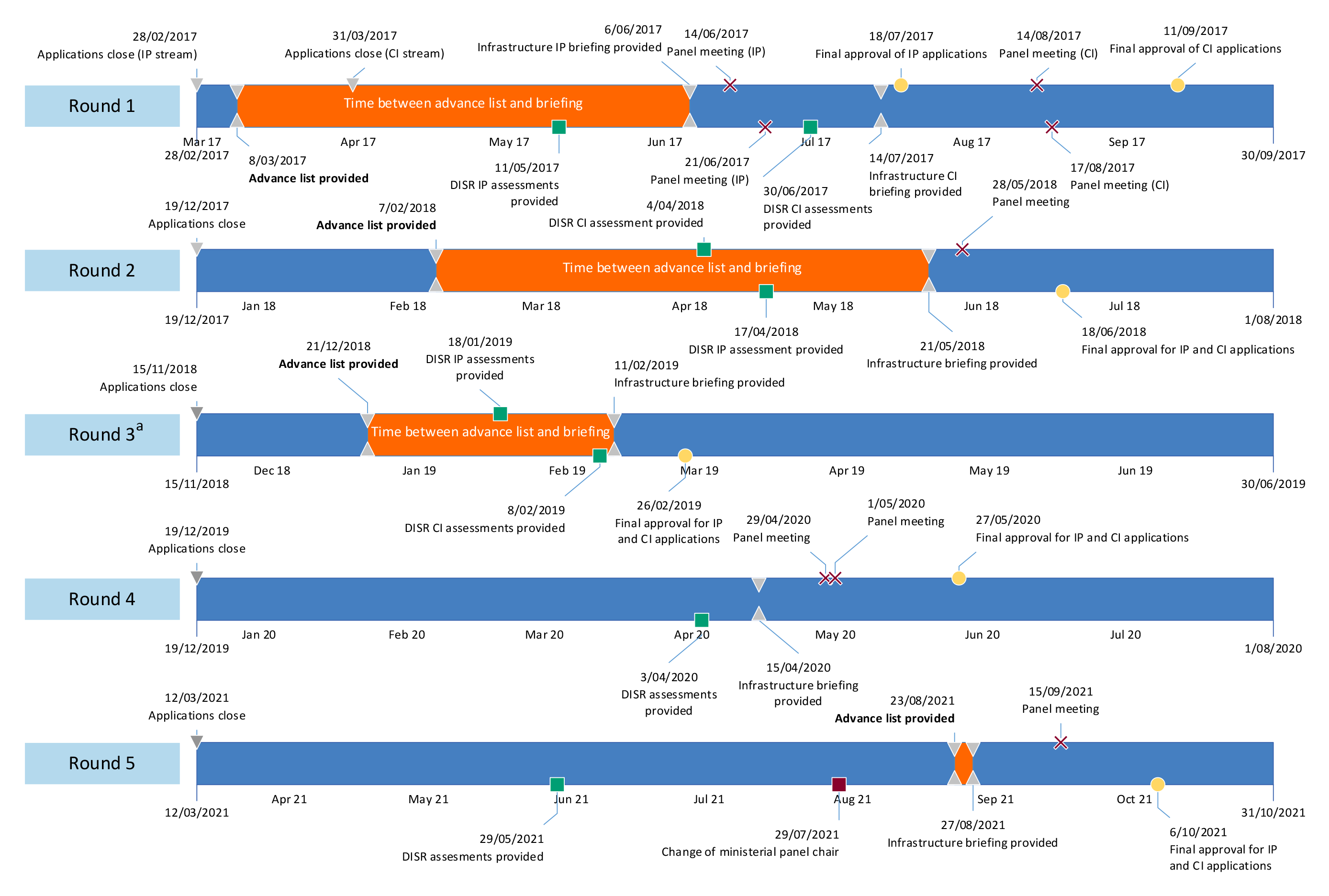

9. Infrastructure provided appropriate funding recommendations based on merit assessment results for three of the five completed rounds. This was not the case for the third and fifth funding rounds. In those two rounds, Infrastructure did not clearly identify which applications should be successful up to the limit of the available funding. Instead, it recommended that the panel select from a ‘pool’ of projects. The total value of those projects significantly exceeded the funding available to be awarded: it was more than double the funding available in the third round, and more than triple the funding available in the fifth round. This change in Infrastructure’s approach had a significant consequence for the fifth round. Under the CGRGs, the Finance Minister must be told when an application the department had recommended be rejected is approved. Because of the pool approach, none of the 528 applications assessed as representing value with relevant money (seeking $761.4 million in grant funding) would ever need to be reported on that basis if it were approved.

10. The decisions about the award of grant funding across each of the five funding rounds were not appropriately informed by departmental advice, particularly with respect to the third and fifth rounds. The basis for the funding decisions has not been appropriately documented, particularly in the three most recently completed rounds. In the infrastructure projects stream (the larger of the two streams), the approved applications were often not those assessed as best meeting the published criteria. Applications assessed as highly meritorious against the published criteria were also often not awarded funding. Records of the inputs to the decision-making process were inadequate. So were records of the basis for decisions to approve projects assessed as less meritorious against the four published merit criteria, and to reject applications assessed as having higher merit.

11. Overall, the award of funding was partly consistent with the grant opportunity guidelines. Consistent with the program design, the funding has been directed to projects in rural and regional areas. As the program has progressed through the first five rounds, there has been an increasing disconnect between the assessment results against the published merit criteria and the applications approved for funding under the infrastructure projects stream (which comprised the majority of approved projects and funding). This reflects the extent to which the ministerial panel has increasingly relied upon the ‘other factors’ outlined in the published program guidelines when making funding decisions.

Supporting findings

Program design

12. Grant opportunity guidelines were developed, approved and published for each of the six rounds of the BBRF that have been conducted (see paragraphs 2.2 to 2.5).

13. The guidelines for each round clearly outlined the way in which funding candidates would be identified, including the application process. An open competitive approach to selecting the most meritorious applications from those applications assessed as eligible was to be adopted. The guidelines for each round underscored the importance of applications’ performance against the assessment criteria as this was to be the basis for the award of funding (see paragraphs 2.6 to 2.8).

14. Relevant and appropriate eligibility requirements were set out in the grant opportunity guidelines and have remained relatively consistent for each round. Key changes included: adding eligible project locations from outer metropolitan areas; strengthening the definition of an eligible applicant; and decreasing the volume of mandatory supporting documentation required for some projects. Additional eligibility requirements were used in round four to facilitate the targeting and funding of projects in drought-affected communities (see paragraphs 2.9 to 2.14).

15. Four relevant and appropriate merit criteria were identified in the guidelines for each round and stream. In a section separate to the merit criteria, the guidelines for each round also included a non-exhaustive list of ‘other factors’ the ministerial panel could consider when deciding which applications to fund. The inclusion of the other factors was not consistent with the key principles for grants administration set out in the CGRGs as it had the potential to undermine the award of grant funding on the basis of merit (see paragraphs 2.15 to 2.30).

16. Up to and including round four, the grant opportunity guidelines for each round set out how applications would be scored against the merit criteria and stated that the results would provide the basis for determining the most meritorious applications for funding under the program. The guidelines have not accurately reflected the significance of ministerial discretion (represented in the guidelines by the ‘other factors’ clause) as a basis for the panel’s funding decisions (see paragraphs 2.33 to 2.36).

17. While the decision-making role performed by the ministerial panel was clearly identified in the published guidelines for each round, the membership of the panel was not. In addition, the division of roles and responsibilities between the two administering departments has been refined over time. These changes are reflected in various services schedules to a December 2016 Memorandum of Understanding (see paragraphs 2.37 to 2.41).

18. Infrastructure has practices in place to use lessons learned from previous rounds to inform the design and delivery of subsequent BBRF funding rounds. However, Infrastructure agreed to a recommendation in an earlier audit of the award of regional grant funding and has still not taken appropriate steps to analyse why the panel diverged from the results of the documented merit assessments. Such analysis would inform the development of the guidelines for later rounds and/or adjustments to the conduct of the assessment process. For example, the department could assess applications against all factors that the guidelines state can be taken into account when funding decisions are made (see paragraphs 2.42 to 2.49).

Funding recommendations

19. In each round, Infrastructure provided decision-makers with funding recommendations in written briefings and submissions. The guidelines outlined that Cabinet would be consulted on the funding decisions, and written submissions to Cabinet were prepared for each round (see paragraphs 3.3 to 3.7).

20. While the approach differed across rounds, the results of the assessment process were used to place competing, eligible applications that had met the published criteria to a minimum standard into orders of merit that:

- for rounds one and two, individually ranked almost all applications by overall merit assessment score from highest scoring to lowest; and

- from round three onwards, ranked applications in groups, or ranking bands, of between one and 61 applications by overall merit assessment score from highest scoring to lowest (see paragraphs 3.8 to 3.14).

21. For the third, fourth and fifth rounds, applicant requests to be exempted from the requirement to include partnership funding were assessed. For these rounds, clear recommendations were provided to the ministerial panel as to whether those requests should be granted. These requests were not assessed in the first two rounds. In those rounds, Infrastructure did not recommend which requests the panel should approve. Instead, it provided the panel with details of the applications seeking exemptions and recommended that the chair record which of those exemption requests the panel had approved. Across all five rounds, every exemption request was approved by the respective ministerial panel, irrespective of any departmental recommendations (see paragraphs 3.15 to 3.23).

22. For the first, second and fourth rounds, Infrastructure clearly recommended that the ministerial panel approve specific applications up to the limit of the available funding. Consistent with the published guidelines, the department recommended that the panel approve the 591 highest ranked applications across those three rounds, up to an aggregate value of $661 million. The third and fifth rounds departed from the competitive and merits-based process outlined in the program guidelines. For those rounds, Infrastructure’s recommendations were presented as ‘pools’ of candidates for the panel to choose from. Although the candidates were sorted in descending order of total assessment score, the department did not recommend that the highest scored and ranked applications be chosen as successful. Specifically, in:

- round three, there were 306 ‘recommended’ projects seeking grants of $426 million put forward by Infrastructure for the panel to choose from, notwithstanding that there was only $205 million available; and

- round five, there were 528 ‘recommended’ projects seeking grants of $761 million put forward by Infrastructure for the panel to choose from, compared with the $200 million the guidelines had said was available and the $300 million subsequently made available (see paragraphs 3.24 to 3.34).
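The scale of the round three and round five ‘pools’ can be illustrated by simple arithmetic. The sketch below divides the value of the projects Infrastructure put forward by the funding available, using the rounded figures reported in paragraph 22. It is illustrative only. The helper name `oversubscription_ratio` is hypothetical and does not describe any departmental method.

```python
# Illustrative arithmetic only, using the rounded figures reported in paragraph 22
# (all values in millions of dollars). The helper name is hypothetical.

def oversubscription_ratio(pool_value: float, funding_available: float) -> float:
    """Return how many times the value of a 'pool' exceeds the funding available."""
    if funding_available <= 0:
        raise ValueError("funding_available must be positive")
    return pool_value / funding_available

# (round, value of 'recommended' pool, funding available)
cases = [
    ("round three", 426, 205),
    ("round five (guidelines)", 761, 200),
    ("round five (after increase)", 761, 300),
]

ratios = {name: oversubscription_ratio(pool, available) for name, pool, available in cases}

for name, ratio in ratios.items():
    print(f"{name}: pool is {ratio:.2f} times the funding available")
```

On these figures, the round three pool was a little more than double the funding available. The round five pool was more than three times the $200 million set out in the guidelines, and about two and a half times the $300 million subsequently made available.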

Funding decisions

23. All applications approved under the five concluded funding rounds were submitted through the open processes outlined in the program guidelines. With one exception, only those applications assessed as eligible were then assessed against the merit criteria and awarded grant funding (see paragraphs 4.2 to 4.3).

24. Approval processes have varied over time and have not been conducted fully in accordance with the published grant opportunity guidelines. Consistent with these guidelines, funding approvals for each round were provided by the chair of the panel after consultation with Cabinet. Including the ‘other factors’ in the guidelines was inconsistent with the intent of the CGRGs. It eroded the role of the published merit assessment process by giving decision-makers discretion that was exercised with little or no transparency. In addition, the guidelines did not provide for the panel to consider applications against the other factors before receiving Infrastructure’s recommendations. Nor did they provide for the panel to do so in parallel with DISR’s merit assessment process, as occurred in the third round (see paragraphs 4.4 to 4.21).

25. Appropriate records were not made of the decision-making process. Record-keeping for the decision-making process did not reflect good practice in the first round, and it deteriorated significantly in later rounds. Infrastructure performed a secretariat function for the panel in the first round. Although DISR’s grants hub is readily able to provide secretariat services, it has not been engaged to provide administrative support for the panel (see paragraphs 4.22 to 4.34).

26. Out of the 1293 projects approved across the five concluded rounds, there were 179 instances where the bases for the panel’s funding decisions were not recorded. This comprised:

- 164 instances where the panel decided not to approve applications that had been recommended by Infrastructure for funding (which had been assessed as having a high degree of merit against the four published criteria), without recording the reasons for not approving those applications. This contrasted with the approach taken for 40 other recommended applications where the panel’s reasons for not approving were recorded; and

- 15 instances where projects not recommended by the department were funded without the basis for the decision being recorded (10 of the 76 such decisions in the second round, and 5 of the 173 in the fifth round).

27. In addition, and particularly in more recent rounds, while there has been some record made of why applications assessed as less meritorious were approved for funding, the basis recorded did not adequately explain why applications assessed as more meritorious in terms of the published criteria have been overlooked in favour of those other applications with lower merit scores (see paragraphs 4.35 to 4.41).

28. Processes were not in place for decision-makers to re-score applications where they disagreed with the departmental merit assessments. This departed from previously observed better practice. In the later stages of the first round, Infrastructure advised the minister that it was unnecessary to re-score applications because re-scoring was not required by the CGRGs. Accordingly, where the panel indicated disagreement with departmental scoring in the first two rounds, it did not revise scores. By the third round, the panel’s written basis for funding decisions made no reference to the merit assessment results, instead focusing on the ‘other factors’ mentioned in the guidelines for the program (see paragraphs 4.42 to 4.48).

Award of funding

29. Projects approved for funding under the community investments stream (grant funding totalling $26.3 million) have consistently been those assessed as being the most meritorious against the published assessment criteria. The infrastructure projects (IP) stream involved grant funding of $1.1 billion, or 98 per cent of total program funding awarded. Under this stream, 65 per cent of applications approved for funding were not those assessed as being the most meritorious in the assessment process. Under the first round, 75 per cent of IP applications funded had been scored highly (and recommended by Infrastructure for funding). For subsequent rounds, highly scored IP applications were approved at a lower rate of between 13 and 55 per cent (see paragraphs 5.3 to 5.11).

30. Across the five rounds, the approach for reporting the panel’s approval of applications that were not recommended for funding falls short of the intent of the CGRGs. This has been the result of Infrastructure changing its approach to making funding recommendations. Certain types of decisions under some rounds have been reported to the Finance Minister, with similar decisions in other rounds not reported. In addition, Infrastructure’s approach to categorising applications into ‘pools’ in conjunction with alternatively worded funding recommendations has resulted in categories of applications where there is no clear recommendation and, as a result and based on Infrastructure’s interpretation of the CGRGs, no requirement for applications approved in those pools to be reported to the Finance Minister (see paragraphs 5.12 to 5.19).

31. Consistent with the objectives for the BBRF, almost $1.08 billion (94 per cent) of the funding awarded under the first five rounds has been directed towards projects in rural (74 per cent or $850 million) and provincial (20 per cent or $227 million) regions. Compared with the results of the merit assessment process, the award of funding favoured electorates held by The Nationals as those electorates were awarded $104 million (or 29 per cent) more funding in total across the five rounds than would have been the case had the results of the merit assessment process been relied upon. Electorates held by all other parties were awarded less grant funding than would have been the case had the merit assessment results been relied upon (see paragraphs 5.22 to 5.26).
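The regional shares in paragraph 31 can be reproduced from the reported totals. The sketch below assumes total awarded funding of $1.15 billion across the five rounds (paragraph 2), together with the rounded rural and provincial amounts given above. Because the inputs are rounded, the results agree with the reported percentages only to the nearest whole per cent.

```python
# Illustrative check of the regional shares reported in paragraph 31.
# Inputs are the rounded figures reported in the audit, in millions of dollars.
TOTAL_AWARDED = 1150  # five completed rounds

awarded_by_region = {
    "rural": 850,
    "provincial": 227,
}

regional_total = sum(awarded_by_region.values())

def share_per_cent(amount: float, total: float = TOTAL_AWARDED) -> int:
    """Return amount as a percentage of total, rounded to the nearest whole per cent."""
    return round(100 * amount / total)

shares = {region: share_per_cent(amount) for region, amount in awarded_by_region.items()}
shares["rural and provincial"] = share_per_cent(regional_total)

print(f"Rural and provincial total: ${regional_total} million")
for region, pct in shares.items():
    print(f"{region}: {pct} per cent")
```

The combined total of $1,077 million corresponds to the "almost $1.08 billion" reported above.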

32. While there were delays between final approvals and announcements under the first two rounds, public announcements of the funding outcomes have been timely for recent rounds. There have been some delays in completing projects, with seven projects from round one and 33 from round two still underway (see paragraphs 5.27 to 5.30).

Recommendations

Recommendation no. 1

Paragraph 2.31

The Australian Government amend the Commonwealth Grants Rules and Guidelines to require that, in circumstances where funding decisions may be made by reference to factors that are in addition to, or instead of, the published assessment criteria:

- applicants be afforded the opportunity to address those other factors as part of their application for funding; and

- records be made as part of the decision-making process as to how each competing applicant had been assessed to perform against each of those factors.

Department of Finance response: Noted.

Recommendation no. 2

Paragraph 3.35

The Australian Government amend the Commonwealth Grants Rules and Guidelines to strengthen the written advice prepared for approvers on the merits of a proposed grant or group of grants by requiring that advice to include a clear and unambiguous funding recommendation that:

- identifies the recommended applications that have been assessed as eligible and the most meritorious against the published assessment criteria; and

- does not recommend applications for an aggregate value of grant funding that exceeds the total amount available for the particular grant opportunity.

Department of Finance response: Noted.

Recommendation no. 3

Paragraph 4.17

The Australian Government amend the Commonwealth Grants Rules and Guidelines to apply the principles for grants administration to situations where stakeholders, such as parliamentarians, play a role in the assessment and award of grant funding.

Department of Finance response: Noted.

Recommendation no. 4

Paragraph 4.49

The Department of Infrastructure, Transport, Regional Development, Communications and the Arts:

- put appropriate processes in place to ensure that where more than one minister, such as a ministerial panel, performs the role of grants decision-maker its written advice on the merits of proposed grants is provided to all panel members prior to funding decisions being taken; and

- improve record-keeping practices so that the basis for decisions is clear, including in circumstances where the decision-maker has not agreed with the assessment of candidates undertaken by officials.

Department of Infrastructure, Transport, Regional Development, Communications and the Arts response: Agreed.

Recommendation no. 5

Paragraph 5.20

The Australian Government amend the Commonwealth Grants Rules and Guidelines to require that:

- when advising on the award of grant funding, officials recommend that the decision-maker reject all applications not supported for the award of a grant within the available funding envelope; and

- the basis for any decisions to not approve applications that were recommended for funding be recorded.

Department of Finance response: Noted.

Summary of entity response

33. The proposed audit report was circulated to the three relevant departments and the chair of each ministerial panel from the five concluded funding rounds (as stakeholders with a special interest in the report under subsection 17(5) of the Auditor‐General Act 1997). Letters of response are included at Appendix 1 and were provided by Infrastructure, DISR, the Department of Finance, the Hon Michael McCormack MP and the Hon Fiona Nash. Summary responses, where provided, are included below.

Department of Infrastructure, Transport, Regional Development, Communications and the Arts

The Department of Infrastructure, Transport, Regional Development and Communications welcomes the report, agrees with the recommendation directed to us, and notes the remaining four recommendations propose modifications to the Commonwealth Grants Rules and Guidelines (CGRGs).

In discharging our grant administration functions across a range of diverse programs, the Department has consistently complied with both the requirements and the spirit of the CGRGs, whilst operating within the context of the design of each program and the Government’s policy objectives.

We note the ANAO’s commentary about the purported use of a ‘pool’ in rounds three and five of the program and acknowledge we incorrectly used the term ‘pool’ in our briefing – it was not an accurate characterisation of the advice we provided to Ministers, in which every eligible application in each round was merit assessed, scored and ranked.

We also note the ANAO’s broader remarks to all entities on the importance of instituting a governance culture that enlivens the principles-based framework of grants administration. We strongly support this sentiment – seeking to live it in our published Values and through our reforms such as development of a pro-integrity framework, improved record keeping, establishment of a regional program governance office, and strengthened probity arrangements.

ANAO comments on Infrastructure’s summary response

34. The ANAO concluded that the award of funding under the first five rounds of the Building Better Regions Fund was partly effective and partly consistent with the CGRGs. The four recommendations to the Australian Government to improve aspects of the CGRGs reflect findings that a stronger grants administration framework would guard against the practices employed in the award of BBRF funding that were not consistent with the underlying intent of the framework.

Department of Industry, Science and Resources

The Department of Industry, Science, Energy and Resources acknowledges the Australian National Audit Office’s report on Award of Funding under the Building Better Regions Fund.

The department notes the ANAO’s conclusion that the award of funding was partly effective and partly consistent with the Commonwealth Grant Rules and Guidelines (CGRGs). The department supports the ANAO’s recommendations to Finance to amend the Commonwealth Grants Rules and Guidelines to strengthen the framework for Commonwealth grants administration.

The department welcomes the ANAO’s finding that the program was well designed in a number of respects.

The department thanks the ANAO for its report, and commits to working with policy partners that engage the Business Grants Hub to ensure adequate transparency in program guidelines when co-designing future programs to ensure alignment with the underlying intent of the CGRGs framework.

Department of Finance

The Department of Finance notes recommendations 1, 2, 3 and 5, which are directed to the Australian Government to amend the Commonwealth Grants Rules and Guidelines.

Any amendment to the Commonwealth Grants Rules and Guidelines is a matter for consideration by Government. The Department of Finance will brief the Government on the ANAO’s findings and recommendations.

The Hon Fiona Nash

I support the ANAO’s recommendations.

The Building Better Regions Fund’s purpose is to ensure funding for projects designed to strengthen regional communities in rural, regional and remote areas of Australia.

While the departmental processes for assessing and scoring applications are in my view sound, it must be recognised that the departmental decision-makers in that process are located in the cities. They do not have the benefit of an on-the-ground understanding of the regional communities, and their circumstances, where projects are proposed to be located, and the potential impact and benefit of those projects.

One of the intentions of the Ministerial Panel was to bring local community knowledge to the decision-making process to strengthen the robustness of funding decisions.

My involvement in the program was through Round 1. I would note that I believe my decisions were appropriately informed by departmental advice.

Regarding Round 1, the ANAO stated:

- In Rounds 1 and 2 “there was a focus on identifying where applicant’s claims against the merit criteria had been overstated or understated”

- “Under the first round, 75% of IP projects [funded] had been scored highly..”

- “Round 1 had the greatest alignment between the merit assessment results and funding approval.” (IP stream).

Key messages from this audit for all Australian Government entities

Below is a summary of key messages, including instances of good practice, which have been identified in this audit and may be relevant for the operations of other Australian Government entities.

Governance and risk management

Grants

1. Background

Introduction

1.1 The objectives of the Building Better Regions Fund (BBRF) are to drive economic growth and build stronger regional communities into the future. A total of $1.38 billion has been allocated to the program out to 2024–25.

1.2 As illustrated by Figure 1.1, five funding rounds have been completed. Across the five rounds, more than 4300 applications were received seeking $6.26 billion in grant funding. A total of $1.15 billion in grant funding has been awarded to 1293 projects. Rounds three and five were the most over-subscribed. In October 2021, the funding available for award in round five was increased by $100 million from the $200 million announced in the grant opportunity guidelines. A sixth funding round commenced in the latter part of the audit, for which the successful applications are expected to be announced between June and August 2022.7

1.3 Two streams of funding have been available in each round — infrastructure projects and community investments. The infrastructure projects stream funds new infrastructure or the upgrade or extension of existing infrastructure. The community investments stream funds new or expanded local events, strategic regional plans, or leadership and capability strengthening activities.

1.4 Of the 1293 successful applications across the five rounds, 56 per cent were within the infrastructure projects stream and 44 per cent were in the community investments stream. Grants awarded under the infrastructure projects stream were significantly larger: an average of $1.57 million was approved, compared with an average of $45,782 under the community investments stream. This is reflected in 98 per cent of total BBRF funding having been awarded under the infrastructure projects stream.

Program administration

1.5 The Commonwealth Grants Rules and Guidelines (CGRGs) are issued by the Finance Minister under section 105C of the Public Governance, Performance and Accountability Act 2013 (PGPA Act). Introduced in July 2009, the CGRGs state that the objective of grants administration is to ‘promote proper use and management of public resources through collaboration with government and non-government stakeholders to achieve government policy outcomes’.8 The CGRGs provide a largely principles-based framework, with some specific requirements.9 They are set out in two interrelated parts, with Part 1 containing mandatory requirements (including that practices and procedures must be put in place to ensure adherence to the seven key grants administration principles10), and Part 2 further explaining how entities should apply the key principles.

1.6 Principles-based grants administration frameworks continue to be adopted over prescriptive approaches by governments because they provide the required flexibility to be fit-for-purpose across a range of granting activities and circumstances.11 For these frameworks to operate effectively, the key principles must be prioritised and applied with appropriate judgement to each grant opportunity in a way that meets both the intent of the framework and its requirements.

1.7 The Department of Infrastructure, Transport, Regional Development, Communications and the Arts (Infrastructure) is the Australian Government entity with policy responsibility for the BBRF.12 The Department of Industry, Science and Resources (DISR)13 through its Business Grants Hub has been engaged by Infrastructure to administer some aspects of the program (discussed at paragraph 2.38).

1.8 A ministerial panel (the panel, see Appendix 3 for the membership of the panel) makes the key decisions on the award of BBRF funding.14 The panel:

- decides whether to agree to applicant requests for an exemption to the requirement that they make a cash contribution towards the cost of the project; and

- in consultation with Cabinet, decides which applications are funded.

1.9 Infrastructure has provided written briefings to inform the panel in its decision-making at both key points, with secretariat support for the panel and its members provided by the office of the chair of the ministerial panel.15 For round five, this support was to include documenting any conflicts of interest declared by panel members in respect of applications from within their electorates and whether they had recused themselves from discussing those projects.16

Figure 1.1: Applications received, assessed and awarded funding

Note a: In round five, there were three applications (seeking total funding of $595,515) among those that had been identified for funding by the ministerial panel that were later withdrawn by the respective applicants between 23 and 30 September 2021. On 6 October 2021, five other applications (seeking a total of $589,951) that had been assessed as being value with relevant money were approved instead.

Source: ANAO analysis of departmental records.

Rationale for undertaking the audit

1.10 Established in 2016, the BBRF is a longstanding grants program whose value has grown over time to $1.38 billion. It is the largest open, competitive and merits-based program subject to the CGRGs administered by Infrastructure. This audit was undertaken concurrent with completion of the fifth round and the commencement of the design of the sixth round, providing opportunities to examine any improvements that have been made over time. An audit of the BBRF also allowed the ANAO to provide assurance to the Parliament as to whether Infrastructure had:

- implemented relevant agreed recommendations from the last audit of a regional grant funding program, tabled in November 2019¹⁷; and

- embedded the grants administration improvements observed by the ANAO in audits of regional grants programs between August 2013 and December 2016.18 These improvements had addressed matters raised previously by the Joint Committee of Public Accounts and Audit (JCPAA) in its reviews of earlier ANAO audits of regional grants programs (Appendix 4 provides further detail).

1.11 The JCPAA identified the potential audit topic as a priority of the Parliament for 2021–22.

Audit approach

Audit objective, criteria and scope

1.12 The objective of the audit was to assess whether the award of funding under the BBRF was effective as well as being consistent with the CGRGs.

1.13 To form a conclusion against the objective, the following high-level criteria were adopted.

- Was the program well designed?

- Were appropriate funding recommendations provided?

- Were funding decisions informed by the advice provided and appropriately documented?

- Was the distribution of funding consistent with program objectives and grant opportunity guidelines?

1.14 The audit examined the award of funding under each of the five concluded funding rounds and the implementation of the sixth round. The audit scope did not include the conduct of the eligibility and merit assessment work undertaken by DISR (the use of the results of DISR’s assessment work by Infrastructure in developing funding recommendations was examined), or the negotiation and management of grant agreements by DISR.

Audit methodology

1.15 The audit methodology included examination and analysis of Infrastructure and DISR records and engagement with relevant Infrastructure and DISR staff.19

1.16 Access to relevant email records was delayed by 12 weeks due to issues with Infrastructure’s IT system. It was agreed that Infrastructure’s process for identifying and gathering email records in response to requests under the Freedom of Information Act 1982 (FOI Act) provided an appropriate mechanism for retrieving the records requested by the ANAO. In addition to enabling Infrastructure officials to work remotely and in line with COVID-19 related public health directions, this approach was also expected to provide more timely and accurate results than those expected from Infrastructure’s IT system back-ups.

1.17 Initial records provided to the ANAO represented around 75 per cent of the expected volume, indicating there were underlying issues with Infrastructure’s FOI processes. Work was undertaken by Infrastructure to rectify this during the course of the audit to provide the ANAO with a complete set of the requested records. Infrastructure advised the ANAO in April 2022 that this has resulted in improvements to the way its records are identified for FOI purposes.

1.18 The audit was conducted in accordance with ANAO Auditing Standards at a cost to the ANAO of approximately $614,000.

1.19 The team members for this audit were Amy Willmott, Swatilekha Ahmed, Jessica Carroll, Tessa Osborne and Brian Boyd.

2. Program design

Areas examined

The ANAO examined whether the Building Better Regions Fund (BBRF) was well designed, with a focus on whether appropriate grant opportunity guidelines were in place for each round.

Conclusion

While the BBRF was well designed in a number of respects, there were also deficiencies in a number of important areas. Positive aspects included the guidelines clearly setting out: that an open competitive application process was being employed; relevant and appropriate eligibility requirements; and the process through which the Business Grants Hub would assess the merits of applications against the four published criteria. Key shortcomings in the design of the program were that the published program guidelines across all six rounds:

- did not transparently set out the membership of the panel that was to make decisions about which applications would receive grant funding; and

- stated that the decision-making panel may, at its discretion, consider a non-exhaustive list of ‘other factors’ to override the results of the merit assessment process, without applicants being asked to specifically address those other factors in their applications for grant funding.

Areas for improvement

The ANAO made one recommendation aimed at improving the way the grants administration framework promotes alignment between the program objectives, the conduct of merit assessments and decision-making.

2.1 The Commonwealth Grants Rules and Guidelines (CGRGs) require that practices and procedures be in place that ensure that the conduct of grants administration is consistent with the seven key principles for grants administration. To promote open, transparent and equitable access to available funding, the CGRGs require that grant opportunity guidelines be developed and made publicly available where grant applications are to be sought. The ANAO examined whether the design of the program included the development of grant opportunity guidelines for each selection process, and whether those guidelines were consistent with the CGRGs.

Were guidelines for each round developed, approved and published?

Grant opportunity guidelines were developed, approved and published for each of the six rounds of the BBRF that have been conducted.

2.2 For each of the six rounds, grant opportunity guidelines were developed by the Department of Infrastructure, Transport, Regional Development, Communications and the Arts (Infrastructure) and the Department of Industry, Science and Resources (DISR). Separate guidelines were prepared in each round for the ‘community investments’ (CI) and ‘infrastructure projects’ (IP) funding streams.

2.3 Under the CGRGs, entities must conduct a risk assessment of the grants and associated guidelines. Unless a grant is a one-off or ad hoc grant, entities must provide this risk assessment to, and agree its rating with, the Department of Finance20 (Finance) and the Department of the Prime Minister and Cabinet (PM&C). The agreed rating then informs the handling and public release process for the guidelines. Finance’s ‘streamlined approval process for grant guidelines’ allows for guidelines to be approved for publishing by the responsible portfolio minister without the Finance Minister’s approval in the following circumstances:

- for grant opportunities where amendments are to ‘existing guidelines’ and constitute ‘minor administrative changes’; and

- where the agreed grant opportunity ‘risk rating is low and the [whole-of-government guidelines] templates have been populated as instructed’.

2.4 Guidelines were approved by the chair of the ministerial panel prior to their publication, and for the first three rounds, were also approved by either the Finance Minister or the Prime Minister. All guidelines were made publicly available on business.gov.au on the same day that applications opened, with the exception of the first and fifth rounds where the guidelines were made available earlier. Table 2.1 provides an overview of the approval and release of the guidelines for each round.

Table 2.1: Release of grant opportunity guidelines across funding rounds

| Round | Guidelines approvedᵃ | Approver | Guidelines published | Applications open | Applications closed (IP stream) | Applications closed (CI stream) |
|---|---|---|---|---|---|---|
| 1 | 22 Nov 2016 | Finance Minister | 23 Nov 2016 | 18 Jan 2017 | 28 Feb 2017 | 31 Mar 2017 |
| 2 | 6 Nov 2017 | Prime Minister | 7 Nov 2017 | 7 Nov 2017 | 19 Dec 2017 | 19 Dec 2017 |
| 3 | 20 Sep 2018 | Finance Minister | 27 Sep 2018 | 27 Sep 2018 | 15 Nov 2018 | 15 Nov 2018 |
| 4 | 12 Nov 2019 | Chair of the panel | 14 Nov 2019 | 14 Nov 2019 | 19 Dec 2019 | 19 Dec 2019 |
| 5 | 9 Dec 2020 | Chair of the panel | 16 Dec 2020ᵇ | 12 Jan 2021 | 12 Mar 2021ᵇ | 12 Mar 2021ᵇ |
| 6 | 2 Dec 2021 | Chair of the panel | 13 Dec 2021 | 13 Dec 2021 | 10 Feb 2022 | 10 Feb 2022 |

Note a: From round four, approval of the guidelines has been provided by the chair of the ministerial panel in accordance with agreement between Infrastructure and Finance that the changes in the program guidelines were ‘minor administrative changes’.

Note b: The round five guidelines were amended on 4 March 2021 to reflect the extended application deadline for both funding streams from 4 to 12 March 2021.

Source: ANAO analysis of departmental records.

2.5 With some variations and departures (see paragraph 2.22), the BBRF guidelines were based largely on Finance’s whole-of-government guidelines templates and so reflected the minimum content requirements set out in the CGRGs. Revisions were made to the guidelines prior to each new round from lessons learned from previous rounds.

Did the guidelines clearly outline the way in which funding candidates would be identified, including any application process?

The guidelines for each round clearly outlined the way in which funding candidates would be identified, including the application process. An open competitive approach to selecting the most meritorious applications from those applications assessed as eligible was to be adopted. The guidelines for each round underscored the importance of applications’ performance against the assessment criteria as this was to be the basis for the award of funding.

2.6 The CGRGs outline that competitive, merits-based selection processes can achieve better outcomes and value with relevant money and should be used unless specifically agreed otherwise by a minister, accountable authority or delegate.21 Between 31 December 2017 and 30 June 2021, 20 per cent of grants representing 20 per cent of grant funding were reported by entities subject to the CGRGs as having been the result of an open competitive selection process.22

2.7 The grant opportunity guidelines for each round of the BBRF outlined that an open call for applications was being undertaken. A significant number of applications were received in each round (see Figure 1.1), with $6.26 billion sought, more than four times the $1.38 billion available.

2.8 The guidelines further outlined that applications would be assessed against specified eligibility criteria with eligible applications to then proceed to be assessed against specified merit criteria. Consistent with the CGRGs, the guidelines for the six rounds each emphasised the importance of applications’ performance against the merit criteria. After merit assessments were completed, advice was to be provided to the decision-maker on the merit of the competing applications. The ministerial panel, as the decision-maker, had final approval on which projects would be chosen for funding.

Were relevant and appropriate eligibility requirements established?

Relevant and appropriate eligibility requirements were set out in the grant opportunity guidelines and have remained relatively consistent for each round. Key changes included: adding eligible project locations from outer metropolitan areas; strengthening the definition of an eligible applicant; and decreasing the volume of mandatory supporting documentation required for some projects. Additional eligibility requirements were used in round four to facilitate the targeting and funding of projects in drought-affected communities.

2.9 The CGRGs explain that eligibility criteria represent mandatory requirements which must be met to qualify for a grant. Eligibility criteria should be straightforward and effectively communicated in the grant opportunity guidelines to enable the selection of grantees in a consistent, transparent and accountable manner.

2.10 The guidelines for each round of the BBRF included two sections setting out the mandatory requirements that needed to be satisfied to qualify to proceed to the merit assessment stage of the grant awarding process.

2.11 The first section was titled ‘eligibility criteria’, with the guidelines for each round advising that applications could not be considered for funding if they did not satisfy all eligibility criteria. This section addressed who was eligible to apply and who was not eligible to apply.

2.12 The second section was titled ‘what the grant money can be used for’. It addressed eligible activities, eligible locations and eligible expenditure, with further detail on the latter appended to the guidelines.

2.13 While there was a high degree of consistency in the eligibility requirements for each stream across the five rounds, there were some key changes over time to entity type requirements; eligible project locations; and the supporting information applicants were required to include with their application.

- To be eligible, applicants had to have an Australian Business Number (ABN) and be either a local governing body or a not-for-profit organisation. Key changes were that:

  - from round two, the requirement for a not-for-profit organisation to be established for at least two years was removed; and

  - from round three, not-for-profit entities were limited to those with incorporated status.

- As a regional grants program, projects were required to be located in an eligible regional area (as defined by the ‘remoteness classification’ of projects). This requirement was modified from round two to include as eligible for funding any projects located in:

  - outer metropolitan regions, or ‘peri-urban areas’, by redefining the geographic boundaries around excluded capital cities23; and

  - an otherwise excluded area if the project seeking funding could clearly demonstrate that significant benefits and employment outcomes would flow to an eligible area.

- The grant opportunity guidelines for round four stated that funding under that round would be targeted at drought-affected communities. To give effect to this, additional eligibility requirements were included such that applications would be assessed as ineligible if the project was not located in a drought-affected location, or if this claim was not satisfactorily evidenced.24

- From round two, applicants to the IP stream were no longer required to include a business case, project management plan, or risk management plan to be eligible.

- The provision of a cost benefit analysis under the IP stream was mandatory in round one for applicants seeking over $1 million and optional for other applicants. This became mandatory for all applicants in round two but returned to being mandatory for only those applicants seeking more than $1 million from round three onwards.
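The round-dependent rules above can be expressed as simple predicates. The following is a hypothetical sketch only: the `Application` fields and function names are invented for illustration, and it covers just the entity-type and IP stream cost benefit analysis rules (the location, drought and round one two-year establishment requirements are omitted).

```python
from dataclasses import dataclass


# Hypothetical data model: field names are illustrative, not taken from any BBRF form.
@dataclass
class Application:
    round_no: int
    has_abn: bool
    entity_type: str                 # "local_government" or "not_for_profit"
    incorporated: bool
    grant_sought: int                # dollars sought under the IP stream
    has_cost_benefit_analysis: bool


def entity_type_eligible(app: Application) -> bool:
    """Entity rules from paragraph 2.13 (the round one two-year rule is omitted)."""
    if not app.has_abn:
        return False
    if app.entity_type == "local_government":
        return True
    if app.entity_type == "not_for_profit":
        # From round three, not-for-profit applicants must be incorporated.
        return app.incorporated or app.round_no < 3
    return False


def cba_required(app: Application) -> bool:
    """IP stream cost benefit analysis rule, which changed between rounds."""
    if app.round_no == 2:
        return True                          # mandatory for all IP applicants in round two
    return app.grant_sought > 1_000_000      # round one, and round three onwards


def meets_entity_and_cba_rules(app: Application) -> bool:
    return entity_type_eligible(app) and (
        app.has_cost_benefit_analysis or not cba_required(app)
    )


nfp = Application(round_no=3, has_abn=True, entity_type="not_for_profit",
                  incorporated=False, grant_sought=500_000,
                  has_cost_benefit_analysis=False)
print(meets_entity_and_cba_rules(nfp))   # False: unincorporated not-for-profit from round three
```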

2.14 While November 2021 advice from Infrastructure to the ANAO25 did not identify any issues with the construction of the eligibility criteria, Infrastructure outlined that there had been some shortcomings in the assessment against the eligibility criteria. Specifically: one ineligible application was awarded funding in the first round; another ineligible application was not removed from consideration in the second round (although it was not awarded grant funding); and a ‘potentially ineligible’ entity received grant funding in the third round.26

Were relevant and appropriate assessment criteria established?

Four relevant and appropriate merit criteria were identified in the guidelines for each round and stream. In a section separate to the merit criteria, the guidelines for each round also included a non-exhaustive list of ‘other factors’ the ministerial panel could consider when deciding which applications to fund. The inclusion of the other factors was not consistent with the key principles for grants administration set out in the CGRGs as it had the potential to undermine the award of grant funding on the basis of merit.

2.15 Under the CGRGs framework, assessment (or merit) criteria play an important role in relation to the key principle of achieving value for money from granting activities. Assessment criteria are ‘used to assess the merits of proposals and, in the case of a competitive grant opportunity, to determine application rankings.’27

2.16 Administering entities are advised by the Department of Finance28 that all assessment criteria for a competitive grant opportunity should be contained within the assessment criteria section of the program guidelines.29 Other details that should be contained there include:

- any ‘prioritisation criteria’ to be applied to applications in the event of oversubscription, such as location, policy priority or target groups; and

- whether the selection process occurs in two stages.

2.17 As reflected in Infrastructure’s responses to questions during Senate Estimates in February 2022³⁰, the assessment criteria for the BBRF comprised two independent sets of criteria that are applied to applications at separate points in the process prior to final funding decisions. Specifically, these are the:

- four merit criteria (see paragraph 2.18), against which eligible applications are assessed by DISR. The results from these assessments provide the basis for Infrastructure’s funding recommendations to the ministerial panel (see paragraph 3.24); and

- ‘other factors’31, that the ministerial panel may apply to applications when deciding which projects to fund (see paragraph 2.22).
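The separation between the two sets of criteria can be modelled as two stages. This is a simplified, hypothetical sketch, not the ANAO's analysis method or Infrastructure's recommendation process: it assumes that recommendations follow descending merit score within the available funding, and it represents the panel's decision as a bare set of approved applications, since no score against the other factors was recorded.

```python
# Hypothetical sketch of the two-stage structure described in paragraph 2.17.
# Stage 1 (assumed rule): eligible applications are ranked by merit score and
# recommended in rank order while funding remains.
# Stage 2: the panel's decision is just a set of approved IDs, because the
# 'other factors' were not scored against each application.


def recommend(apps: list[dict], budget: float) -> set[str]:
    """Recommend applications in descending merit order within the budget."""
    recommended: set[str] = set()
    remaining = budget
    for app in sorted(apps, key=lambda a: a["merit_score"], reverse=True):
        if app["grant"] <= remaining:       # skip any application that no longer fits
            recommended.add(app["id"])
            remaining -= app["grant"]
    return recommended


def share_not_recommended(approved: set[str], recommended: set[str]) -> float:
    """Proportion of approved applications that were outside the merit-based list."""
    if not approved:
        return 0.0
    return len(approved - recommended) / len(approved)


# Illustrative data only (not drawn from BBRF records).
apps = [
    {"id": "A", "merit_score": 36, "grant": 400_000},
    {"id": "B", "merit_score": 33, "grant": 300_000},
    {"id": "C", "merit_score": 28, "grant": 300_000},
]
recommended = recommend(apps, budget=700_000)        # {"A", "B"}
approved = {"A", "C"}                                # a decision departing from rank order
print(share_not_recommended(approved, recommended))  # 0.5
```

Because the second stage takes no structured input, the only way to see the effect of the other factors in this model is to compare the approved set with the merit-based list after the fact.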

Published assessment criteria

2.18 In a section titled ‘assessment criteria’, the grant opportunity guidelines for each round and stream of the BBRF included the following four merit criteria that applications would be assessed against:

- economic benefits the project would deliver to the region;

- social benefits the project would deliver to the region;

- capacity, capability and resources of the applicant to deliver the project; and

- the impact of grant funding on the project (referred to as ‘value for money’ in the first two rounds32).

2.19 The merit criteria were appropriate having regard to the program objectives.

2.20 While the merit criteria remained largely consistent across the rounds, slight changes were made over time to the scores achievable across some criteria. Table 2.2 sets out the scores and weightings applied to each criterion across each funding round and stream.

Table 2.2: Maximum score achievable per criterion for each funding stream and round

| Merit criterion | Round 1 | Round 2 | Round 3 (IP) | Round 3 (CI) | Round 4 | Round 5 |
|---|---|---|---|---|---|---|
| Economic benefits | 15 | 15 | 15 | 10 | 15 | 15 |
| Social benefits | 10 | 10 | 15 | 10 | 15 | 15 |
| Value for moneyᵃ | 5 | 5 | 5 | 5 | 5 | 5 |
| Project delivery | 5 | 5 | 5 | 5 | 5 | 5 |
| Total score | 35 | 35 | 40 | 30 | 40 | 40 |

Note a: The ‘value for money’ criterion was revised to be ‘impact of grant funding’ from round three onwards.

Source: ANAO analysis of BBRF program guidelines.

2.21 When seeking approval of the round three program guidelines, Infrastructure advised the Deputy Prime Minister that equal scoring points for the first two criteria (within each stream) had been adopted to provide better alignment with program objectives. The equivalent briefing for the fourth round did not outline the basis for further amendments to the scores in round four. In April 2022, Infrastructure advised the ANAO that:

In Round Four, changes were made to merit criteria one and two, to make it clear what needed to be addressed by the applicant against these two criterion [sic]. The merit scores for the CI stream were then aligned with those of the IP stream, which enables consistent application of the merit framework when looking at similar criterion across the two streams, consistent with the principles of proportionality set out in the CGRGs.

‘Other factors’

2.22 Reflecting the expectations established by the CGRGs, the Department of Finance’s grant opportunity guidelines template does not contemplate the inclusion of broad and non-specific criteria such as ‘[any] other factors’.33

2.23 In a departure from Finance’s template guidance (see paragraph 2.16), the guidelines for each round of the BBRF have included additional criteria, or ‘other factors’, in a separate section of the guidelines.34 When considering draft guidelines for the program, Finance raised the inclusion of the other factors as an issue and asked whether they involved ‘another assessment process’ and, if so, whether applicants should be given an opportunity to provide information on them in their applications. No changes to the draft guidelines or application form were made in response to Finance’s questions.

2.24 The published guidelines stated that the other factors were to be considered by the ministerial panel at the funding decision stage. These included, but were not limited to:

- the spread of projects and funding across regions;

- the regional impact of each project, including Indigenous employment and supplier-use outcomes;

- other similar existing or planned projects in the region to ensure that there is genuine demand and no duplication of facilities or services;

- other projects or planned projects in the region, and the extent to which the proposed project supports or builds on those projects and the services that they offer;

- the level of funding allocated to an applicant in previous programs;

- reputational risk to the Australian Government; and

- the Australian Government’s priorities.

2.25 In this latter respect (the Australian Government’s priorities), some additional detail was provided against this dot-point in the fourth and fifth rounds. Specifically, the:

- guidelines for both funding streams in round four included an additional sentence that ‘round four of the program will support drought-affected locations by targeting projects that will benefit communities affected by drought’; and

- IP stream guidelines for round five included an additional sentence that ‘round five of the program includes $100 million of funding dedicated to supporting tourism-related infrastructure projects’.35

2.26 An eighth factor was introduced in both sets of program guidelines for the sixth round underway at the time of this ANAO audit.36 Specifically, the panel may also consider the:

community support for projects, which can include support from local MPs [Members of Parliament], councils and other organisations confirming the benefits that will flow to their region, provided through information included in applications and letters of support.

2.27 The ANAO’s analysis was that, although not previously recognised in the guidelines, this factor was a key consideration across all previous funding rounds. While its wording suggests that the confirmation of community support would be evidenced through the application process, the ANAO’s analysis is that such support, particularly from parliamentarians, has largely been coordinated outside the application process, through the offices of the chairs of the panel and of some members of the panel (see paragraph 4.10). Applicants were not asked to address this or any of the other factors when submitting their application.

2.28 The extent to which the funding outcomes have been influenced by the departmental assessments against the four criteria and the ministerial panel’s application of the other factors is examined in the section starting at paragraph 5.3. In no round was DISR asked to assess eligible applications against any of the other factors, nor did Infrastructure assess each eligible application against each of the other factors.37 In April 2022, Infrastructure advised the ANAO of its view as follows:

While a list of such ‘other factors’ is included in the Guidelines, it is non-exhaustive and is not intended to form an assessment criteria under the Guidelines.38 Rather, the reference to ‘other factors’ is no more than an explanation of the Ministerial Panel’s discretion to approve or reject, for whatever proper reason the Panel considered appropriate, grants that met the eligibility and assessment criteria.

2.29 This approach to the other factors is not consistent with the key principles for grants administration set out in the CGRGs, noting that the CGRGs require that practices and procedures be in place that ensure compliance with the key principles. In this respect, similar to the recent report into grants administration by the NSW Government (see footnote 29), the strategic review that informed the development and introduction of the Australian Government’s grants administration framework identified that:

Decision-making in grant programs has been a matter of strong public interest, widespread parliamentary and audit scrutiny, and significant political contention in recent times. The reasons for this lie largely in the ‘discretionary’ nature of many grant programs, the high levels of flexibility built into application assessment procedures, and the consequent lack of transparency in many decision-making processes.39

2.30 It is important that the underlying intent of the CGRGs framework be applied in the design of programs and award of funding under grant programs subject to that framework. The inclusion of the other factors clause in the BBRF guidelines was not consistent with the key principles for grants administration set out in the CGRGs, for reasons including that:

- applicants were not afforded the opportunity to address those factors when applying for grant funding; and

- the introduction of the other factors in the guidelines subsequent to and separate from the merit assessment process undermined the award of grant funding on the basis of competitive and comparative merit.

Recommendation no.1

2.31 The Australian Government amend the Commonwealth Grants Rules and Guidelines to require that, in circumstances where funding decisions may be made by reference to factors that are in addition to or instead of the published assessment criteria:

- applicants be afforded the opportunity to address those other factors as part of their application for funding; and

- records be made as part of the decision-making process as to how each competing applicant had been assessed to perform against each of those factors.

Department of Finance response: Noted.

2.32 Any amendment to the Commonwealth Grants Rules and Guidelines is a matter for consideration by Government. The Department of Finance will brief the Government on the ANAO’s findings and recommendations.

Did the guidelines clearly set out how applications would be scored, and how the scoring results would be used?

Up to and including round four, the grant opportunity guidelines for each round set out how applications would be scored against the merit criteria and stated that the results would provide the basis for determining the most meritorious applications for funding under the program. The guidelines have not accurately reflected the significance of ministerial discretion (represented in the guidelines by the ‘other factors’ clause) as a basis for the panel’s funding decisions.

2.33 For each round, the grant opportunity guidelines identified that eligible applications would proceed to be assessed and scored against each of the published merit criteria. The guidelines for each round set out the maximum score that could be awarded against each criterion and, implicitly, the weighting that was being applied to each criterion. The economic benefits criterion was either the highest weighted criterion or the equal highest (with the social benefits criterion) in each selection process.
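The implicit weighting mentioned in paragraph 2.33 is each criterion's maximum score divided by the total achievable score. The sketch below transcribes the maximum scores from Table 2.2 (treating the single columns for rounds 1, 2, 4 and 5 as applying to both streams, which is an assumption) and checks that economic benefits is the highest or equal highest weighted criterion in every round. The dictionary keys are illustrative labels.

```python
# Maximum score per criterion, transcribed from Table 2.2.
# "vfm_or_impact" is 'value for money' in rounds 1-2 and 'impact of grant funding' thereafter.
MAX_SCORES = {
    "Round 1":      {"economic": 15, "social": 10, "vfm_or_impact": 5, "delivery": 5},
    "Round 2":      {"economic": 15, "social": 10, "vfm_or_impact": 5, "delivery": 5},
    "Round 3 (IP)": {"economic": 15, "social": 15, "vfm_or_impact": 5, "delivery": 5},
    "Round 3 (CI)": {"economic": 10, "social": 10, "vfm_or_impact": 5, "delivery": 5},
    "Round 4":      {"economic": 15, "social": 15, "vfm_or_impact": 5, "delivery": 5},
    "Round 5":      {"economic": 15, "social": 15, "vfm_or_impact": 5, "delivery": 5},
}


def implicit_weights(max_scores: dict[str, int]) -> dict[str, float]:
    """Weight of each criterion = its maximum score / total achievable score."""
    total = sum(max_scores.values())
    return {criterion: score / total for criterion, score in max_scores.items()}


for label, scores in MAX_SCORES.items():
    weights = implicit_weights(scores)
    # Economic benefits should be the highest, or equal highest, weighted criterion.
    assert weights["economic"] == max(weights.values())
    print(label, ", ".join(f"{c} {w:.1%}" for c, w in weights.items()))
```

For example, economic benefits carried 15 of 35 points (about 42.9 per cent) in rounds one and two, and 15 of 40 points (37.5 per cent, equal with social benefits) in rounds four and five.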

2.34 The guidelines for each round outlined that the assessment score awarded to each eligible application would be used to identify which applications represented value with relevant money, and those that did not. As illustrated by Table 2.3, the guidelines also outlined that the assessment scores would determine which applications would be recommended for funding approval.

Table 2.3: Grant opportunity guidelines statements on meeting the merit criteria

| Round | Statement from the BBRF grant opportunity guidelines | Scoring threshold adopted to identify applications considered to be value with relevant money |
|---|---|---|
| 1 and 2 | We will only recommend funding applications that score highly against each of the merit criteria. This ensures Commonwealth funding represents value with relevant money. | 60 per cent of the maximum score that could be achieved against three of the four criteria, 20 per cent against the other criterionᵃ, and 54 per cent overall. |
| 3 | To recommend an application for funding it must score highly against each merit criterion. | 60 per cent of the maximum score that could be achieved against each of the four criteria, and 60 per cent overall. |
| 4 | As this is an open competitive merit-based program, only the highest-ranking applications will be recommended for funding. | As for round 3. |
| 5 and 6 | We will only consider funding applications that score at least 60 per cent against each assessment criterion, as these represent best value with relevant money. | As for round 3. |

Note a: Advice from Infrastructure to the minister was that this criterion ‘assesses the extent to which an application has leveraged partner contributions beyond the mandatory co-funding requirement. All eligible applications are assessed as representing value with relevant money against this criterion having already met the eligibility requirement for co-funding (either having met the mandatory co-funding requirement or having been granted an exceptional circumstances co-funding exemption). Therefore, any score against merit criterion 3, including a score of 1, is assessed as value with relevant money’.

Source: ANAO analysis of departmental records.

2.35 The guidelines indicated that a project remoteness loading may be applied to the total assessment scores of projects. The loading was intended to help applicants from small and remote areas compete against those in larger, better-resourced regional areas. Very remote projects were to receive the highest loading, while inner regional projects were to receive the lowest. Although the program guidelines for the first four rounds flagged the use of a loading, it was only applied to applications under the first two rounds.40 The effect of the loading on scores is discussed from paragraph 3.13.

2.36 The assessment of applications against any assessment criteria, including ‘other factors’, should be transparent and systematic.41 Across all rounds, the guidelines did not set out how applications would be assessed or considered against the ‘other factors’. This adversely affected transparency. It gave applicants little insight into how the panel’s consideration of those factors affected their applications, or into the discretionary nature of those considerations. The impact and significance of the panel’s consideration of the other factors on the approval of applications is examined from paragraph 5.6.

Were roles and responsibilities, including for the decision-making process, clearly identified?

While the decision-making role performed by the ministerial panel was clearly identified in the published guidelines for each round, the membership of the panel was not. In addition, the division of roles and responsibilities between the two administering departments has been refined over time. These changes are reflected in various services schedules to a December 2016 Memorandum of Understanding (MoU).

2.37 Accountable authorities are required to put in place practices and procedures to ensure that grants administration is conducted in a manner consistent with the CGRGs. This includes program documentation that clearly defines the roles and responsibilities of the various parties involved in undertaking grants administration on behalf of the Commonwealth. These roles and responsibilities are typically outlined in the grant opportunity guidelines, where appropriate, or within departmental governance and guidance documents.42

Division of departmental responsibilities

2.38 The respective roles and responsibilities of Infrastructure (as the program policy entity) and DISR (as the provider of Business Grants Hub services) were negotiated and then set out in an MoU signed on 19 December 2016.43 While the roles of each department have been refined over time, the general division of responsibilities between the two is outlined below.

- Infrastructure has had responsibility for:
  - co-development and approval of all BBRF grant opportunity guidelines;
  - administration of co-funding exemptions under the first three rounds;
  - design and application of the remoteness loading for the first two rounds (see paragraphs 2.35 and 3.13);
  - providing advice on the relative merits of the grant applications to the panel;
  - providing secretariat support to the panel during its decision-making process for the first round44; and
  - providing DISR with details of successful applicants.

- DISR has undertaken the following tasks:
  - co-developing and publishing the guidelines and associated materials;
  - receiving and assessing applications against eligibility45 and merit criteria;
  - assessing requests for co-funding exemptions from the fourth round;
  - advising applicants of the funding decisions and providing feedback when requested; and
  - developing and executing grant agreements with successful applicants.

Decision-making responsibilities

2.39 The grant guidelines for each round identified that decisions on projects to be funded would be taken by a ministerial panel, with the membership of each panel to be determined later (membership for each round is set out at Appendix 3). The panel’s membership has not been published in the program guidelines or otherwise announced.46

2.40 The guidelines stated that the panel would make its decisions in consultation with:

- the National Infrastructure Committee of Cabinet (NICC) in the first and second rounds; and

- Cabinet, in the subsequent four rounds.

2.41 The ANAO’s analysis confirmed that the guidelines accurately identified the panel as the decision-maker. There was only one round in which any changes to the list of successful applicants were made through the consultation process. Specifically, two projects selected by the panel to receive funding in the second round were removed from the list of successful applicants following the consultation process.

Have lessons learned from previous rounds been effectively used to inform the design and delivery of subsequent rounds?

Infrastructure has practices in place to use lessons learned from previous rounds to inform the design and delivery of subsequent BBRF funding rounds. However, Infrastructure agreed to a recommendation in an earlier audit of the award of regional grant funding. Notwithstanding that recommendation, it has not taken appropriate steps to analyse why the panel diverged from the results of the documented merit assessments. Such analysis would inform the development of the guidelines for later rounds and/or adjustments to the conduct of the assessment process. For example, the department could undertake its own assessment of all the factors that the guidelines state may be taken into account when funding decisions are made.

2.42 The ANAO has examined the award of funding under a number of regional grant programs administered by Infrastructure since the introduction of the grants administration framework in 2009 (Appendix 4 provides a summary of these audits).

2.43 In November 2021, Infrastructure advised the ANAO of how it has applied a continuous improvement approach to its management of the BBRF. The actions taken have included conducting post-implementation reviews of each round. Various changes have been made over time to the grant opportunity guidelines, assessment work practices and the approach to providing funding recommendations to the ministerial panel.

2.44 In its advice to the ANAO, Infrastructure outlined that there had been some shortcomings in the application of the eligibility criteria. These had resulted in ineligible applications being erroneously assessed as eligible in multiple rounds of the BBRF.47 At Infrastructure’s request, DISR undertook an internal assurance process over the round five eligibility assessments before the assessment results were provided to the ministerial panel for consideration. Infrastructure advised the ANAO that ‘while the report did not identify any systemic or significant issues, it did identify potential issues with the eligibility of a number of applications.’48 DISR’s view differed: it considered that the report had not identified any eligibility issues affecting the applications provided to the panel.

2.45 Infrastructure advised the ANAO that it has also undertaken some ‘targeted continuous improvement activities’ over the past 12 to 18 months. It drew particular attention to work it has undertaken to improve the reliance it is able to place on eligibility assessments undertaken by DISR (see paragraph 2.49). Infrastructure also outlined the following broader improvements:

- November 2020: establishment of a Regional Initiatives Implementation Office, as the program management office function for the Regional Development, Local Government and Regional Recovery Division;

- December 2020: endorsement of the program implementation framework for the division, and approval of the project management office roles and functions;

- February 2021: a number of foundational project management documents were endorsed by the Regional Initiatives Governance Board, the board’s terms of reference were refreshed, and program governance and management roles and responsibilities were clarified. In addition, revised program status report templates were developed, to enable the board and departmental executive to quickly understand the level of risk inherent in individual programs at any given time, and barriers to program delivery;

- March 2021: the Regional Initiatives Implementation Framework was finalised, and a phased governance re-set was undertaken for divisional programs with the approach to the BBRF endorsed in April 2021; and

- July 2021: the board considered the Regional Programs Branch risk and probity plans. A probity adviser was to be appointed to review the branch probity plan as well as to develop a divisional probity plan.49

2.46 Infrastructure also advised the ANAO in February 2022 that the guidelines were reviewed after each BBRF round, and that a number of changes have been made throughout the life of the program. These included some changes to the merit criteria and adjustments to the assessment criteria framework.

2.47 Infrastructure agreed to a recommendation in the ANAO audit of the Regional Jobs and Investment Packages (RJIP)50 that:

In circumstances where there is a high incidence of grant decision-makers indicating disagreement with the assessment and scoring of applications against published criteria, the Department of Infrastructure, Transport, Cities and Regional Development require, in consultation with any service provider, that assessment practices and procedures be reviewed to identify whether there are any shortcomings and, if appropriate, make adjustments.

2.48 As was observed in the audit of that program, in the earlier BBRF rounds there was a high incidence of the panel not agreeing to funding recommendations. In more recent rounds, Infrastructure has become less precise in its funding recommendations. It has not continued the improvement evident in the ANAO’s audit of the National Stronger Regions Fund (see paragraph 2.42). Instead, its practices resemble those observed in ANAO audit reports of the predecessor programs, the Regional and Local Community Infrastructure Program and the Regional Development Australia Fund. Specifically, the ANAO identified a return to:

- broadbanding applications rather than ranking them individually on merit; and
- pooling applications for the decision-maker to select from, rather than recommending which particular applications should be awarded grants from within the available program funding.

The Joint Committee of Public Accounts and Audit, in its review of those earlier ANAO audit reports, supported the ANAO’s findings and recommendations (see Appendix 4).

2.49 In the more recent BBRF rounds, the applications assessed as performing best against the published assessment criteria have not been the most successful. The decision-making records indicate a greater reliance on the ‘other factors’ (see the section starting at paragraph 5.3). Even so, there has been no consequential adjustment to either the criteria in the grant guidelines or the assessment process. In April 2022, Infrastructure advised the ANAO that:

Given the issues raised by the BBRF Ministerial Panel about the Department’s assessment of certain project applications against the assessment criteria for rounds one and two, and in response to lessons being learned through the Yarrabee Consulting – Assurance Review of the Regional Jobs and Investment Packages Program, the Department worked with DISER to review and update the documents forming part of the BBRF Assessment Framework. The Department conducted a quality assurance check on a sample of assessments during the merit assessment process, and DISER used a separate team of experienced staff to undertake quality assurance checks in real time, with a view to achieving a better quality of assessments during the merit assessment process; thereby ensuring assessments complied with agreed procedures. More recently, the Department has worked with DISER to implement external assurance of assessment results, prior to them being submitted to the Ministerial Panel for consideration.

3. Funding recommendations

Areas examined

The ANAO examined the advice provided to the ministerial panel by the Department of Infrastructure, Transport, Regional Development, Communications and the Arts (Infrastructure) to inform the funding decisions under the first five rounds of the Building Better Regions Fund (BBRF).

Conclusion